Using Logistic and Softmax Regression with TensorFlow

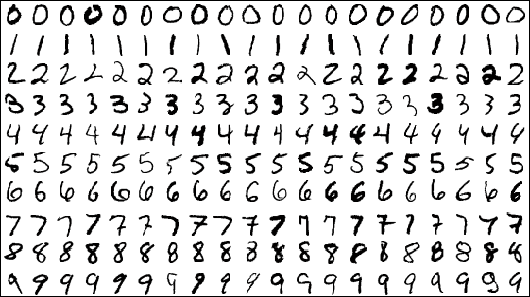

In this TensorFlow tutorial, we train a softmax regression model. The model should be able to look at the images of handwritten digits from the MNIST data set and classify them as digits from 0 to 9. For instance, our model might evaluate an image of a six and be 90% sure it is a six, give a 5% chance to it being an eight, and leave a bit of probability to all the other digits.
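The softmax function is what turns the model's raw per-digit scores into probabilities like these. Below is a minimal pure-Python sketch, not the TensorFlow implementation. The scores are hypothetical and chosen so that the output matches the example above: 90% for six, 5% for eight, and the remaining 5% spread evenly over the other eight digits.

```python
import math

def softmax(scores):
    """Turn raw scores (logits) into probabilities that sum to 1."""
    # Subtracting the largest score does not change the result,
    # but it keeps math.exp from overflowing on large scores.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical scores for digits 0-9, picked so the probabilities come out
# as 0.9 for six, 0.05 for eight and 0.00625 for each of the other digits.
scores = [0.0] * 10
scores[6] = math.log(144)
scores[8] = math.log(8)

probs = softmax(scores)
predicted_digit = max(range(10), key=lambda d: probs[d])
print(predicted_digit, round(probs[6], 2), round(probs[8], 2))  # prints 6 0.9 0.05
```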

The data set is split into three parts: 55,000 data points of training data (mnist.train), 10,000 points of test data (mnist.test), and 5,000 points of validation data (mnist.validation).

Prerequisites

Before running the code from this tutorial, download the input_data.py file and place it in the same folder as your softmax regression script. The script encapsulates the logic for downloading and loading the MNIST data set.

To import the data set, proceed as follows:

```python
# Import MNIST data
import input_data

mnist = input_data.read_data_sets("/tmp/data/", one_hot=True)
```
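Passing `one_hot=True` makes the loader return each label as a 10-entry vector instead of a single integer. The sketch below shows that encoding. The `one_hot` helper is hypothetical and is not part of input_data.py.

```python
def one_hot(label, num_classes=10):
    """Encode a digit label as a list with 1.0 at the label's index."""
    vec = [0.0] * num_classes
    vec[label] = 1.0
    return vec

print(one_hot(6))  # prints [0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0]
```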

Building a computational graph

First of all, we set up the graph inputs: the images and their labels. MNIST images are 28×28 matrices of pixels. The simplest way to feed them to the model is to flatten each matrix into a single vector of 28 × 28 = 784 values. The input tensor x therefore has the shape [None, 784], where 784 is the length of a flattened image and None means the number of images in a batch is left unspecified.
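Flattening simply concatenates the rows of the image. Here is a small sketch of row-major flattening, the order NumPy's default `reshape` uses. We assume that is how the loader flattens MNIST images. The toy image is hypothetical.

```python
# A toy 28x28 "image": every pixel is 0.0 except one at row 2, column 5.
image = [[0.0] * 28 for _ in range(28)]
image[2][5] = 1.0

def flatten(matrix):
    """Concatenate the rows of a matrix into one flat list (row-major order)."""
    return [pixel for row in matrix for pixel in row]

flat = flatten(image)
print(len(flat))  # prints 784
# Pixel (row r, column c) lands at index r * 28 + c of the flat vector.
print(flat.index(1.0))  # prints 61
```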

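The second placeholder, y, holds the true labels. Each label is a vector of 10 entries, one per digit (0–9). Because the data is loaded with one_hot=True, the entry for the correct digit is 1 and all others are 0, so each label can be read as a probability distribution that puts all its mass on the right digit. Again, None leaves the number of labels unspecified.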

```python
import tensorflow as tf

# TF graph input
x = tf.placeholder("float", [None, 784])  # MNIST data image of shape 28*28=784
y = tf.placeholder("float", [None, 10])   # 0-9 digits recognition => 10 classes
```

Now let's define and initialize the weights W and the biases b for our model.

```python
# Set model weights
W = tf.Variable(tf.zeros([784, 10]))
b = tf.Variable(tf.zeros([10]))
```

Before Variables can be used, they must be initialized:

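```python
# Initializing the variables (tf.global_variables_initializer replaces the
# deprecated tf.initialize_all_variables in TensorFlow 1.x)
init = tf.global_variables_initializer()
```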

Model and cost function

For this example, we start with the simplest possible model: a linear mapping xW + b, followed by a softmax that turns the 10 outputs into probabilities:

```python
# Linear mapping followed by softmax; each row of model is a probability vector
model = tf.nn.softmax(tf.matmul(x, W) + b)
```
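To see what this line computes for a single image, here is a pure-Python sketch of the same forward pass. The shapes mirror W (784 × 10) and b (10). The pixel values are hypothetical. Because W and b start at zero, every logit is 0 and the untrained model assigns the same probability, 0.1, to every digit.

```python
import math

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def predict(x, W, b):
    """Softmax regression for one flattened image x (length 784).

    W is a 784 x 10 list of lists and b a list of 10 biases, mirroring
    the shapes of the TensorFlow variables W and b."""
    logits = [sum(x[i] * W[i][j] for i in range(len(x))) + b[j]
              for j in range(len(b))]
    return softmax(logits)

W = [[0.0] * 10 for _ in range(784)]  # zero-initialized, like tf.zeros([784, 10])
b = [0.0] * 10                        # zero-initialized, like tf.zeros([10])
x = [0.5] * 784                       # hypothetical pixel intensities

probs = predict(x, W, b)
print(probs)  # all ten probabilities equal 0.1 before training
```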

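As the cost function, we use the cross-entropy between the one-hot labels y and the predicted probabilities model:

```python
# Cross-entropy, summed over all classes and all images in the batch
cost_function = -tf.reduce_sum(y * tf.log(model))
```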
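For a single image, the cross-entropy reduces to minus the log of the probability assigned to the correct digit, because the one-hot label zeroes out every other term. The sketch below computes it by hand for the 90%/5% example from the introduction and for the untrained, uniform model. The `eps` clamp against log(0) is an addition of this sketch and is not part of the tutorial's TensorFlow code. TensorFlow also provides `tf.nn.softmax_cross_entropy_with_logits`, which computes the same quantity from the raw logits in a numerically stable way. Also note that `tf.reduce_sum` with no axis sums over every image in the batch, so the cost grows with the batch size.

```python
import math

def cross_entropy(labels, probs, eps=1e-12):
    """-sum(y * log(p)) for one example; eps guards against log(0)."""
    return -sum(y * math.log(max(p, eps)) for y, p in zip(labels, probs))

label_six = [0.0] * 10
label_six[6] = 1.0

confident = [0.00625] * 10
confident[6], confident[8] = 0.9, 0.05  # the 90% / 5% example
uniform = [0.1] * 10                    # untrained model with zero weights

print(round(cross_entropy(label_six, confident), 4))  # prints 0.1054
print(round(cross_entropy(label_six, uniform), 4))    # prints 2.3026
```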

Training a model

To minimize the cross-entropy, we use gradient descent with a learning rate (step size) of 0.01 and feed the training data in mini-batches:

```python
learning_rate = 0.01
optimizer = tf.train.GradientDescentOptimizer(learning_rate).minimize(cost_function)

# batch_size, total_batch and the session sess are set up in the complete listing below
for i in range(total_batch):
    batch_xs, batch_ys = mnist.train.next_batch(batch_size)
    # Fit training using batch data
    sess.run(optimizer, feed_dict={x: batch_xs, y: batch_ys})
```
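`GradientDescentOptimizer` computes the gradients automatically. To show what a single update does, the sketch below performs the same kind of step by hand on one example. For cross-entropy on softmax outputs, the derivative of the loss with respect to logit j is p_j − y_j. The weight gradient is therefore x_i · (p_j − y_j), and the bias gradient is p_j − y_j. The toy problem (3 features, 3 classes) is hypothetical and kept small for readability. It uses the tutorial's learning rate of 0.01.

```python
import math

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def loss_and_step(x, label, W, b, learning_rate):
    """Return the current cross-entropy, then apply one gradient descent step in place.

    For softmax + cross-entropy, d(loss)/d(logit_j) = p_j - y_j, so
    d(loss)/dW[i][j] = x_i * (p_j - y_j) and d(loss)/db[j] = p_j - y_j."""
    n_in, n_out = len(W), len(b)
    logits = [sum(x[i] * W[i][j] for i in range(n_in)) + b[j] for j in range(n_out)]
    p = softmax(logits)
    loss = -sum(y * math.log(pj) for y, pj in zip(label, p))
    grad = [pj - y for pj, y in zip(p, label)]
    for i in range(n_in):
        for j in range(n_out):
            W[i][j] -= learning_rate * x[i] * grad[j]
    for j in range(n_out):
        b[j] -= learning_rate * grad[j]
    return loss

# Hypothetical toy problem: 3 input features, 3 classes, true class 2.
x = [1.0, 0.5, -0.5]
label = [0.0, 0.0, 1.0]
W = [[0.0] * 3 for _ in range(3)]
b = [0.0] * 3

losses = [loss_and_step(x, label, W, b, learning_rate=0.01) for _ in range(100)]
print(losses[0] > losses[-1])  # prints True: the loss shrinks step by step
```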

Evaluating the model

After training the model, we want to estimate its accuracy. For each image, we compare the predicted digit (the index of the largest probability in model) with the true digit (the index of the 1 in the one-hot label y):

```python
correct_predictions = tf.equal(tf.argmax(model, 1), tf.argmax(y, 1))
```

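Then, compute the accuracy as the fraction of correct predictions:

```python
# Cast the booleans to 1.0/0.0 and average them
accuracy = tf.reduce_mean(tf.cast(correct_predictions, "float"))
```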
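The argmax comparison and the mean of the cast booleans can be mirrored in plain Python. The following sketch shows the same arithmetic on four hypothetical predictions with three classes, two of which are correct.

```python
def argmax(values):
    """Index of the largest entry (Python's max keeps the first one on ties)."""
    return max(range(len(values)), key=lambda i: values[i])

def accuracy(predicted_probs, one_hot_labels):
    """Fraction of rows whose most probable class matches the label's 1."""
    correct = [argmax(p) == argmax(y) for p, y in zip(predicted_probs, one_hot_labels)]
    # Booleans cast to 1.0/0.0 and averaged, like tf.reduce_mean(tf.cast(...)).
    return sum(float(c) for c in correct) / len(correct)

probs = [[0.1, 0.7, 0.2], [0.6, 0.3, 0.1], [0.2, 0.2, 0.6], [0.3, 0.4, 0.3]]
labels = [[0, 1, 0], [0, 0, 1], [0, 0, 1], [1, 0, 0]]
print(accuracy(probs, labels))  # prints 0.5
```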

Finally, we can evaluate our accuracy on the test data.

```python
print("Accuracy:", accuracy.eval({x: mnist.test.images, y: mnist.test.labels}))
```

Running the code

Now, try to run the example source code:

```python
# Requires TensorFlow 1.x (graph mode with placeholders and sessions)

# Import MNIST data
import input_data
mnist = input_data.read_data_sets("/tmp/data/", one_hot=True)

import tensorflow as tf

# Set parameters
learning_rate = 0.01
training_iteration = 30
batch_size = 100
display_step = 2

# TF graph input
x = tf.placeholder("float", [None, 784])  # MNIST data image of shape 28*28=784
y = tf.placeholder("float", [None, 10])   # 0-9 digits recognition => 10 classes

# Create a model

# Set model weights
W = tf.Variable(tf.zeros([784, 10]))
b = tf.Variable(tf.zeros([10]))

# Construct a linear model
model = tf.nn.softmax(tf.matmul(x, W) + b)  # Softmax

# Minimize error using cross entropy
cost_function = -tf.reduce_sum(y * tf.log(model))

# Gradient Descent
optimizer = tf.train.GradientDescentOptimizer(learning_rate).minimize(cost_function)

# Initializing the variables
init = tf.global_variables_initializer()

# Launch the graph
with tf.Session() as sess:
    sess.run(init)

    # Training cycle
    for iteration in range(training_iteration):
        avg_cost = 0.
        total_batch = int(mnist.train.num_examples / batch_size)
        # Loop over all batches
        for i in range(total_batch):
            batch_xs, batch_ys = mnist.train.next_batch(batch_size)
            # Fit training using batch data
            sess.run(optimizer, feed_dict={x: batch_xs, y: batch_ys})
            # Compute average loss
            avg_cost += sess.run(cost_function, feed_dict={x: batch_xs, y: batch_ys}) / total_batch
        # Display logs per iteration step
        if iteration % display_step == 0:
            print("Iteration:", '%04d' % (iteration + 1), "cost=", "{:.9f}".format(avg_cost))

    print("Tuning completed!")

    # Test the model
    predictions = tf.equal(tf.argmax(model, 1), tf.argmax(y, 1))
    # Calculate accuracy
    accuracy = tf.reduce_mean(tf.cast(predictions, "float"))
    print("Accuracy:", accuracy.eval({x: mnist.test.images, y: mnist.test.labels}))
```

As a result, we trained a softmax regression model that is able to look at images of handwritten digits and predict what digits they are.
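With the parameters in the listing, each of the 30 training iterations makes total_batch = int(55000 / 100) = 550 gradient descent updates, so training performs 30 × 550 = 16,500 updates in total. The sketch below mimics one pass over the training set with a hypothetical `iterate_minibatches` helper. It assumes the data is shuffled once per pass and then consumed in consecutive slices. That is our understanding of how repeated `next_batch` calls behave, but this is not the actual input_data.py code.

```python
import random

def iterate_minibatches(num_examples, batch_size, seed=0):
    """Hypothetical stand-in for repeated mnist.train.next_batch calls.

    Shuffles the example indices once, then yields them batch_size at a time."""
    indices = list(range(num_examples))
    random.Random(seed).shuffle(indices)
    for start in range(0, num_examples - batch_size + 1, batch_size):
        yield indices[start:start + batch_size]

batches = list(iterate_minibatches(55000, 100))
print(len(batches))       # prints 550
print(len(batches) * 30)  # prints 16500, the updates over 30 training iterations
```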

Further reading

- Implementing Linear Regression with TensorFlow

- Basic Concepts and Manipulations with TensorFlow

- Using k-means Clustering with TensorFlow