Performance Comparison of Ruby Frameworks 2016

The Ruby ecosystem is constantly evolving. A lot has changed in the engineering world since our comparison of Ruby frameworks in 2014. Over those two years, we received several requests from fellow engineers asking for an updated benchmark. So, here is the 2016 edition, with more things tested.

What’s under evaluation?

Of course, there are a lot of performance benchmarks on the web. For instance, this one is very good and compares different frameworks as they operate in a viable production configuration.

However, we wanted to understand how Ruby frameworks behave when used as basic solutions with default settings. The idea was to measure only framework speed, not the performance of the whole setup. All the tests were extremely simple, which allowed for comparing almost anything and avoiding side effects caused by very specific optimizations.

So, we implemented bare minimum testing samples for this benchmark and evaluated the performance of:

- Ruby frameworks (compared with Ruby)

- Ruby template engines

- Rack application servers

- Ruby ORM tools

- other languages and frameworks

All of the frameworks and tools were tested in production mode with logging disabled. Performance was measured while they executed the following tasks:

- Languages: Print a “Hello, World!” message.

- Frameworks: Generate a “Hello, World!” web page.

- Template engines: Render a template with one variable.

- Application servers: Run five simple apps in succession, each carrying out one action, such as reading database records, showing environment variables, or just sleeping for a second.

- ORMs: Perform various database operations, for example, loading all records with associations, selecting a fragment of a saved JSON object, and updating JSON.

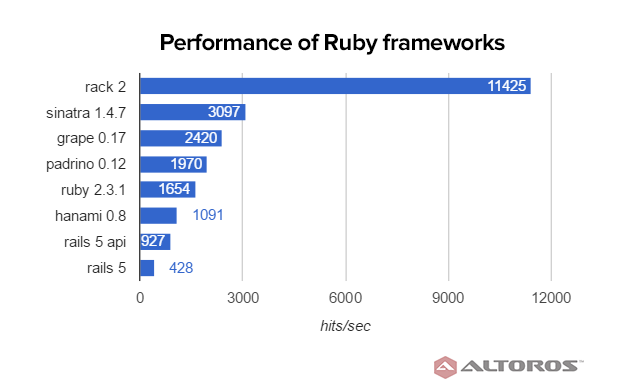

Performance of Ruby frameworks

To compare Ruby frameworks, I chose ones that are reasonably popular and actively developed: Grape, Hanami, Padrino, Rack, Ruby on Rails, and Sinatra. If you have been following my series of benchmarks, you might have noticed the absence of Goliath from the list. Sadly, it has been more than two years since its latest release, so I did not include the framework in the tests. For all Rack-based frameworks, Puma was used as the application server.

Benchmarking tool. I began the study by selecting a testing tool, and the search was not in vain: I found GoBench. I liked this tool better than ApacheBench, which hasn’t been updated for 10 years. Wrk is also good for local testing. Other benchmarking tools are available, and some even let you create a cluster.

To get started with GoBench, I ran the gobench -t 10 -u http://127.0.0.1 command and set the concurrency level to 100 (the -c parameter).

Testing samples. In addition, I prepared a number of “Hello, World!” web applications and used them to measure the number of HTTP requests that the technologies under comparison could serve per second.
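A “Hello, World!” sample of this kind can be very small. The sketch below shows what such an app might look like for plain Rack. The class name `HelloWorld` is hypothetical and is not taken from the benchmark's samples, and the lowercase header names assume the Rack 3 convention.

```ruby
# A minimal Rack-style application (hypothetical, not the benchmark's code).
# Any object that responds to #call(env) and returns
# [status, headers, body] satisfies the Rack interface.
class HelloWorld
  BODY = "Hello, World!".freeze

  def call(_env)
    headers = {
      "content-type"   => "text/plain",
      "content-length" => BODY.bytesize.to_s
    }
    [200, headers, [BODY]]
  end
end

# In a config.ru file, the app would be mounted with:
#   run HelloWorld.new
```

Because the app does nothing except return a constant response, the request rate measured against it mostly reflects the overhead of the framework and the server in front of it.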

Performance results. As you might expect, Rack and Sinatra demonstrated the best results.

A simple, single-threaded Ruby server was a bit faster than Rails 5 in API mode, while Hanami, a modern Rails killer, achieved only slightly better results. In my previous benchmark, Sinatra was three times faster than Rails, and now it is seven times faster. Good progress, Sinatra!

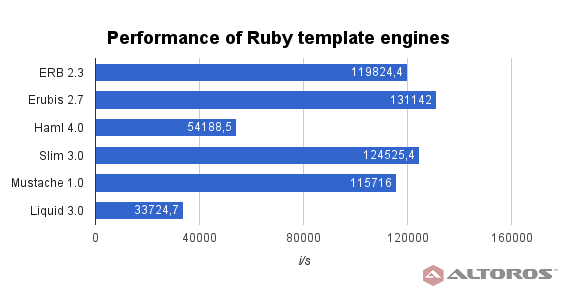

Performance of Ruby template engines

The tested template engines are ERB, Erubis, Haml, Liquid, Mustache, and Slim. Here is the Ruby script I used to measure their performance, that is, the number of templates that can be compiled per second. The image below sums up the speed test results for the template engines.

Erubis had the best performance among the tested solutions. Slim, as always, was the most compact, and it was also faster than ERB.

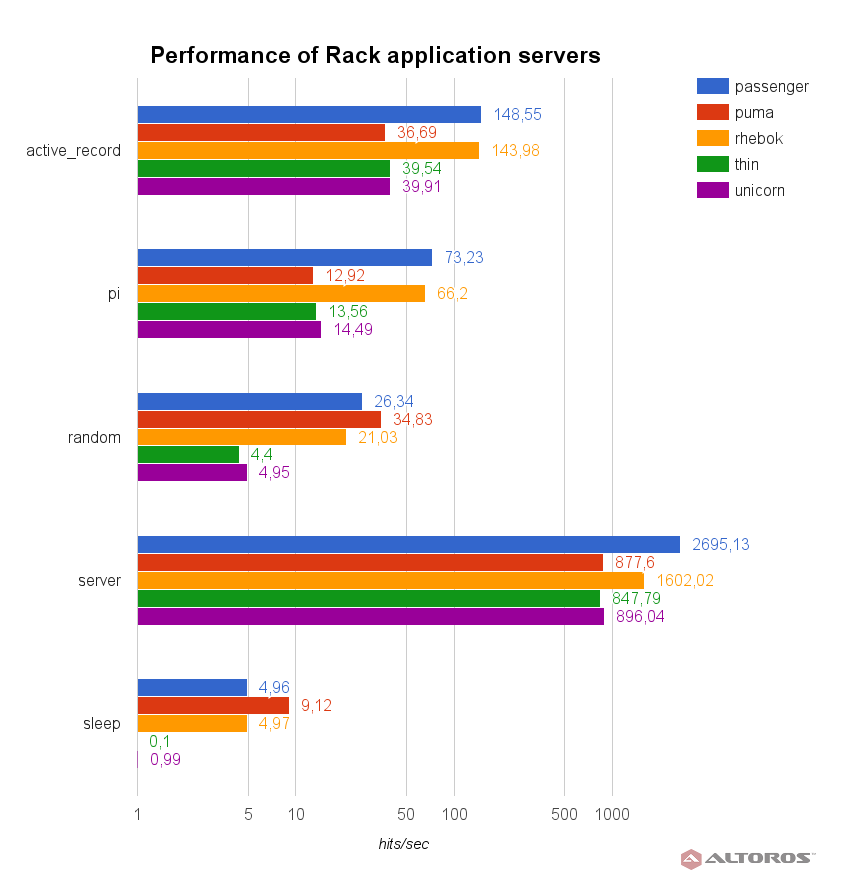
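For a feel of what a measurement like this involves, here is a minimal sketch for ERB only, using just the Ruby standard library. It is not the script used in the benchmark. The template text and the method names are assumptions, and it parses the template once and times only rendering; moving `ERB.new` inside the loop would include compilation in the measurement.

```ruby
require "erb"
require "benchmark"

# Template with a single variable, matching the task description.
TEMPLATE = "Hello, <%= name %>!".freeze

# Renders the template once with the given value (hypothetical helper).
def render_once(name)
  ERB.new(TEMPLATE).result_with_hash(name: name)
end

# Renders the template `iterations` times and returns renders per second.
def erb_renders_per_second(iterations)
  erb = ERB.new(TEMPLATE) # parse once, render many times
  elapsed = Benchmark.realtime do
    iterations.times { erb.result_with_hash(name: "World") }
  end
  iterations / elapsed
end

# Example usage:
#   puts render_once("World")
#   puts erb_renders_per_second(100_000).round
```

The other engines could be measured the same way by swapping in their own render calls, as long as each one is given an equivalent one-variable template.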

Performance of Rack application servers

The Rack application servers included in the benchmark are Phusion Passenger, Puma, Rhebok, Thin, and Unicorn. As with the frameworks, we measured the number of HTTP requests served per second with each server. You can find the source code of the tests in this GitHub repo.

Five sample applications perform the following tasks:

- active_record: shows a list of users from database records.

- pi: calculates the pi number.

- server: lists environment variables.

- sleep: waits one second before responding.

- random: selects which application to start: server, sleep, or pi.
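To make these tasks more concrete, here is a rough sketch of four of the apps as Rack-style lambdas. It is not the code from the repository. All names are hypothetical, and the Leibniz series is only an assumed way of calculating pi, since the method used in the tests is not stated here. The active_record app is left out because it needs a database.

```ruby
# Rack-style sketches of four sample apps (hypothetical code).
PLAIN_TEXT = { "content-type" => "text/plain" }.freeze

# server: lists environment variables, one per line.
SERVER_APP = lambda do |_env|
  body = ENV.map { |key, value| "#{key}=#{value}" }.join("\n")
  [200, PLAIN_TEXT, [body]]
end

# sleep: waits before responding (one second in the benchmark).
def sleep_app(seconds = 1)
  lambda do |_env|
    sleep(seconds)
    [200, PLAIN_TEXT, ["Slept for #{seconds}s"]]
  end
end

# pi: approximates pi with the Leibniz series (an assumed method).
def leibniz_pi(terms)
  4.0 * (0...terms).sum { |k| (-1)**k / (2.0 * k + 1) }
end

PI_APP = lambda do |_env|
  [200, PLAIN_TEXT, [leibniz_pi(10_000).to_s]]
end

# random: picks one of the given apps for each request.
def random_app(apps)
  ->(env) { apps.sample.call(env) }
end

# Example usage in a config.ru file:
#   run random_app([SERVER_APP, sleep_app, PI_APP])
```

The apps stress different things: the sleep app mostly shows how a server copes with waiting requests, while the pi app keeps the CPU busy.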

As you can see in the image below, the fastest Rack application server was Passenger, and Rhebok came really close to it.

Puma, which was recently made the default server in Rails, won just one test. Compared to my previous benchmark, Unicorn has done a good job and now demonstrates results very similar to Thin.

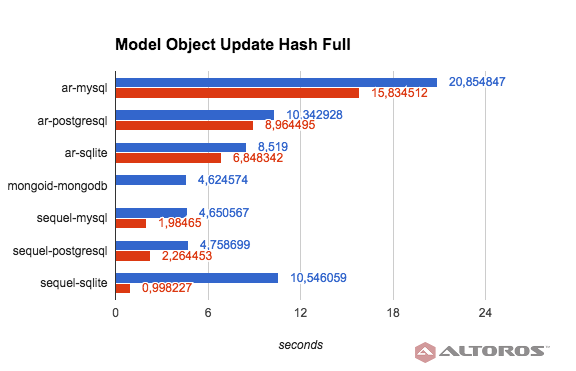

Performance of ORMs

In this part of the benchmark, we focused on ORMs and tested how much time Active Record, Sequel, and Mongoid needed to process a given request.

Seven years ago, Jeremy Evans created an amazing benchmarking tool, simple_orm_benchmark. Our team has updated it and tested Active Record and Sequel with MySQL, PostgreSQL, and SQLite, as well as Mongoid with MongoDB. The six new tests, added to the existing 34, cover a feature common to all modern databases: support for JSON types.

The versions used in this benchmark include MySQL 5.7.15, PostgreSQL 9.5.4, SQLite 3.14.2, MongoDB 3.2.9, Active Record 5, Sequel 4.38, Mongoid 6, and Ruby 2.3.1.

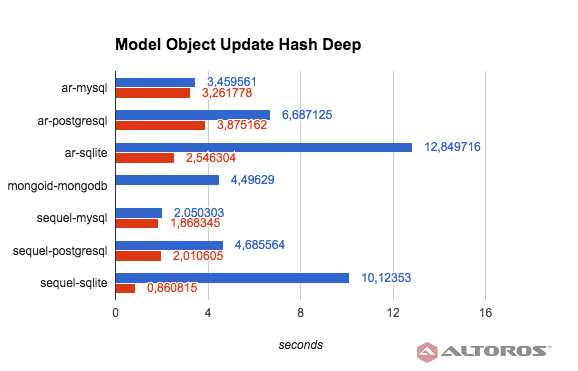

In the diagram below, you will find the most interesting results of the tests (fewer seconds means better performance). Red-colored rows in the images represent measurements with transactions, while blue-colored ones represent those without transactions. You can find all the performance results for the Ruby ORMs in this CSV file.

Pseudocode for the test: Party.where(id: id).update(:stuff=>{:pumpkin=>2, :candy=>1})

The diagram below indicates the time needed to perform the test with updating a JSON field.

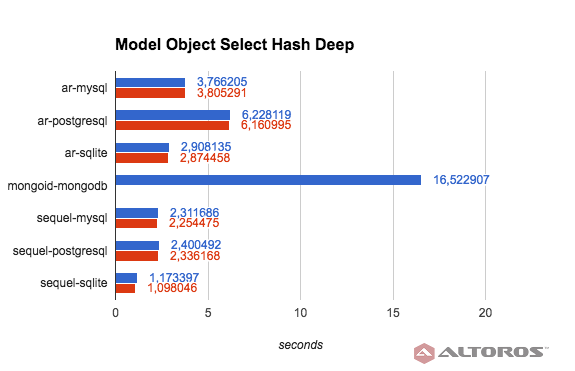

Pseudocode for the test: Party.where(id: id).update_all(["stuff = JSON_SET(stuff, '$.pumpkin', ?)", '2'])

The diagram below illustrates the time needed to perform the test with updating a record by a JSON fragment.

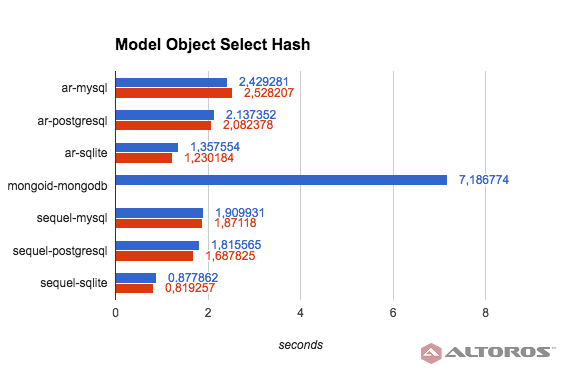

Pseudocode for the test: Party.find_by(:id=>id)

The diagram below demonstrates the time needed to perform the test with selecting records by a specified attribute.

Pseudocode for the test: Party.find_by("stuff->>'$.pumpkin' = ?", '1')

The diagram below illustrates the time needed to perform the test with selecting records by a JSON fragment.

Although Active Record announced support for JSON types, that is not quite the full picture. In practice, the support only covers creating a new JSON object painlessly and reading it back from the database. If you update the object through the model, the whole object is overwritten. To update part of the object, or to select an object by one of its fragments, you have to use raw SQL, which differs for every database.
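The difference between overwriting the whole object and updating a part of it can be shown with an in-memory analogy. The sketch below does not touch a database or Active Record. It only mimics the two behaviors with the standard `json` library, and the constant and method names are hypothetical.

```ruby
require "json"

# The stored JSON value, kept as a string the way a database column would hold it.
STORED = JSON.generate("pumpkin" => 1, "candy" => 1)

# Whole-object update: the new value replaces everything that was stored,
# so keys that are not passed again are lost.
def replace_document(_stored, new_attributes)
  JSON.generate(new_attributes)
end

# Partial update: change one key and keep the rest. This is the effect
# that a function such as MySQL's JSON_SET achieves inside the database.
def set_key(stored, key, value)
  JSON.generate(JSON.parse(stored).merge(key => value))
end

# Example usage:
#   replace_document(STORED, "pumpkin" => 2)  # "candy" is gone
#   set_key(STORED, "pumpkin", 2)             # "candy" is kept
```

With an ORM, the first behavior is what a plain model update gives you, and the second is what the raw SQL in the pseudocode above is needed for.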

Moreover, to get JSON field support in SQLite, you need SQLite compiled with the json1 module, which is enabled via a SQL query. Alternatively, you can simply use the Amalgalite gem, but only if you do not need Active Record, because the activerecord-amalgalite-adapter gem is obsolete.

| Inserting JSON | Yes | Yes | Yes |

| Reading JSON | Yes | Yes | Yes |

| Supporting JSON types in models | Yes | Yes (except SQLite) | Yes (only for PostgreSQL) |

| Updating a JSON object partially | Yes | Raw SQL | Raw SQL |

| Searching by JSON fragments | Yes | Raw SQL | Raw SQL |

In general, Sequel was faster than Active Record and Mongoid. Mongoid, however, is more intuitive, and implementing the tests with it took less time than with Active Record or Sequel.

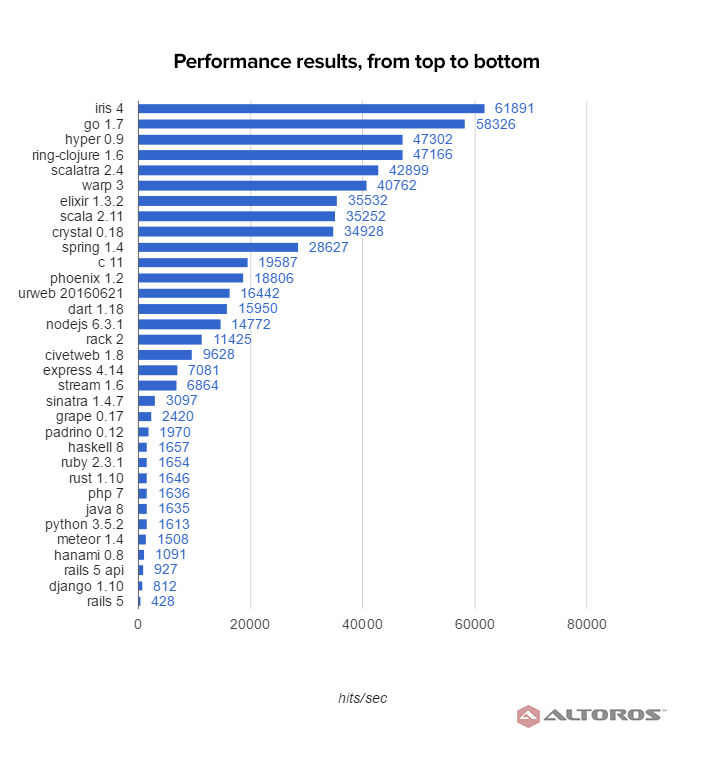

Performance of other languages and frameworks

In the final part of the benchmark, we compared Ruby frameworks with a number of other languages and frameworks, such as Crystal, Python, Elixir, Go, Java, Express, Meteor, Django, Phoenix, and Spring.

Test environment. As in the evaluation of Ruby frameworks described earlier, GoBench was used as the testing tool, and the frameworks were kept as close to their default behavior as possible. You can check out these “Hello, World” web apps for the testing samples.

Performance results. Here comes the surprising part of this benchmark.

You can see that the Go language and Iris, a Go framework, are the absolute winners, handling about 60,000 requests per second. They are followed by Ring (Clojure), Scalatra (Scala), and Warp (Haskell). Elixir, Scala, and Crystal (a Ruby-like language inspired by the speed of C) share third place. Next come Spring (Java), Hyper (Rust), C, Phoenix (Elixir), Ur/Web, Dart, and Node.js.

Unfortunately, the Ruby frameworks (Rack, Sinatra, Padrino, Grape, Rails, and Hanami) had the poorest results, together with Express (Node.js), Stream (Dart), Haskell, Rust, PHP, Java, Python, Meteor (Node.js), and Django (Python).

Conclusion

The frameworks and tools in this benchmark were configured as close as possible to their default behavior, which essentially created equal conditions for all of them.

Even though Rack, Sinatra, Padrino, Grape, Rails, and Hanami demonstrated relatively low performance compared to other frameworks in our “Hello, World” benchmark, it does not mean that Ruby is the wrong choice. In real life—when you scale your app horizontally, use multiple workers and threads, and know all the advantages of a framework—the results may be very different. It is also true that your preferences and needs may change at some point. For more details, check out the full performance benchmark.

However, first of all, you should enjoy your language. If you don’t, just try something else.

Further reading

- Performance Comparison of Ruby Frameworks, App Servers, Template Engines, and ORMs (2016)

- Performance Comparison of Ruby Frameworks: Sinatra, Padrino, Goliath, and Ruby on Rails