Kubernetes Cluster Ops: Options for Configuration Management

Managing multiple clusters is a challenge

Operators have struggled with Kubernetes in the past. Kubernetes v1.0, which appeared in 2015, was rudimentary. Yet the pioneers building on-premises virtualization (aka private cloud) and reaching out to a public cloud (especially AWS at the time) persisted, as Kubernetes was at least built around the then-revolutionary concept of orchestrating containers and managing clusters.

Today, Kubernetes is evolving into a more full-featured platform and is being deployed in more complex environments. The problem now is that as organizations scale the number of Kubernetes clusters they deploy, cluster management becomes more complicated.

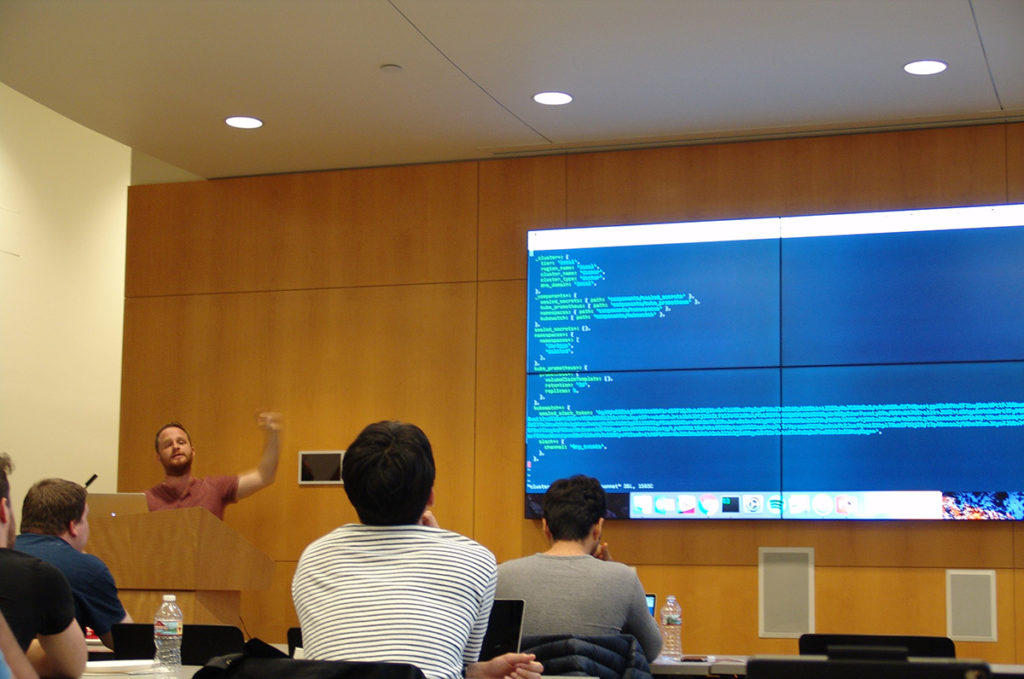

During a recent meetup in Seattle, Lee Briggs of Apptio outlined a journey of the company’s team, in which many options were tested to address the scalability-related issues, and how a homegrown solution ultimately played a key role.

Lee Briggs at the meetup in Seattle (Photo credit: Altoros)


Starting with the usual choices

Today’s scaling challenges are horizontal, accomplished by adding distributed servers throughout an enterprise’s on-prem infrastructure or through public-cloud availability zones. This is in contrast to the vertical scaling found in pre-cloud, mainframe-driven IT (and yes, despite all of the cloud migration over the past decade, legacy scaling is still common).

The Apptio team’s initial challenge was to remove “snowflakes,” i.e., unique servers that have their own specific configurations and were not originally designed to work in synchronized groups. Then, there’s the matter of establishing version control for the server environments and replicating them.

This is where thorns start to appear, as operations people don’t work with just infrastructure, but with the applications that run on it, as well.

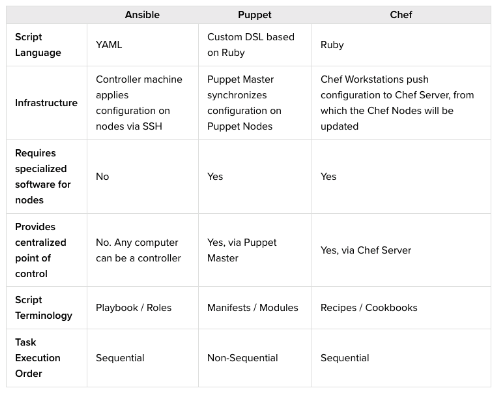

Lee took his audience through the challenges the Apptio team has faced over the past 2.5 years. He started with a comparison of the “big three” configuration management players: Ansible, Puppet, and Chef.

The “Big Three” configuration managers (Image credit)

Simply choosing one of these configuration managers and forging ahead proved to be problematic. First off, with the use of Kubernetes, configuration shifts up one level, Lee pointed out, from the individual server to Kubernetes itself. Kubernetes is API-driven, convergent, and idempotent (consistent), which is all good, until the number of clusters starts to increase.
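To make “convergent” and “idempotent” concrete, here is a minimal Python sketch of a declarative apply step. It is not how Kubernetes is implemented; the `converge` function and the component names are hypothetical and only illustrate the property that a second run with the same desired state changes nothing.

```python
def converge(desired: dict, actual: dict) -> list:
    """Move `actual` toward `desired` in place and return the actions taken.

    Hypothetical illustration of a convergent, idempotent apply step.
    """
    actions = []
    # Create anything that is missing and update anything that has drifted.
    for name, spec in desired.items():
        if name not in actual:
            actions.append(("create", name))
            actual[name] = dict(spec)
        elif actual[name] != spec:
            actions.append(("update", name))
            actual[name] = dict(spec)
    # Delete anything that is no longer declared.
    for name in list(actual):
        if name not in desired:
            actions.append(("delete", name))
            del actual[name]
    return actions


# Made-up state for a single cluster.
desired = {"ingress": {"replicas": 2}, "prometheus": {"replicas": 1}}
actual = {"ingress": {"replicas": 1}, "legacy-agent": {"replicas": 1}}

first = converge(desired, actual)   # performs the required changes
second = converge(desired, actual)  # nothing left to do
print(first)
print(second)
```

The second call returns an empty list because the state has already converged. That works well for one cluster; the difficulty described here is producing the right `desired` state for many clusters at once.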

In Apptio’s case, a large number of clusters also involved a large number of “components” to be handled. Lee’s team defines a component as “a thing that runs on Kubernetes that is needed for a cluster to be useful.” Examples of this in his world include an Ingress Controller, Cluster Autoscaler, Sealed Secrets, RBAC Config, and Prometheus.

So many aspects of these components vary slightly from cluster to cluster (for instance, SSL certificate paths, endpoint scraping for Prometheus, and namespaces) that another piece of software is needed to manage the differences.
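The shape of that problem can be sketched in a few lines of Python: one shared definition of a component plus a small set of per-cluster differences. Everything here is an assumption made for illustration (the cluster names, the parameter keys, and the `render_prometheus_config` helper); it is not Apptio’s actual configuration.

```python
import json

# Defaults shared by every cluster (hypothetical values).
BASE_PARAMS = {
    "namespace": "monitoring",
    "tls_cert_path": "/etc/ssl/certs/cluster.pem",
    "scrape_targets": ["kubelet"],
}

# Only the values that differ are recorded per cluster (hypothetical names).
CLUSTER_PARAMS = {
    "dev-us-west": {"scrape_targets": ["kubelet", "ingress"]},
    "prod-eu-west": {
        "namespace": "observability",
        "tls_cert_path": "/etc/ssl/certs/prod-eu.pem",
    },
}


def params_for(cluster: str) -> dict:
    """Overlay a cluster's overrides on top of the shared defaults."""
    return {**BASE_PARAMS, **CLUSTER_PARAMS.get(cluster, {})}


def render_prometheus_config(cluster: str) -> dict:
    """Render one component manifest for one cluster from its parameters."""
    p = params_for(cluster)
    return {
        "apiVersion": "v1",
        "kind": "ConfigMap",
        "metadata": {"name": "prometheus-config", "namespace": p["namespace"]},
        "data": {
            "tls_cert_path": p["tls_cert_path"],
            "scrape_targets": ",".join(p["scrape_targets"]),
        },
    }


manifests = {name: render_prometheus_config(name) for name in CLUSTER_PARAMS}
print(json.dumps(manifests["prod-eu-west"], indent=2))
```

With two clusters and one component, this is trivial. With many clusters and many components, keeping the overrides, the templates, and the rollout in sync is the job the team was looking for a tool to do.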

What about Helm?

Optimism returns at this point in the story, as the open-source Helm package manager sails to the rescue. Helm applies “charts” to package resources (including applications).

Helm began as an initiative within Kubernetes, and it has since found enough success to be spun out as a standalone project.

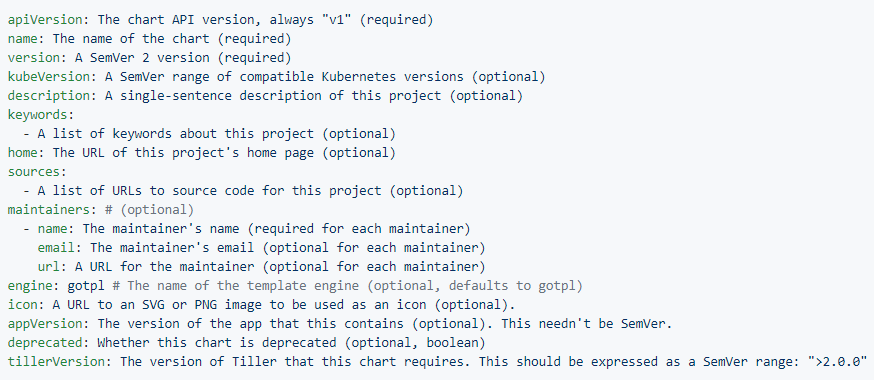

A Helm Chart YAML file (Image credit)

However, the Apptio team found out that by using Helm, they were “just moving the problem to another tool.” Furthermore, Helm is currently “a security nightmare,” according to Lee, as it is far too generous in granting access throughout what it manages.

The Helm community addressed this issue with its version 3, but the Apptio team encountered other problems, too.

“The YAML values were different among clusters, and the Golang templates found in Helm become a nightmare when you get sophisticated. Having to range through values with templates became a chore, and once you do have all the config, how do you apply it to multiple clusters?” —Lee Briggs, Apptio

What other options were used?

The objective was thus to leverage the available Helm charts through a module that could apply them across multiple clusters. The team also needed an easy way to produce JSON. They tried using Helm with Ansible and with Puppet, but couldn’t make things work. Ansible’s Helm module “is very broken,” Lee said, and the Helm module for Puppet needed to be run on every node, and “it just didn’t work.”

So, they moved to many other potential approaches, the first of which was Terraform.

Terraform’s developers describe it as “infrastructure as code,” and it can be thought of in the same group as Ansible, Puppet, and Chef. Terraform’s developer, HashiCorp, created its own HashiCorp Configuration Language, which the Apptio team found makes it easy to write JSON scripts. Terraform also has Kubernetes and Helm providers.

Lee Briggs talking about Kubernetes configuration options (Photo credit: Altoros)

But, according to Lee, Terraform seemed “extremely buggy,” and the provider communities are not active in addressing such issues. Additionally, Kubernetes is updated frequently, and each time that happened, the Apptio team had to manually bring all the Terraform code up to date.

Along the way, the team discovered Jsonnet, a data templating language that generates config data and extends JSON. It was written by Google specifically to interact with and template JSON, and it has Golang wrappers, so it is easy to embed in Go apps. It met a critical need, but also called for a framework.

So, the team tried ksonnet, a framework designed to streamline writing to Kubernetes clusters, but found it to be “dramatically over-complicated.” They moved on to kapitan, a templated config management tool for Kubernetes (and Terraform, by the way), but couldn’t find success with that tool, either.

Positive results with a home-brewed tool

Finally, the team decided to take a DIY approach. The result is a solution called kr8 (pronounced “create”). The tool automatically populates Jsonnet external variables, automatically concatenates Jsonnet files, and has the ability to patch Helm charts.

“With kr8, we are attempting to leverage the power of Jsonnet, and render it and other things into usable manifests for each cluster. The tool uses Jsonnet to render YAML, then patches the JSON on top of that.” —Lee Briggs, Apptio
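The “render, then patch” idea can be illustrated with a short Python sketch. This is not kr8’s code or its actual patching mechanism, which the talk does not detail; it swaps in JSON merge patch semantics (RFC 7386) as a stand-in, and the manifest, labels, and cluster name are hypothetical.

```python
import copy


def merge_patch(target, patch):
    """Apply a JSON merge patch (RFC 7386 semantics) and return a new value."""
    # A non-object patch replaces the target outright.
    if not isinstance(patch, dict):
        return copy.deepcopy(patch)
    # Work on a copy so the rendered input is never mutated.
    result = copy.deepcopy(target) if isinstance(target, dict) else {}
    for key, value in patch.items():
        if value is None:
            # A null value in the patch removes the key.
            result.pop(key, None)
        else:
            result[key] = merge_patch(result.get(key), value)
    return result


# A manifest as it might come out of a chart render (made-up content).
rendered = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "ingress-controller", "labels": {"chart": "ingress-1.0"}},
    "spec": {"replicas": 1},
}

# Cluster-specific changes layered on top of the rendered output.
cluster_patch = {
    "metadata": {"labels": {"cluster": "prod-eu-west", "chart": None}},
    "spec": {"replicas": 3},
}

patched = merge_patch(rendered, cluster_patch)
print(patched)
```

Keeping the patch as plain data, separate from the chart, means the upstream chart can stay untouched while each cluster carries only its own small set of differences.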

As might be inferred from the scope of this article, kr8 is for operations only. “We don’t want devs to use this process,” Lee said. “This is more for system administrators to build a cluster structure for the platform. Application performance in itself is a completely different topic, and we have other systems for that.”

kr8 is written in Golang, and Apptio plans to open-source it by the end of 2018, pending its internal legal review. The team plans on using the tool to track clusters into new regions, and get their latest states to copy over.

Want details? Watch the video!


Related slides

Further reading

- Managing Multi-Cluster Workloads with Kubernetes

- Evaluating PCS for Kubernetes Clusters

- A Multitude of Kubernetes Deployment Tools: Kubespray, kops, and kubeadm

About the expert