The Dos and Don’ts of Data Integration

Making an asset of your database

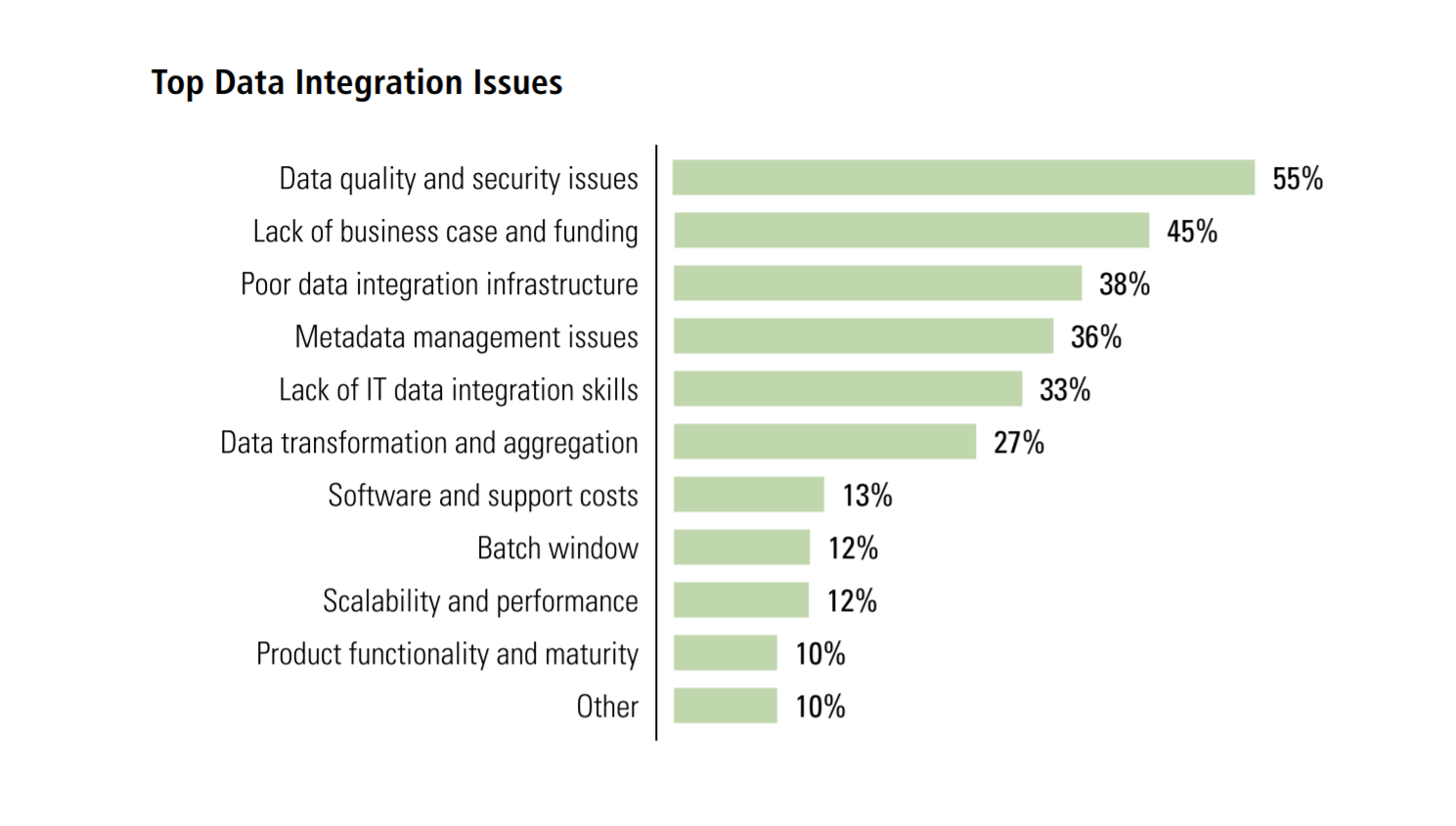

Extracting and normalizing customer data from multiple sources is the biggest challenge in client data management (54%), according to a report by the Aberdeen Group. No surprise there: many companies consider linking and migrating customer information between databases, files, and applications a sticky, if not risky, process. Gartner says corporate developers spend approximately 65% of their effort building bridges between applications. That much! No wonder they risk losing lots of data, not to mention the time and effort all this involves.

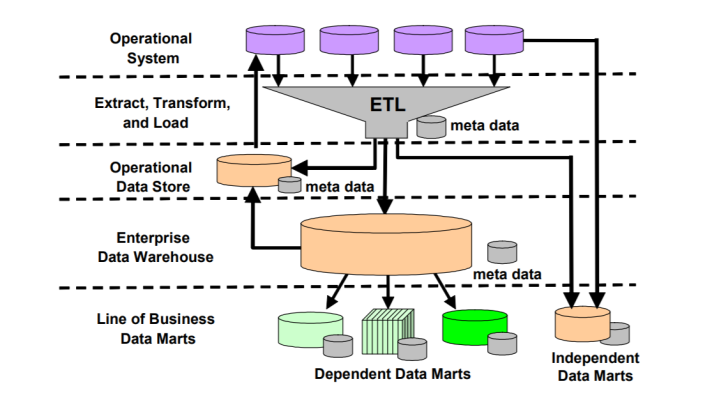

Commonly, a database is an integral part of a data warehouse or other data integration architecture. Failing to properly manage the database may lead to a failure of the whole system.

So, when I came across a piece by Robert L. Weiner on database management, I found it highly relevant. To brush up on your database management strategy, check whether you are making any of the typical mistakes listed below.

- No defined data entry policies and procedures, so no one knows or applies them.

- Using Excel as if it were a database. (JUST a spreadsheet, okay?)

- Having no one in charge of data entry/data quality training.

- Forgetting all about backups, or running them way too seldom.

- Allowing staff to copy sensitive information onto portable devices and take it home.

- Insufficient password security measures.

- No error management strategy, and careless data handling by staff.

- Leaving your user access rights and security options in a mess.

Not about you? Then congratulations, your database management efforts don’t need a major fix.
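Several of these mistakes (no data entry policy, no error management) come down to rules that live only in people's heads. As a minimal sketch, assuming customer records arrive as Python dicts with hypothetical `customer_id`, `name`, and `email` fields, an entry policy can be written down as explicit checks, and rejected rows can be reported with their reasons instead of being silently dropped:

```python
import re
from dataclasses import dataclass, field

# Hypothetical entry rule: a loose email shape check, not full RFC validation.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


@dataclass
class ValidationResult:
    accepted: list = field(default_factory=list)
    rejected: list = field(default_factory=list)  # (record, [error messages])


def check_record(record: dict) -> list:
    """Return the list of policy violations for one customer record."""
    errors = []
    if not record.get("customer_id"):
        errors.append("customer_id is required")
    if not (record.get("name") or "").strip():
        errors.append("name is required")
    email = (record.get("email") or "").strip()
    # Email is optional under this hypothetical policy, but must be well formed if present.
    if email and not EMAIL_RE.match(email):
        errors.append(f"invalid email: {email!r}")
    return errors


def validate_batch(records) -> ValidationResult:
    """Split a batch into accepted and rejected records, keeping the reasons."""
    result = ValidationResult()
    for rec in records:
        errors = check_record(rec)
        if errors:
            result.rejected.append((rec, errors))
        else:
            result.accepted.append(rec)
    return result


batch = [
    {"customer_id": "C1", "name": "Ada Lovelace", "email": "ada@example.com"},
    {"customer_id": "", "name": "Bob", "email": "bob-at-example"},
]
res = validate_batch(batch)
print(len(res.accepted), len(res.rejected))  # prints: 1 1
```

Keeping the rejected rows together with their error messages gives whoever owns data quality something concrete to review and train staff on.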

Information life cycle management

Long story short, the information itself is the most valuable asset in your data warehouse.

There’s another insightful article by Mike Karp on information life cycle management (ILM) and the six steps of implementing a successful and efficient policy for data storage, verification, classification, and management. Mike identifies the following steps for making your ILM efficient:

Stage 1. Preliminary

1) Determine whether your company’s data is subject to regulatory requirements.

2) Determine whether your company uses its storage in an optimal manner.

Stage 2. Identifying file type, users accessing the data, and keywords used.

1) Make a list of regulatory requirements that may apply. Get this from your legal department or compliance office.

2) Define stakeholder needs. You must understand what users need and what they consider to be nonnegotiable.

3) Verify the data life cycles. Check how the data’s value changes over each life cycle with at least two other sources: a second source within the department that owns the data (if that is politically impossible, raise the issue through management) and someone familiar with the potential legal issues.

4) Define success criteria and get them widely accepted.

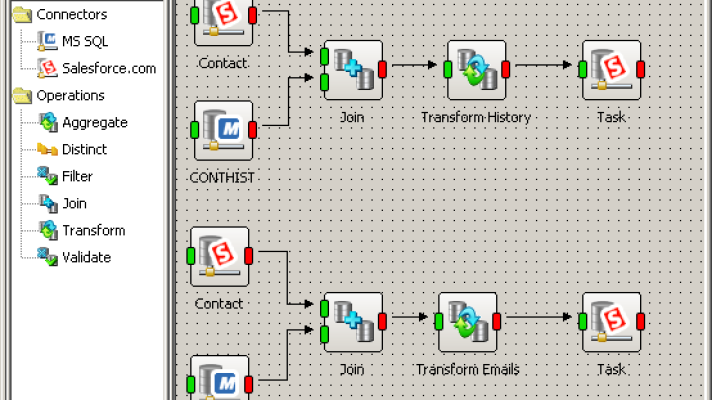

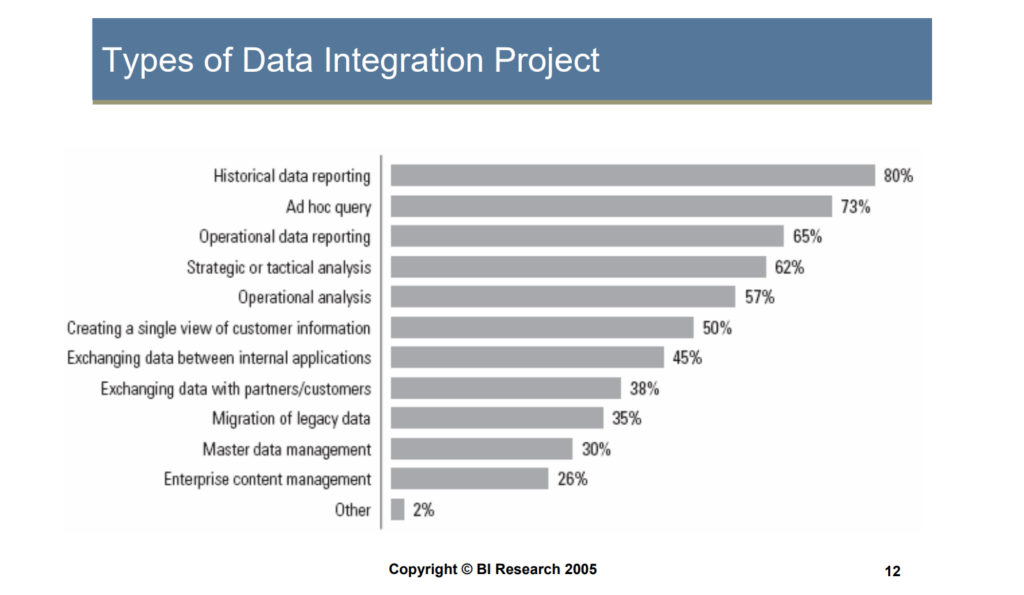

The most popular scenarios for data integration (image credit)

Stage 3. Classification (aligning your stakeholders’ business requirements to the IT infrastructure).

1) Identify the business value of each type of data object, i.e., understand three things: what kind of data you are dealing with, who will be using it, and what its keywords are.

2) Create classification rules.

3) Build retention policies.
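The classification and retention steps above can be sketched as plain rules. This is a minimal illustration, not Karp's method: the `DataObject` fields mirror the three things to understand (data type, who owns or uses it, keywords), while the class labels, rule order, and retention periods are hypothetical placeholders that your legal or compliance office would actually supply:

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class DataObject:
    name: str
    file_type: str        # e.g. "pdf", "csv"
    owner: str            # department that owns the data
    keywords: frozenset   # keywords found in or attached to the object


# Hypothetical classification rules, evaluated in order; the first match wins.
CLASSIFICATION_RULES = [
    ("regulated-financial", lambda o: "invoice" in o.keywords or o.owner == "finance"),
    ("customer-pii", lambda o: not o.keywords.isdisjoint({"ssn", "email", "address"})),
    ("general", lambda o: True),  # catch-all so every object gets a class
]

# Hypothetical retention policy per class, in days (placeholders, not legal advice).
RETENTION_DAYS = {
    "regulated-financial": 7 * 365,
    "customer-pii": 3 * 365,
    "general": 365,
}


def classify(obj: DataObject) -> str:
    for label, predicate in CLASSIFICATION_RULES:
        if predicate(obj):
            return label
    raise ValueError(f"no classification rule matched {obj.name}")


def retention_deadline(obj: DataObject, created: date) -> date:
    """Date after which the object may be disposed of under its class's policy."""
    return created + timedelta(days=RETENTION_DAYS[classify(obj)])


report = DataObject("q3_contacts.csv", "csv", "sales", frozenset({"email", "region"}))
print(classify(report))                               # prints: customer-pii
print(retention_deadline(report, date(2024, 1, 1)))   # prints: 2026-12-31
```

Ordering the rules from most to least restrictive means an object that matches several classes ends up under the strictest one.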

When you engage with the vendors, make sure to understand their products’ capabilities in each of the following areas:

- Ability to tag files as compliant for each required regulation.

- Data classification.

- Data deduplication.

- Disaster recovery and business continuity.

- Discovery of compliance-answerable files across Windows, Linux, UNIX, and any other operating systems you may have.

- Fully automated file migration based on locally set migration policies.

- Integration with backup, recovery, and archiving solutions already on-site.

- Searching (both tag-based and other metadata-based).

- Security (access control, identity management, and encryption).

- Security (antivirus).

- Setting policies to move files to appropriate storage devices (content-addressed storage, WORM tape).

- Finding and tagging outdated, unused and unwanted files for demotion to a lower storage tier.

- Tracking access to and lineage of objects through their life cycle.
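Data deduplication is one of the capabilities on this list that is easy to nod along to without knowing what it involves. A minimal, file-level sketch groups files by a SHA-256 hash of their contents, bucketing by file size first so that only same-size files get hashed. Commercial products often deduplicate at the block or chunk level instead, so treat this only as an illustration of the idea:

```python
import hashlib
from collections import defaultdict
from pathlib import Path


def file_digest(path: Path, chunk_size: int = 1 << 20) -> str:
    """SHA-256 of a file's contents, read in chunks to keep memory use flat."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def find_duplicates(root: Path) -> dict:
    """Map content digest -> sorted list of files under root sharing that content (2+ files only)."""
    by_size = defaultdict(list)
    for p in root.rglob("*"):
        if p.is_file():
            by_size[p.stat().st_size].append(p)

    groups = defaultdict(list)
    for paths in by_size.values():
        if len(paths) < 2:
            continue  # a file with a unique size cannot have an exact duplicate
        for p in paths:
            groups[file_digest(p)].append(p)

    return {digest: sorted(ps) for digest, ps in groups.items() if len(ps) > 1}
```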

Finally, once you know your vendor, you can look for solutions that automate the needed processes, and phase them in.
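Automating a capability such as policy-based file migration, or demoting unused files to a lower storage tier, boils down to evaluating a policy against a file inventory. A minimal dry-run sketch, assuming a hypothetical inventory of `FileRecord` entries, hypothetical tier names (`fast`, `capacity`, `archive`), and placeholder idle thresholds; it only plans the moves and leaves the actual migration to your storage tooling:

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class FileRecord:
    path: str
    tier: str              # hypothetical tier names: "fast", "capacity", "archive"
    last_access: datetime


# Hypothetical migration policy: (current tier, idle threshold, target tier).
MIGRATION_POLICY = [
    ("fast", timedelta(days=30), "capacity"),
    ("capacity", timedelta(days=180), "archive"),
]


def plan_migrations(records, now: datetime):
    """Return (path, from_tier, to_tier) for files idle longer than their tier's threshold."""
    moves = []
    for rec in records:
        idle = now - rec.last_access
        for tier, threshold, target in MIGRATION_POLICY:
            if rec.tier == tier and idle > threshold:
                moves.append((rec.path, tier, target))
                break  # at most one demotion step per run
    return moves


now = datetime(2024, 6, 1)
inventory = [
    FileRecord("reports/q2.xlsx", "fast", datetime(2024, 5, 20)),
    FileRecord("exports/old.csv", "fast", datetime(2024, 4, 1)),
    FileRecord("archive/2022.zip", "capacity", datetime(2023, 1, 1)),
]
for move in plan_migrations(inventory, now):
    print(move)
```

Running the policy as a plan first, and reviewing its output before anything moves, is one way to phase automation in gradually.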

Tools and services

Some more tips apply to the components of the integration environment deployed between the sources, such as ETL or EAI tools.
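To make "ETL" concrete: such a component extracts records from each source, transforms them into one normalized shape, and loads them into a target store. A toy sketch using only the Python standard library, where the CSV and JSON source formats, the field names, and the use of a normalized email address as the match key are all assumptions for illustration:

```python
import csv
import io
import json
import sqlite3


def extract_crm_csv(text: str) -> list:
    """Extract: rows from a CSV export (hypothetical CRM source)."""
    return list(csv.DictReader(io.StringIO(text)))


def extract_billing_json(text: str) -> list:
    """Extract: records from a JSON export (hypothetical billing source)."""
    return json.loads(text)


def normalize(record: dict, source: str) -> dict:
    """Transform: trim and lowercase emails, collapse whitespace in names."""
    return {
        "email": record["email"].strip().lower(),
        "name": " ".join(record["name"].split()),
        "source": source,
    }


def load(conn: sqlite3.Connection, rows: list) -> None:
    """Load: upsert normalized rows keyed by email."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers (email TEXT PRIMARY KEY, name TEXT, source TEXT)"
    )
    conn.executemany(
        "INSERT OR REPLACE INTO customers (email, name, source) VALUES (:email, :name, :source)",
        rows,
    )
    conn.commit()


crm = "email,name\nAda@Example.com , Ada  Lovelace\n"
billing = '[{"email": "Grace@example.com", "name": "Grace Hopper"}]'
rows = [normalize(r, "crm") for r in extract_crm_csv(crm)]
rows += [normalize(r, "billing") for r in extract_billing_json(billing)]

conn = sqlite3.connect(":memory:")
load(conn, rows)
rows_in_db = conn.execute("SELECT email, name FROM customers ORDER BY email").fetchall()
print(rows_in_db)
```

`INSERT OR REPLACE` lets whichever source is loaded last win when two records share an email; real pipelines usually need an explicit rule for deciding which record survives.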

Don’t let expenses frighten you.

In today’s enterprises, most data integration projects never get built. Why? Because most companies consider the return on investment (ROI) on small projects simply too low to justify bringing in expensive middleware. Yeah, so you have your customer data in two sources and want to integrate (or synchronize) them. But then you think, “Hey, it costs too much, I might as well leave everything as it is. It worked up till now, it’ll work just as well in the future.” Then, after a while, you find yourself lost between the systems and the data they contain, trying to figure out which information is more up to date and accurate. Guess what? You’re losing again.
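Even without middleware, the "which copy is more up to date?" question can be answered mechanically if both sources keep a last-modified timestamp. A minimal last-write-wins sketch, assuming hypothetical `customer_id` and `updated_at` fields and clocks that are comparable across both systems:

```python
from datetime import datetime


def merge_latest(source_a, source_b, key="customer_id", stamp="updated_at"):
    """Merge two lists of customer dicts, keeping the newest record per key.

    On a timestamp tie, the record from source_a is kept (it is seen first).
    """
    merged = {}
    for rec in list(source_a) + list(source_b):
        current = merged.get(rec[key])
        if current is None or rec[stamp] > current[stamp]:
            merged[rec[key]] = rec
    return merged


crm = [
    {"customer_id": 1, "phone": "555-0100", "updated_at": datetime(2024, 3, 1)},
    {"customer_id": 2, "phone": "555-0200", "updated_at": datetime(2024, 1, 1)},
]
billing = [
    {"customer_id": 1, "phone": "555-0111", "updated_at": datetime(2024, 2, 1)},
    {"customer_id": 2, "phone": "555-0222", "updated_at": datetime(2024, 5, 1)},
    {"customer_id": 3, "phone": "555-0300", "updated_at": datetime(2024, 4, 1)},
]
merged = merge_latest(crm, billing)
for cid, rec in sorted(merged.items()):
    print(cid, rec["phone"])
```

Last-write-wins is the simplest possible rule: it replaces whole records, so a newer edit to one field in one system can discard an older but still valid edit to a different field in the other.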

Do consider open-source software if ROI is an issue.

With open-source data integration tools, you can have your cake and eat it, too. Open source can offer cost-effective visual data integration solutions to companies that previously found proprietary data integration, ETL, and EAI tools expensive and complicated. Not having to pay license fees for BI and data integration software should bring companies that were previously put off by insufficient ROI back to the data integration market.

With these recommendations at hand, you can build a more efficient data integration strategy.
