How to Deploy Cloud Foundry v2 to AWS by Using Vagrant

Recently, we published an article on the Cloud Foundry blog explaining how to install Cloud Foundry with Vagrant. Although BOSH is the officially recommended way to set up the system, the approach described in the article is easier and faster. This blog post, found on the ActiveState blog, adds some more details on the subject. Don’t skip the comments from our Argentinian team, in which we suggest ways to automate some of the installation tasks.

Read the full article “How to Deploy Cloud Foundry v2 to AWS via Vagrant” to learn the details.

In this post, I’m going to quickly run through how I got up and running with Cloud Foundry v2. These notes are based on instructions from a colleague who is in the process of giving Cloud Foundry v2’s tires a good kicking.

The easiest way to deploy Cloud Foundry version 2 (a.k.a. “ng,” or “next generation”) seems to be via Vagrant. The official way is via BOSH, but we have created a method that makes it much easier to spin up a single instance of Cloud Foundry v2 on Amazon EC2. With BOSH, we needed 14 instances to get up and running, and it took much longer.

Install the installer

You start by git-cloning the cf-vagrant-installer repository from GitHub.

```bash
$ git clone https://github.com/Altoros/cf-vagrant-installer
$ cd cf-vagrant-installer
$ cat README.md
```

As you will see in the README.md, there are a few dependencies, the first of which is Vagrant itself.

Install Vagrant

If you do not have Vagrant installed, you can install it from http://downloads.vagrantup.com/. I installed the .dmg for my Mac, which was pretty straightforward.

Install Vagrant plug-ins

The required Vagrant plug-ins (unless they have changed since) were:

- vagrant-berkshelf, which adds Berkshelf integration to the Chef provisioners
- vagrant-omnibus, which ensures the desired version of Chef is installed via the platform-specific Omnibus packages
- vagrant-aws, which adds an AWS provider to Vagrant, allowing it to control and provision machines in EC2

Installation of these plug-ins could not be simpler:

```bash
$ vagrant plugin install vagrant-berkshelf
$ vagrant plugin install vagrant-omnibus
$ vagrant plugin install vagrant-aws
```
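If you share the Vagrantfile with others, it can help to fail early when one of these plug-ins is missing. The sketch below is our own addition, not part of the installer: REQUIRED_PLUGINS and missing_plugins are hypothetical names, and Vagrant.has_plugin? is only available in newer Vagrant releases. The check is passed in as a block so the helper can be exercised outside Vagrant.

```ruby
# Hypothetical guard for the top of a Vagrantfile: the plug-ins this
# walkthrough relies on.
REQUIRED_PLUGINS = %w[vagrant-berkshelf vagrant-omnibus vagrant-aws].freeze

# Returns the names from `required` for which the given block returns false.
def missing_plugins(required = REQUIRED_PLUGINS)
  required.reject { |name| yield(name) }
end

# Possible use inside a Vagrantfile (assumes Vagrant.has_plugin? exists
# in your Vagrant version):
#   missing = missing_plugins { |name| Vagrant.has_plugin?(name) }
#   abort "Missing Vagrant plug-ins: #{missing.join(', ')}" unless missing.empty?
```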

Run the bootstrap

Next, make sure you are in the cf-vagrant-installer directory (which we cloned above) and run the following rake task to download all the Cloud Foundry components:

```bash
$ rake host:bootstrap
```

The output of this rake command will look something like this:

```
(in /Users/phil/src/cfv2/cf-vagrant-installer)
==> Init Git submodules
Submodule 'cloud_controller_ng' (https://github.com/cloudfoundry/cloud_controller_ng.git) registered for path 'cloud_controller_ng'
Submodule 'dea_ng' (https://github.com/cloudfoundry-attic/dea_ng.git) registered for path 'dea_ng'
Submodule 'gorouter' (https://github.com/cloudfoundry/gorouter.git) registered for path 'gorouter'
Submodule 'health_manager' (https://github.com/cloudfoundry/health_manager.git) registered for path 'health_manager'
Submodule 'uaa' (https://github.com/cloudfoundry/uaa.git) registered for path 'uaa'
Submodule 'warden' (git://github.com/cloudfoundry/warden.git) registered for path 'warden'
Cloning into 'cloud_controller_ng'...
remote: Counting objects: 13057, done.
remote: Compressing objects: 100% (7357/7357), done.
remote: Total 13057 (delta 7851), reused 10513 (delta 5512)
Receiving objects: 100% (13057/13057), 4.07 MiB | 1.34 MiB/s, done.
Resolving deltas: 100% (7851/7851), done.
Submodule path 'cloud_controller_ng': checked out '4b9208900c54181d539c9cc93519277d7c2702b5'
Submodule 'vendor/errors' (https://github.com/cloudfoundry/errors.git) registered for path 'vendor/errors'
Cloning into 'vendor/errors'...
remote: Counting objects: 58, done.
remote: Compressing objects: 100% (45/45), done.
... (truncated) ...
```

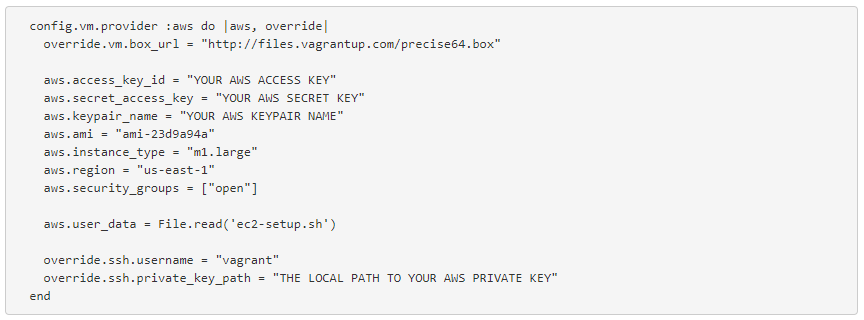

Set up AWS credentials

Next, you will need to edit the Vagrantfile:

```bash
$ vim Vagrantfile
```

Add the following section directly above the config.vm.provider :vmware_fusion line:

```ruby
config.vm.provider :aws do |aws, override|
  override.vm.box_url = "http://files.vagrantup.com/precise64.box"

  # AWS credentials and the EC2 key pair used for SSH access
  aws.access_key_id     = "YOUR AWS ACCESS KEY"
  aws.secret_access_key = "YOUR AWS SECRET KEY"
  aws.keypair_name      = "YOUR AWS KEYPAIR NAME"

  aws.ami             = "ami-23d9a94a"
  aws.instance_type   = "m1.large"
  aws.region          = "us-east-1"
  aws.security_groups = ["open"]                 # security group with all ports open
  aws.user_data       = File.read('ec2-setup.sh') # boot script that renames ubuntu to vagrant

  override.ssh.username         = "vagrant"
  override.ssh.private_key_path = "THE LOCAL PATH TO YOUR AWS PRIVATE KEY"
end
```

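Then replace "YOUR AWS ACCESS KEY", "YOUR AWS SECRET KEY", and "YOUR AWS KEYPAIR NAME" with your own AWS credentials, and set "THE LOCAL PATH TO YOUR AWS PRIVATE KEY" to the path of the private key file that matches that key pair.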
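Hard-coding keys in the Vagrantfile makes it easy to commit them by accident. One alternative, sketched below under our own assumptions, is to read them from environment variables when Vagrant loads the file. The helper name required_env is hypothetical; AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY are the conventional AWS variable names, while AWS_KEYPAIR_NAME and AWS_PRIVATE_KEY_PATH are made up for this example.

```ruby
# Hypothetical Vagrantfile helper: fetch a required setting from the
# environment and fail with a clear message if it is missing or blank.
def required_env(name, env = ENV)
  value = env[name]
  if value.nil? || value.strip.empty?
    raise ArgumentError, "environment variable #{name} must be set"
  end
  value
end

# Possible use inside the :aws provider block:
#   aws.access_key_id             = required_env("AWS_ACCESS_KEY_ID")
#   aws.secret_access_key         = required_env("AWS_SECRET_ACCESS_KEY")
#   aws.keypair_name              = required_env("AWS_KEYPAIR_NAME")     # made-up name
#   override.ssh.private_key_path = required_env("AWS_PRIVATE_KEY_PATH") # made-up name
```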

An open security group

The AWS security group used in the example above is called “open”; it is simply a group with all ports open. If you do not already have one, create it through the AWS console.

Create an EC2 set-up script

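Next, create an ec2-setup.sh file directly in the cf-vagrant-installer directory. The Vagrantfile passes it to the instance as user data (aws.user_data), and it renames the default ubuntu user to vagrant, the SSH user the Vagrantfile expects. It should look exactly like the following: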

```bash
#!/bin/bash -ex
usermod -l vagrant ubuntu
groupmod -n vagrant ubuntu
usermod -d /home/vagrant -m vagrant
mv /etc/sudoers.d/90-cloudimg-ubuntu /etc/sudoers.d/90-cloudimg-vagrant
perl -pi -e "s/ubuntu/vagrant/g;" /etc/sudoers.d/90-cloudimg-vagrant
```

Build the EC2 instance running CFv2

Finally, run "vagrant up --provider=aws" and your instance will be built:

```bash
$ vagrant up --provider=aws
```

My (truncated) output looked something like this:

```
Bringing machine 'cf-install' up with 'aws' provider...
[Berkshelf] Updating Vagrant's berkshelf: '/Users/phil/.berkshelf/cf-install/vagrant/berkshelf-20130717-81754-5vjx63-cf-install'
[Berkshelf] Using apt (1.10.0)
[Berkshelf] Using git (2.5.2)
[Berkshelf] Using sqlite (1.0.0)
[Berkshelf] Using mysql (3.0.2)
[Berkshelf] Using postgresql (3.0.2)
[Berkshelf] Using chef-golang (1.0.1)
[Berkshelf] Using java (1.12.0)
[Berkshelf] Using ruby_build (0.8.0)
[Berkshelf] Installing rbenv (0.7.3) from git: 'git://github.com/fnichol/chef-rbenv.git' with branch: 'master' at ref: 'e10f98d5fd07bdb8d212ebf42160b65c39036b90'
[Berkshelf] Using rbenv-alias (0.0.0) at './chef/rbenv-alias'
[Berkshelf] Using rbenv-sudo (0.0.1) at './chef/rbenv-sudo'
[Berkshelf] Using cloudfoundry (0.0.0) at './chef/cloudfoundry'
[Berkshelf] Using dmg (1.1.0)
[Berkshelf] Using build-essential (1.4.0)
[Berkshelf] Using yum (2.3.0)
[Berkshelf] Using windows (1.10.0)
[Berkshelf] Using chef_handler (1.1.4)
[Berkshelf] Using runit (1.1.6)
[Berkshelf] Using openssl (1.0.2)
[cf-install] Warning! The AWS provider doesn't support any of the Vagrant high-level network configurations (`config.vm.network`). They will be silently ignored.
[cf-install] Launching an instance with the following settings...
[cf-install]  -- Type: m1.large
[cf-install]  -- AMI: ami-23d9a94a
[cf-install]  -- Region: us-east-1
[cf-install]  -- Security Groups: ["open"]
[cf-install] Waiting for instance to become "ready"...
[cf-install] Waiting for SSH to become available...
[cf-install] Machine is booted and ready for use!
[cf-install] Rsyncing folder: /Users/phil/src/cfv2/cf-vagrant-installer/ => /vagrant
[cf-install] Rsyncing folder: /Users/phil/.berkshelf/cf-install/vagrant/berkshelf-20130717-81754-5vjx63-cf-install/ => /tmp/vagrant-chef-1/chef-solo-1/cookbooks
[cf-install] Installing Chef 11.4.0 Omnibus package...
[cf-install] Running provisioner: chef_solo...
Generating chef JSON and uploading...
Running chef-solo...
stdin: is not a tty
[2013-07-17T19:43:22+00:00] INFO: *** Chef 11.4.0 ***
[2013-07-17T19:43:23+00:00] INFO: Setting the run_list to ["recipe[cloudfoundry::vagrant-provision-start]", "recipe[apt::default]", "recipe[git]", "recipe[chef-golang]", "recipe[ruby_build]", "recipe[rbenv::user]", "recipe[java::openjdk]", "recipe[sqlite]", "recipe[mysql::server]", "recipe[postgresql::server]", "recipe[rbenv-alias]", "recipe[rbenv-sudo]", "recipe[cloudfoundry::warden]", "recipe[cloudfoundry::dea]", "recipe[cloudfoundry::uaa]", "recipe[cloudfoundry::cf_bootstrap]", "recipe[cloudfoundry::vagrant-provision-end]"] from JSON
[2013-07-17T19:43:23+00:00] INFO: Run List is [recipe[cloudfoundry::vagrant-provision-start], recipe[apt::default], recipe[git], recipe[chef-golang], recipe[ruby_build], recipe[rbenv::user], recipe[java::openjdk], recipe[sqlite], recipe[mysql::server], recipe[postgresql::server], recipe[rbenv-alias], recipe[rbenv-sudo], recipe[cloudfoundry::warden], recipe[cloudfoundry::dea], recipe[cloudfoundry::uaa], recipe[cloudfoundry::cf_bootstrap], recipe[cloudfoundry::vagrant-provision-end]]
[2013-07-17T19:43:23+00:00] INFO: Run List expands to [cloudfoundry::vagrant-provision-start, apt::default, git, chef-golang, ruby_build, rbenv::user, java::openjdk, sqlite, mysql::server, postgresql::server, rbenv-alias, rbenv-sudo, cloudfoundry::warden, cloudfoundry::dea, cloudfoundry::uaa, cloudfoundry::cf_bootstrap, cloudfoundry::vagrant-provision-end]
[2013-07-17T19:43:23+00:00] INFO: Starting Chef Run for ip-10-77-71-207.ec2.internal
[2013-07-17T19:43:23+00:00] INFO: Running start handlers
[2013-07-17T19:43:23+00:00] INFO: Start handlers complete.
[2013-07-17T19:43:24+00:00] INFO: AptPreference light-weight provider already initialized -- overriding!
... (truncated) ...
[2013-07-17T19:58:50+00:00] INFO: Processing package[zip] action install (cloudfoundry::dea line 9)
[2013-07-17T19:58:55+00:00] INFO: Processing package[unzip] action install (cloudfoundry::dea line 13)
[2013-07-17T19:58:55+00:00] INFO: Processing package[maven] action install (cloudfoundry::uaa line 1)
[2013-07-17T19:59:38+00:00] INFO: Processing execute[run rake cf:bootstrap] action run (cloudfoundry::cf_bootstrap line 3)
[2013-07-17T20:05:35+00:00] INFO: execute[run rake cf:bootstrap] ran successfully
[2013-07-17T20:05:35+00:00] INFO: Processing bash[emit provision complete] action run (cloudfoundry::vagrant-provision-end line 2)
[2013-07-17T20:05:35+00:00] INFO: bash[emit provision complete] ran successfully
[2013-07-17T20:05:35+00:00] INFO: Chef Run complete in 1332.027903781 seconds
[2013-07-17T20:05:35+00:00] INFO: Running report handlers
[2013-07-17T20:05:35+00:00] INFO: Report handlers complete
```

We can now log into our new EC2 instance, which is running Cloud Foundry v2:

```bash
$ vagrant ssh
```

Note: unless stated otherwise, all commands that follow are intended to be run on the EC2 instance.

Push an app

First, we must initialize the Cloud Foundry v2 command-line interface with the following command:

```bash
$ cd /vagrant
$ rake cf:init_cf_cli
```

Here is the output of that command:

```
==> Initializing cf CLI
Setting target to http://127.0.0.1:8181... OK
target: http://127.0.0.1:8181

Authenticating... OK
There are no spaces. You may want to create one with create-space.
Creating organization myorg... OK
Switching to organization myorg... OK
There are no spaces. You may want to create one with create-space.
Creating space myspace... OK
Adding you as a manager... OK
Adding you as a developer... OK
Space created! Use `cf switch-space myspace` to target it.
Switching to space myspace... OK

Target Information (where will apps be pushed):
  CF instance: http://127.0.0.1:8181 (API version: 2)
  user: admin
  target app space: myspace (org: myorg)
```

Now you can deploy one of the test apps. We will use a Node.js “Hello World” app:

```bash
$ cd test-apps/hello-node
$ cf push
```

We see the output:

```
Warning: url is not a valid manifest attribute. Please remove this attribute from your manifest to get rid of this warning
Using manifest file manifest.yml

Creating hello-node... OK

1: hello-node
2: none
Subdomain> hello-node

1: vcap.me
2: none
Domain> 1

Creating route hello-node.vcap.me... OK
Binding hello-node.vcap.me to hello-node... OK
Uploading hello-node... OK
Preparing to start hello-node... OK
Checking status of app 'hello-node'...........................
  0 of 1 instances running (1 starting)
  0 of 1 instances running (1 starting)
  1 of 1 instances running (1 running)
Push successful! App 'hello-node' available at http://hello-node.vcap.me
```

Cloud Foundry v2 is running on localhost on our EC2 instance (the vcap.me domain resolves to 127.0.0.1), so the app is not reachable from a web browser on our own machine. We can, however, check that the app exists by running curl on the EC2 instance itself:

```bash
$ curl http://hello-node.vcap.me/
```

curl outputs:

```
Hello from Cloud Foundry
```

Delete the app

To delete the app, you can use:

```bash
$ cf delete
```

The output is:

```
Warning: url is not a valid manifest attribute. Please remove this attribute from your manifest to get rid of this warning
Using manifest file manifest.yml

Really delete hello-node?> y

Deleting hello-node... OK
```

Inside out

From the notes I was given:

Now, to expose apps externally, it gets trickier. First, you’ll need to provision an elastic IP in the AWS console and attach it to the EC2 instance that’s running the cf v2 install. Then, you’ll need to set up a wildcard DNS record to point to that IP (*.domain and domain should point to that IP). xip.io might work here, but I’m not familiar enough with it to know for sure.

xip.io is actually perfect for this. All I need is my external IP, which was 50.19.50.63. Appending ".xip.io" gives me "50.19.50.63.xip.io", as well as the wildcard "*.50.19.50.63.xip.io", for the Cloud Foundry API and any apps I deploy. This is a zero-configuration service: the IP you want to resolve to is embedded in the hostname you create, and the DNS service simply returns that IP. This means you can have a valid, globally resolvable DNS hostname instantly.

I can also get a shorter hostname: the DNS record for this hostname is actually just a CNAME, which I can check with host:

```bash
$ host 50.19.50.63.xip.io
```

This outputs:

```
50.19.50.63.xip.io is an alias for hj8raq.xip.io.
hj8raq.xip.io has address 50.19.50.63
Host hj8raq.xip.io not found: 3(NXDOMAIN)
Host hj8raq.xip.io not found: 3(NXDOMAIN)
```

So, I can use hj8raq.xip.io instead, since it is shorter and I just want to use it temporarily.
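If you want to script this step, the short name can be pulled out of the host output. The sketch below assumes the exact "is an alias for" wording shown above, which may differ between versions of host; cname_target is a hypothetical name.

```ruby
# Hypothetical helper: extract the CNAME target from `host` output such as
# "50.19.50.63.xip.io is an alias for hj8raq.xip.io."
# Returns nil when no alias line is present.
def cname_target(host_output)
  match = host_output.match(/is an alias for (\S+?)\.?$/)
  match && match[1]
end

# Example using the output captured above; the trailing dot is dropped.
# On a machine with host installed you could instead pass
# the live output, e.g. cname_target(`host 50.19.50.63.xip.io`).
sample = <<~OUT
  50.19.50.63.xip.io is an alias for hj8raq.xip.io.
  hj8raq.xip.io has address 50.19.50.63
OUT

puts cname_target(sample) # hj8raq.xip.io
```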

Updating more config

Since we now have an external domain name, not just localhost, we need to update some configuration files within the custom_config_files directory.

```bash
$ cd /vagrant/custom_config_files
```

Assuming you are running under the domain "yourdomain" (or "hj8raq.xip.io" in my case), you should edit the cloud_controller.yml as follows:

```bash
$ (cd cloud_controller_ng; vim cloud_controller.yml)
```

- change external_domain to api.yourdomain
- change system_domain to yourdomain
- change app_domains to yourdomain
- change uaa:url to http://yourdomain:8080/uaa
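If you rebuild the instance often, these edits can be scripted instead of made by hand. The sketch below uses Ruby's standard yaml library; the function name and the update_cc.rb file name are hypothetical, the key names follow the list above, and whether app_domains holds a single string or a list in your copy of the file is an assumption, so both shapes are handled.

```ruby
require "yaml"

# Hypothetical sketch: apply the cloud_controller.yml changes listed above
# to an already-parsed config hash.
def update_cloud_controller_config(config, domain)
  config["external_domain"] = "api.#{domain}"
  config["system_domain"]   = domain
  # Keep the existing shape of app_domains (list or single string).
  config["app_domains"]     = config["app_domains"].is_a?(Array) ? [domain] : domain
  config["uaa"] ||= {}
  config["uaa"]["url"] = "http://#{domain}:8080/uaa"
  config
end

# Usage: ruby update_cc.rb cloud_controller.yml hj8raq.xip.io
# Rewriting the file with to_yaml drops any comments it contained.
if __FILE__ == $PROGRAM_NAME && ARGV.size == 2
  path, domain = ARGV
  updated = update_cloud_controller_config(YAML.load_file(path), domain)
  File.write(path, updated.to_yaml)
end
```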

Next, edit the DEA configuration.

```bash
$ (cd dea_ng; vim dea.yml)
```

- change domain to yourdomain

And, finally, the configuration of the Health Manager:

```bash
$ (cd health_manager; vim health_manager.yml)
```

- change bulk_api:host to http://api.yourdomain:8181
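The DEA and Health Manager changes can be scripted the same way. The sketch below uses a small hypothetical helper, set_in, that sets a possibly nested key in a parsed YAML hash; the tiny YAML samples stand in for the real files and their contents are illustrative assumptions, only the key names come from the steps above.

```ruby
require "yaml"

# Hypothetical helper: set a possibly nested key in a parsed YAML hash,
# creating any missing intermediate hashes along the way.
def set_in(config, path, value)
  *parents, leaf = path
  node = parents.reduce(config) { |hash, key| hash[key] ||= {} }
  node[leaf] = value
  config
end

domain = "hj8raq.xip.io"

# dea_ng/dea.yml: top-level "domain" key (sample contents are illustrative)
dea = set_in(YAML.load("domain: vcap.me\n"), ["domain"], domain)

# health_manager/health_manager.yml: "host" under "bulk_api" (sample is illustrative)
hm = set_in(YAML.load("bulk_api:\n  host: http://127.0.0.1:8181\n"),
            %w[bulk_api host], "http://api.#{domain}:8181")
```

In practice you would load each real file with YAML.load_file, call set_in, and write the result back, keeping in mind that comments are lost on rewrite.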

Router-registry bug

I hit a small bug on my AWS deployment, which may have been fixed since. It was caused by a JSON incompatibility between the Cloud Controller and the router: when the Cloud Controller registered the API endpoint with the router, it sent the uris field as a string rather than an array. Here’s the fix:

```bash
$ cd /vagrant/cloud_controller_ng/lib/cloud_controller
$ vim message_bus.rb
```

Then, change the line:

```ruby
:uris => config[:external_domain],
```

To this:

```ruby
:uris => [config[:external_domain]],
```

This makes :uris an array rather than a string. It would probably be better to fix this in the gorouter, but this is quicker for now.
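To see what the one-line change does to the message on the wire, the sketch below serializes both versions of the field with Ruby's standard json library. Only the uris field is modelled; the rest of the registration message is deliberately left out, and the domain is the example one used above.

```ruby
require "json"

# Illustrative sketch: only the "uris" field of the router registration
# message is modelled; the real message carries other fields as well.
config = { external_domain: "api.hj8raq.xip.io" }

# Before the fix: the value is sent as a bare string.
before = JSON.generate({ "uris" => config[:external_domain] })

# After the fix: the value is wrapped in an array.
after = JSON.generate({ "uris" => [config[:external_domain]] })

puts before # {"uris":"api.hj8raq.xip.io"}
puts after  # {"uris":["api.hj8raq.xip.io"]}
```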

Reset the CC DB

Now we need to reset the Cloud Controller database.

```bash
$ cd /vagrant/
$ rake cf:bootstrap
```

Finally, reboot the machine.

```bash
$ sudo reboot
```

When the machine comes back up, we can ssh back into it from the cf-vagrant-installer directory on our local machine:

```bash
$ vagrant ssh
```

Then run ./start.sh to start the Cloud Foundry components:

```bash
$ cd /vagrant
$ ./start.sh
```

Now, Cloud Foundry v2 should be running with your externally accessible endpoint.

Related video

In this meetup session, Gastón Ramos and Alan Morán of Altoros Argentina deliver an overview of Cloud Foundry and present CF Vagrant Installer to the audience.