Gartner: Companies Lose Millions Due to Poor Data Quality

Siloed data

According to Ted Friedman, an analyst at Gartner, many organizations have finally acknowledged the importance of customer data quality, yet only a few have actually implemented the associated tools or initiatives across the enterprise. Moreover, even organizations that do use data quality tools generally have not adopted them enterprise-wide.

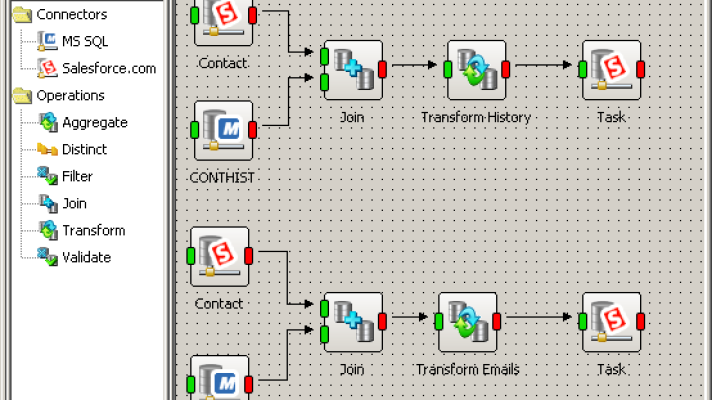

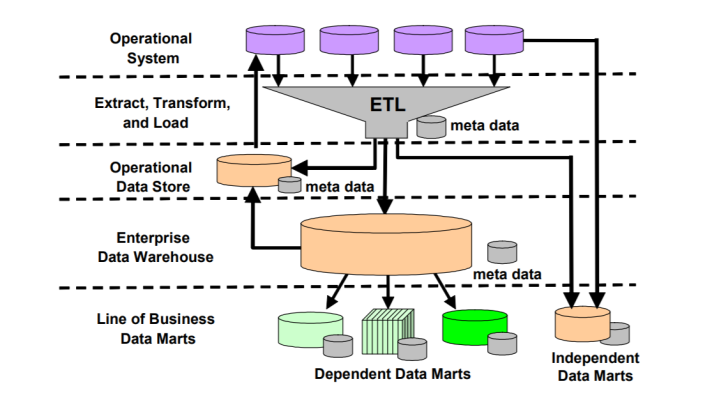

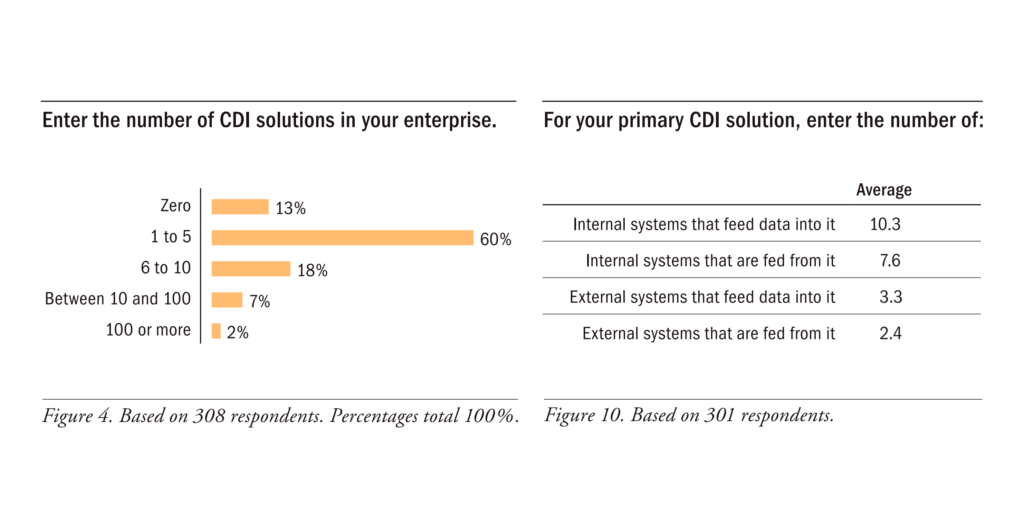

Among the reasons, analysts cite the fact that most companies collect and store customer data in numerous sources spread throughout the organization (“silos”) with no way to connect them. Organizations fail to deploy data quality tools enterprise-wide because they lack a single view of the customer, which master data management (MDM) or customer data integration (CDI) would provide. Some have as many as 10 different customer databases with no single schema for collecting customer data.

These findings are similar to what TDWI discovered last year.

Source: TDWI, Q4 2008

As a result, poor or siloed data leads to missed cross-sell and up-sell opportunities and can even deter potential customers, who usually expect personalized interactions. To address these problems, companies need to think about data quality broadly, in the context of enterprise information management and MDM/CDI initiatives.

Experts advise companies with simple data quality needs to start by using the tools embedded in their existing applications. However, most organizations will need to invest in more specialized tools for sophisticated tasks, such as data parsing and standardization.
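As a rough illustration of what parsing and standardization involve, the sketch below cleans a single customer record: it collapses stray whitespace and fixes capitalization in the name, parses a phone number into one consistent format, and maps different spellings of a state to a standard code. It is a minimal sketch under stated assumptions, not how any particular product works: the field names, the `STATE_ALIASES` table, and the functions `standardize_phone` and `standardize_record` are hypothetical, and only ten-digit North American phone numbers are handled. Specialized tools rely on far larger reference data and rule sets.

```python
import re
from typing import Dict, Optional

# Hypothetical lookup table mapping spelling variants to a standard code.
STATE_ALIASES = {
    "california": "CA",
    "calif.": "CA",
    "ca": "CA",
    "new york": "NY",
    "n.y.": "NY",
    "ny": "NY",
}


def standardize_phone(raw: str) -> Optional[str]:
    """Parse a North American phone number into the form NNN-NNN-NNNN.

    Returns None when the input cannot be parsed, so the record can be
    flagged as an exception instead of silently passed through.
    """
    digits = re.sub(r"\D", "", raw)  # keep digits only
    if len(digits) == 11 and digits.startswith("1"):
        digits = digits[1:]  # drop the leading country code
    if len(digits) != 10:
        return None
    return f"{digits[:3]}-{digits[3:6]}-{digits[6:]}"


def standardize_record(record: Dict[str, str]) -> Dict[str, Optional[str]]:
    """Return a cleaned copy of a customer record in a consistent layout."""
    # Collapse repeated whitespace, then normalize capitalization.
    name = " ".join(record.get("name", "").split()).title()
    state_key = record.get("state", "").strip().lower()
    return {
        "name": name,
        "phone": standardize_phone(record.get("phone", "")),
        # Unknown states are upper-cased as-is; empty states become None.
        "state": STATE_ALIASES.get(state_key, state_key.upper() or None),
    }
```

For example, `standardize_record({"name": "  jane   DOE ", "phone": "(555) 123-4567", "state": "Calif."})` yields `{"name": "Jane Doe", "phone": "555-123-4567", "state": "CA"}`. Returning `None` for an unparseable phone number, rather than guessing, keeps bad values visible for later review.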

Improvements to avoid losses

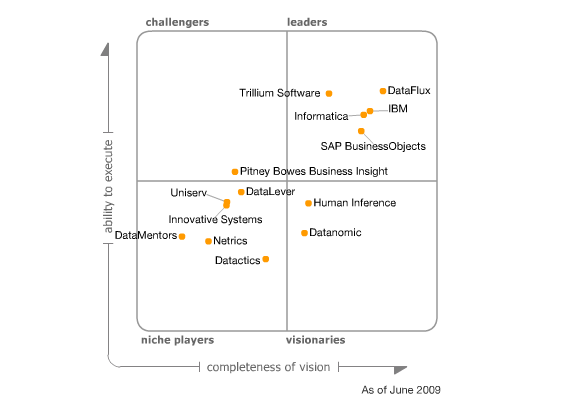

Although adoption of data quality tools has increased recently, organizations still lose an average of $8.2 million annually due to poor data quality, according to a Gartner report published in August 2009. Moreover, most organizations remain very far from achieving comprehensive data quality processes. The reason is that data quality tools are used mainly by IT staff: the products are complex and difficult for non-IT users to understand, which slows adoption.

Gartner offered several recommendations to help organizations improve data quality. First, vendors should make data quality tools simpler to use, so that business users, and not only IT staff, can adopt them.

“In particular, providing data profiling and visualization functionality (reporting and dashboarding of data quality metrics and exceptions) to a broader set of business users would increase awareness of data quality issues and facilitate data stewardship activities,” Gartner analyst Ted Friedman wrote in the report.
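To make "data quality metrics and exceptions" more concrete, here is a minimal profiling sketch for one field of customer data. The metrics it reports (completeness, number of distinct values, and values that break a rule) are common examples chosen for illustration, not Gartner's definitions; `FieldProfile`, `profile_field`, and the sample email rule are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class FieldProfile:
    """Simple data quality metrics for one field of customer data."""
    total: int
    filled: int
    distinct: int
    exceptions: List[str] = field(default_factory=list)

    @property
    def completeness(self) -> float:
        # Share of records where the field is present and non-empty.
        return self.filled / self.total if self.total else 0.0


def profile_field(
    records: List[Dict[str, str]],
    name: str,
    rule: Optional[Callable[[str], bool]] = None,
) -> FieldProfile:
    """Profile one field; values failing `rule` are collected as exceptions."""
    values = [r.get(name) for r in records]
    filled = [v for v in values if v not in (None, "")]
    exceptions = [v for v in filled if rule is not None and not rule(v)]
    return FieldProfile(
        total=len(values),
        filled=len(filled),
        distinct=len(set(filled)),
        exceptions=exceptions,
    )


records = [
    {"email": "a@example.com"},
    {"email": ""},
    {"email": "not-an-email"},
    {"email": "a@example.com"},
]
p = profile_field(records, "email", rule=lambda v: "@" in v)
print(f"completeness={p.completeness:.0%} distinct={p.distinct} exceptions={p.exceptions}")
```

Numbers like these are what a dashboard for business users would display; the exception list gives a data steward something specific to fix.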

Adopters, in turn, are advised to consider pervasive data quality controls throughout the organization’s infrastructure, and to invest in technologies that apply data quality rules to data at the point of capture and during maintenance, as well as downstream.
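One way to read "pervasive" controls is that the same rules are defined once and enforced both when data is captured and later, downstream. The sketch below illustrates that idea under stated assumptions: the two rules, the in-memory `store` list, and the functions `violations`, `capture`, and `audit` are all hypothetical, and the email check is deliberately rough.

```python
import re
from typing import Callable, Dict, List, Tuple

# Hypothetical rule set: (field name, check, message shown on failure).
Rule = Tuple[str, Callable[[str], bool], str]

RULES: List[Rule] = [
    ("email",
     lambda v: re.fullmatch(r"[^@\s]+@[^@\s]+\.[^@\s]+", v) is not None,
     "email is not well formed"),
    ("country",
     lambda v: len(v) == 2 and v.isalpha() and v.isupper(),
     "country must be a two-letter uppercase code"),
]


def violations(record: Dict[str, str]) -> List[str]:
    """Return the message of every rule the record breaks."""
    return [msg for field_name, check, msg in RULES
            if not check(record.get(field_name, ""))]


def capture(record: Dict[str, str], store: List[Dict[str, str]]) -> None:
    """Point of capture: refuse to store a record that breaks any rule."""
    problems = violations(record)
    if problems:
        raise ValueError("; ".join(problems))
    store.append(record)


def audit(store: List[Dict[str, str]]) -> Dict[int, List[str]]:
    """Downstream: re-check stored records, e.g. after a load from another system."""
    return {i: p for i, r in enumerate(store) if (p := violations(r))}
```

Because `capture` and `audit` share `RULES`, records that bypass the entry point (for instance, a bulk load from another silo) are still caught by the same checks later.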

Although enterprise-wide adoption of customer data quality tools still lags, experts predict it will soon gain momentum as more companies recognize the competitive advantage it can provide.

Further reading

- Data Quality: Upstream or Downstream?

- Gartner Suggests Rationalizing Data Integration Tools to Cut Costs

- Poor Data Quality Can Have Long-Term Effects