Event-Driven Microservices with OpenWhisk: Comparison to Traditional Models

A new class of services and apps

Animesh Singh

At a recent meetup in Silicon Valley, Animesh Singh of IBM noted that we’re now in a world of cloud-native apps, written for use in cloud computing environments.

According to Animesh, some key things to keep in mind when writing cloud-native apps include:

- Clean contract with underlying OS to ensure maximum portability

- Scale elastically without significant changes to tooling, architecture, or development practices

- Resilient to inevitable failures in the infrastructure and application

- Instrumented to provide both technical and business insight

- Utilize cloud services (e.g., storage, queuing, and caching)

- Rapid and repeatable deployments to maximize agility

- Automated setup to minimize time and cost for new developers

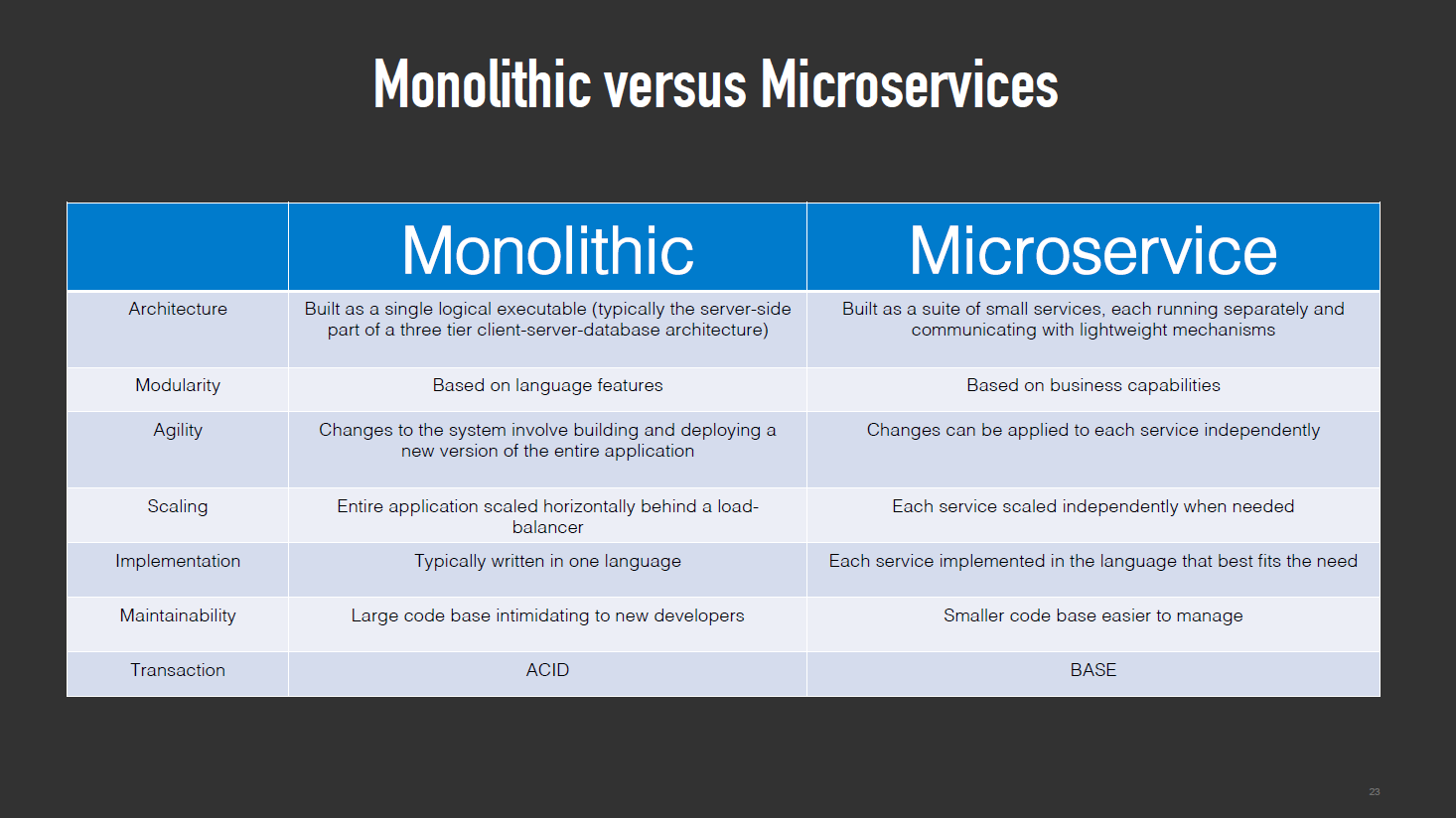

Animesh pointed out the increasingly accepted reasons for the microservices approach, presenting a table of some key differences between monolithic apps and microservices.

Animesh also mentioned some issues associated with monolithic apps. For example, scaling them means “you’re actually creating several instances of the same app, so if you have a problem with one [instance], they will all likely crash.”

Event-driven vs. traditional

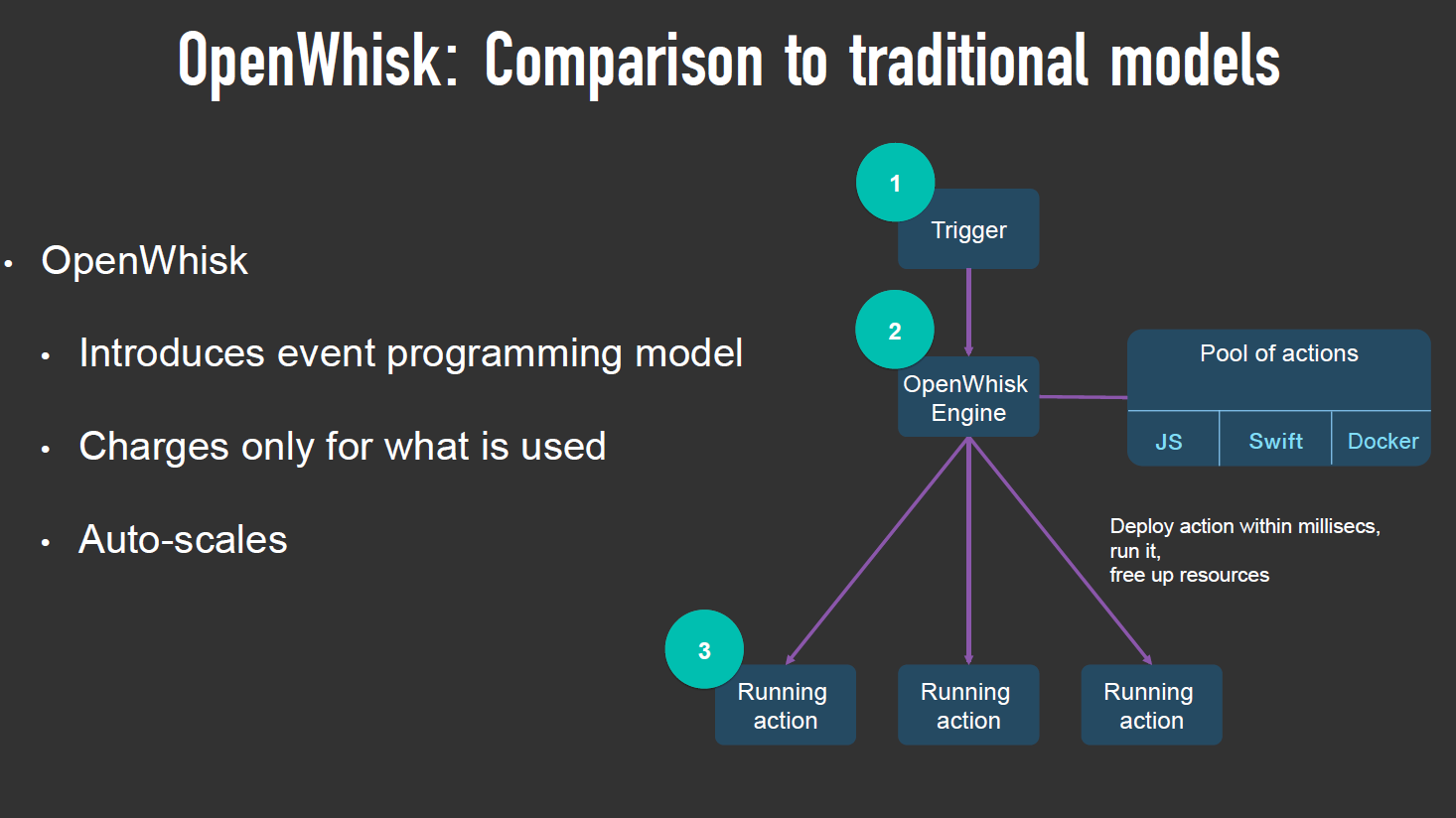

During the presentation, Animesh stressed that event-driven models are not limited to single, simple events. Rather, think of “a class of events that can happen,” as Animesh said. He then got to the heart of the matter: how OpenWhisk can address the challenges of creating event-driven microservices.

“OpenWhisk targets a new class of event-driven apps and services,” Animesh noted, as he took attendees through a point-by-point case for using this new technology. OpenWhisk features a serverless architecture designed to chain microservices and to be highly scalable.

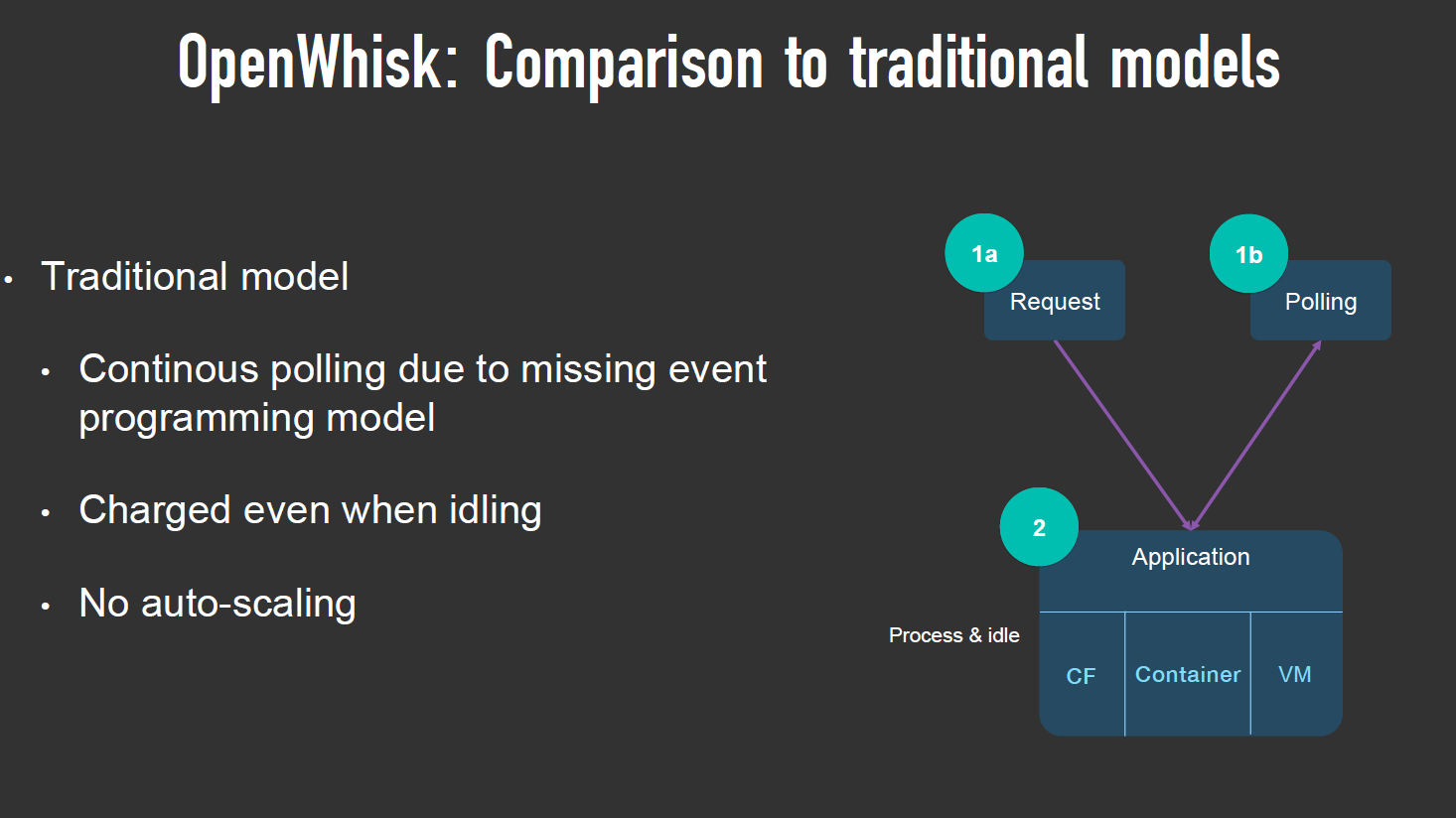
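To make the idea of chaining microservices concrete, here is a minimal local sketch in Python. It only simulates the pattern in plain Python and does not call any OpenWhisk API: each step is a function that takes a dictionary and returns a dictionary, and the output of one step becomes the input of the next. The function names (`parse_reading`, `to_fahrenheit`, `run_sequence`) and the payload fields are hypothetical, chosen purely for illustration.

```python
from functools import reduce


def parse_reading(args):
    """First step: turn a raw string reading into a number (hypothetical field names)."""
    return {"celsius": float(args["raw"])}


def to_fahrenheit(args):
    """Second step: convert the parsed value to Fahrenheit."""
    return {"fahrenheit": args["celsius"] * 9 / 5 + 32}


def run_sequence(actions, payload):
    """Feed the payload through each action in order, passing each result onward."""
    return reduce(lambda result, action: action(result), actions, payload)


if __name__ == "__main__":
    print(run_sequence([parse_reading, to_fahrenheit], {"raw": "25"}))
```

Because every step shares the same dictionary-in, dictionary-out shape, steps can be reordered or replaced without touching the others, which is the property that makes small services easy to chain.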

“OpenWhisk provides a distributed compute service to execute application logic in response to events,” Animesh said. A key to OpenWhisk, particularly when public-cloud providers bill by the millisecond, is its ability to run microservices only when they’re actually doing something. Animesh provided two slides on this point.
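As a rough illustration of application logic that runs in response to an event, the sketch below follows the convention OpenWhisk uses for Python actions: a `main` function that receives the event parameters as a dictionary and returns a dictionary. The event fields (`sensor_id`, `temperature`, `threshold`) and the default threshold of 30.0 are assumptions made up for this example, not something taken from the talk.

```python
def main(args):
    """Action entry point: receives event parameters as a dict, returns a dict."""
    # "sensor_id", "temperature", and "threshold" are hypothetical field names.
    sensor_id = args.get("sensor_id", "unknown")
    temperature = args.get("temperature")

    if temperature is None:
        # Report the problem in the result instead of raising an exception.
        return {"error": "missing temperature reading"}

    threshold = args.get("threshold", 30.0)
    return {
        "sensor_id": sensor_id,
        "temperature": temperature,
        "alert": temperature > threshold,
    }


if __name__ == "__main__":
    # Local smoke test; on the platform the event would supply the arguments.
    print(main({"sensor_id": "s-1", "temperature": 31.5}))
```

The function holds no state between calls, so the platform is free to start it when an event arrives and stop it as soon as it returns.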

In the traditional model, apps (and services) are deemed to be “on” continuously once they’ve been launched. In contrast, an IoT sensor is likely to be sending out a very short, periodic signal. With millisecond billing in place, OpenWhisk consumes compute time only while that periodic signal is being handled. Thus, a microservice is charged only for the time it’s in actual use, which might be 50 to 100 milliseconds per minute.
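The arithmetic behind the 50 to 100 milliseconds figure can be worked through in a few lines of Python. This is a back-of-the-envelope sketch that considers execution time only; it assumes exactly one invocation per minute and ignores factors such as memory-based pricing or billing granularity, which vary by provider. The function names are hypothetical.

```python
MS_PER_MINUTE = 60_000
MINUTES_PER_DAY = 24 * 60


def billed_ms_per_day(active_ms_per_minute):
    """Total billed milliseconds per day for a service active this long each minute."""
    return active_ms_per_minute * MINUTES_PER_DAY


def active_fraction(active_ms_per_minute):
    """Share of wall-clock time the service is actually running."""
    return active_ms_per_minute / MS_PER_MINUTE


if __name__ == "__main__":
    always_on_seconds = MINUTES_PER_DAY * 60  # 86,400 seconds in a day
    for active_ms in (50, 100):
        seconds = billed_ms_per_day(active_ms) / 1000
        print(
            f"{active_ms} ms/min: {seconds:.0f} s billed per day "
            f"versus {always_on_seconds} s always on "
            f"({active_fraction(active_ms):.3%} of the time)"
        )
```

Under these assumptions, the service is billed for roughly 72 to 144 seconds per day, compared with the 86,400 seconds an always-on instance would occupy.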

In addition to flexible computation models, OpenWhisk enables developers to write code quickly and provides integrated container support. Finally, its users can build pieces of code independently of one another, which makes it a perfect fit for creating microservices written in different languages and technologies.

This recap by Animesh explains what “serverless” means and how OpenWhisk fits into the existing technology landscape. The platform can be found in this GitHub repository.

Want details? Watch the video!

Related links

- Scaling Industrial Event-Driven Microservices with OpenWhisk

- Reducing Complexity of Software with Domain-Driven Design and Microservices

- IBM Bluemix OpenWhisk 101: Developing a Microservice

- How to Use OpenWhisk Docker Actions in IBM Bluemix