The Origins of Performance Issues When Integrating Data

How do DI performance issues occur?

So much has been written about the challenges of data integration (DI) that when an integration initiative at an enterprise finally starts working, everybody sighs with relief. However, they overlook at least one thing worth worrying about: performance.

What could be wrong with performance, you ask? According to David Linthicum, a computing and application integration expert, there are two main data integration performance issues:

- The first is caused by organic data growth. Common to both real-time and batch data integration models, it leaves the system unable to retrieve and deliver the needed data on time, causing undesirable latencies.

- The second issue results from poor data integration architecture. It arises when core data integration performance requirements have been poorly formulated or misunderstood, which leads to the selection of the wrong technology or approach.
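
To make the first issue more concrete, here is a back-of-the-envelope sketch that estimates how many months a batch load has before it outgrows its processing window. The function name is hypothetical, and the model is a deliberate simplification: it assumes load time scales linearly with data volume and that volume grows at a fixed monthly rate, which real pipelines rarely do exactly.

```python
import math


def months_until_window_exceeded(current_minutes: float,
                                 window_minutes: float,
                                 monthly_growth: float) -> int:
    """Return the first month in which a batch load no longer fits its window.

    Simplified model (an assumption, not a measured law): load time scales
    linearly with data volume, and volume grows by `monthly_growth`
    (e.g. 0.05 for 5%) every month.
    """
    if current_minutes <= 0 or monthly_growth <= 0:
        raise ValueError("current_minutes and monthly_growth must be positive")
    if current_minutes > window_minutes:
        return 0  # the load already overruns its window today
    # Smallest integer n with current * (1 + g) ** n > window.
    n = math.log(window_minutes / current_minutes) / math.log(1 + monthly_growth)
    return math.floor(n) + 1


print(months_until_window_exceeded(60, 120, 0.05))  # 15
```

Under these assumptions, a load that takes 60 minutes today in a 120-minute window, with 5% monthly data growth, overruns the window in month 15, even though nothing in the integration logic has changed.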

Why is this so dramatic? Because a data integration initiative is started to provide better visibility into, and access to, the data the business needs. If this information does not appear in target systems when a decision-maker requests it, the initiative has not achieved its goal.

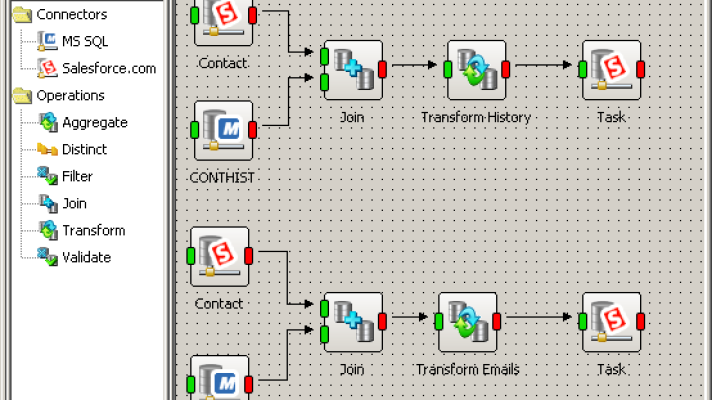

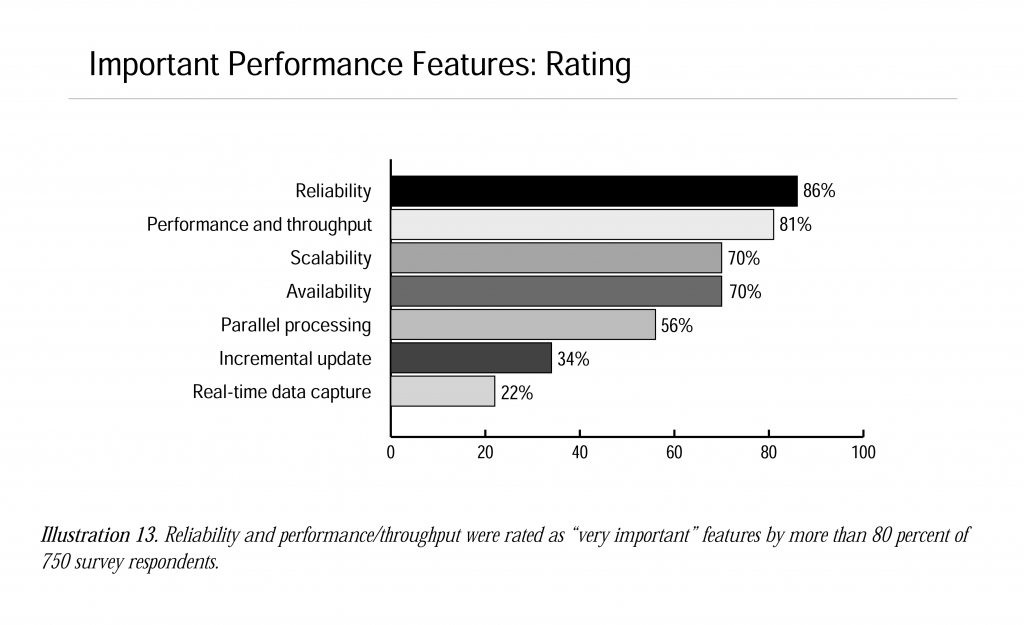

Reliability, latencies, and throughput are the top criteria for DI performance (image credit)

Areas of performance improvement

According to a TDWI report, “there are many variables that affect throughput—the complexity of transformations, the number of sources that must be integrated, the complexity of target data models, the capabilities of the target database,” among others.

Here are the main checkpoints for verifying that a DI solution’s performance still meets your corporate standards.

Data transformation schemas deal with both data structure and content. If data mappings are poorly organized, a single transformation may take twice as long, and these small mapping delays add up. The solution is to make sure that data maps are written as efficiently as possible. You can compare your data integration solution with similar ones to determine whether data transformation runs at the required speed.

This is where performance testing helps a lot: it locates bottlenecks so that you can fix them first.
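
As a minimal illustration of how mapping efficiency shows up in a performance test, the sketch below times two implementations of the same hypothetical code-to-name mapping. One scans a reference list for every record; the other builds a dictionary index once. The reference data, record shape, and function names are assumptions for illustration, not part of any particular DI tool.

```python
import time

# Hypothetical reference data used by a mapping step: (code, name) pairs.
REFERENCE = [(f"C{i:04d}", f"Country {i}") for i in range(2000)]


def map_with_scan(records):
    """Slow mapping: scans the whole reference list for every record."""
    out = []
    for rec in records:
        name = next((n for code, n in REFERENCE if code == rec["code"]), None)
        out.append({**rec, "name": name})
    return out


def map_with_index(records):
    """Faster mapping: builds a dict index once, then does average O(1) lookups."""
    index = dict(REFERENCE)
    return [{**rec, "name": index.get(rec["code"])} for rec in records]


def timed(func, records):
    """Run one mapping function and return (result, elapsed seconds)."""
    start = time.perf_counter()
    result = func(records)
    return result, time.perf_counter() - start


if __name__ == "__main__":
    records = [{"id": i, "code": f"C{i % 2000:04d}"} for i in range(3000)]
    slow, t_slow = timed(map_with_scan, records)
    fast, t_fast = timed(map_with_index, records)
    assert slow == fast  # an optimization must not change the output
    print(f"scan: {t_slow:.3f} s, index: {t_fast:.3f} s")
```

The equality check matters as much as the timing: a performance fix to a mapping is only acceptable if it produces exactly the same output as the version it replaces.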

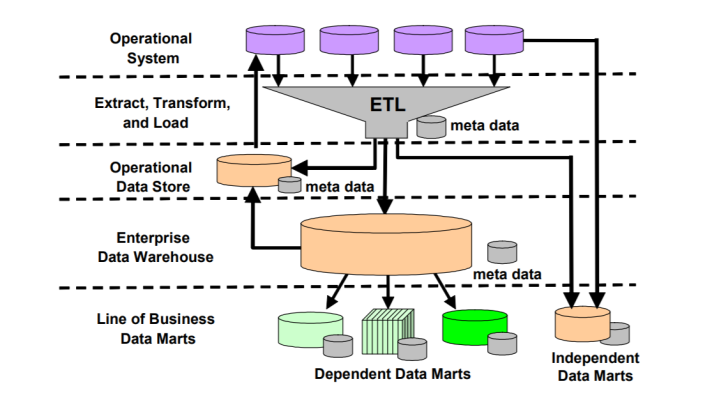

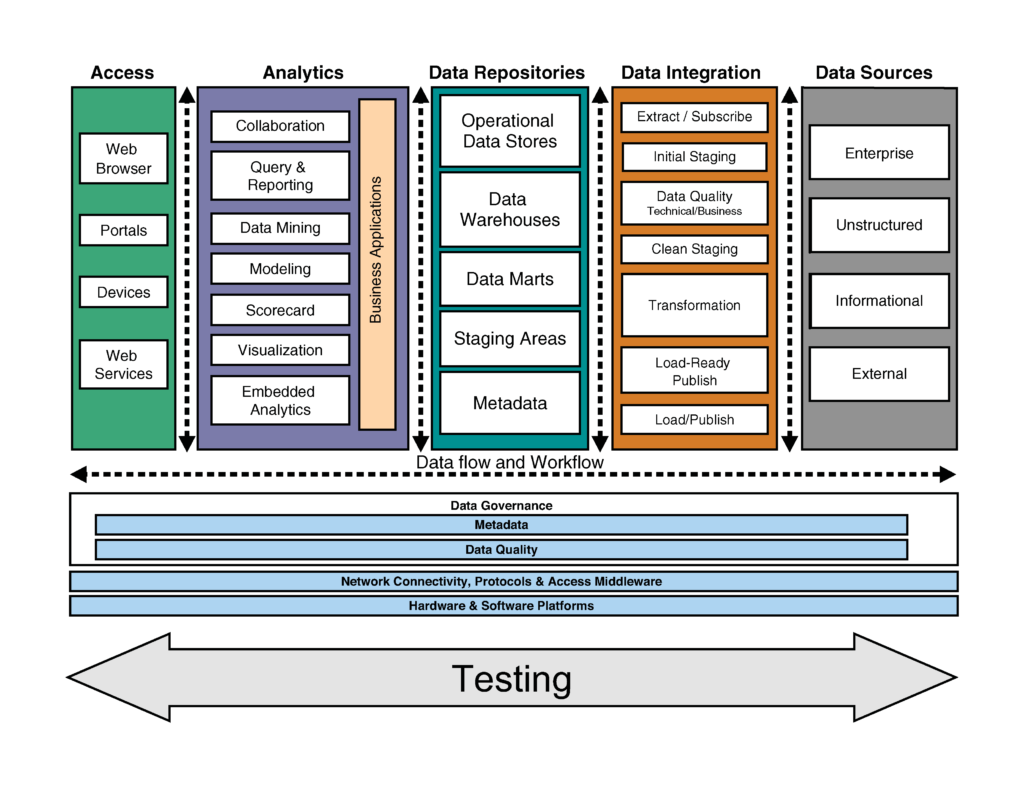

The areas of testing for a data warehouse project (image credit)

The runtime environment can also stall your data integration processes. Make sure that the operating system, runtime, and other components work properly. “No matter how much horsepower a system possesses, if its runtime environment is unstable, overall performance suffers,” a TDWI report says.

Network bandwidth and traffic can be the real constraint. In many cases, performance is hindered not by the data integration tool itself but by the capacity of the network you use. To avoid this issue, estimate the expected performance under various loads and use the fastest network your data integration budget allows.
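
A rough way to estimate performance under various loads is simple arithmetic: transfer time equals data volume divided by the bandwidth actually available to the job. The sketch below assumes a simplified model in which other traffic already occupies a fixed fraction of the link and protocol overhead is ignored; the function name and the 50 GB batch are hypothetical.

```python
def transfer_seconds(data_gb: float, link_mbps: float, utilization: float) -> float:
    """Estimate how long moving `data_gb` gigabytes takes over a link.

    Simplified model (assumption): other traffic already uses `utilization`
    (0 <= u < 1) of the link, the integration job gets the rest, and
    protocol overhead is ignored. Units are decimal: 1 GB = 8,000 megabits.
    """
    if not 0 <= utilization < 1:
        raise ValueError("utilization must be in [0, 1)")
    megabits = data_gb * 8_000
    effective_mbps = link_mbps * (1 - utilization)
    return megabits / effective_mbps


# Hypothetical nightly batch of 50 GB over a 1 Gbit/s link at different loads.
for load in (0.0, 0.5, 0.8):
    minutes = transfer_seconds(50, 1_000, load) / 60
    print(f"load {load:.0%}: {minutes:.1f} min")
```

With these numbers, the batch needs 400 seconds on an idle link, 800 seconds when half the link is busy, and 2,000 seconds at 80% utilization, which is why an estimate at a single, idle-network load can be misleading.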

Custom SQL queries, when used, are also points where latencies may occur. Review them periodically, test them, and fix them accordingly.
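
One way to review a custom query is to compare its execution plan and timing before and after a change. The sketch below uses Python's built-in `sqlite3` module purely as a stand-in for whatever database your integration actually queries; the `orders` table, the query, and the index name are hypothetical, and the exact wording of the plan text varies between SQLite versions.

```python
import sqlite3
import time

# Hypothetical custom query run by an integration job.
QUERY = "SELECT SUM(amount) FROM orders WHERE customer_id = ?"


def build_db(rows: int = 50_000) -> sqlite3.Connection:
    """Create an in-memory table that stands in for a real source system."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL)"
    )
    conn.executemany(
        "INSERT INTO orders (customer_id, amount) VALUES (?, ?)",
        ((i % 1000, float(i % 50)) for i in range(rows)),
    )
    conn.commit()
    return conn


def query_plan(conn, sql, params=()):
    """Return the human-readable 'detail' column of EXPLAIN QUERY PLAN."""
    return [row[3] for row in conn.execute("EXPLAIN QUERY PLAN " + sql, params)]


def time_query(conn, sql, params, repeats=50):
    """Run the query `repeats` times and return the total elapsed seconds."""
    start = time.perf_counter()
    for _ in range(repeats):
        conn.execute(sql, params).fetchone()
    return time.perf_counter() - start


if __name__ == "__main__":
    conn = build_db()
    print("before:", query_plan(conn, QUERY, (42,)), time_query(conn, QUERY, (42,)))
    conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
    print("after: ", query_plan(conn, QUERY, (42,)), time_query(conn, QUERY, (42,)))
```

Before the index, the plan reports a full scan of `orders`; afterwards, it reports a search using the index. Checking the plan, not just the timing, shows why a query got faster and helps catch regressions when the schema changes.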

Source and target connections, as well as other endpoints, are prone to performance issues. If the systems being integrated are limiting throughput, you may need to optimize their performance.

In addition, explore opportunities for app consolidation (to streamline workflows) or data federation (if this approach meets your business needs).

If you use ODBC connections, also read our article on how to improve their performance.

Finally, bottlenecks can appear in many other areas, but if there is one thing to take away, it is this: caching frequently used data may help in many scenarios. Analyze your workflows and eliminate redundancy.
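
As a minimal sketch of caching frequently used data, the example below wraps a hypothetical slow lookup (an exchange-rate fetch from a source system, simulated with a short sleep) in Python's standard `functools.lru_cache`, so repeated lookups for the same key do not hit the source again. The function names and rates are illustrative assumptions.

```python
import time
from functools import lru_cache

# Counts how often the (hypothetical) source system is actually queried.
SOURCE_CALLS = {"count": 0}


def fetch_rate_from_source(currency: str) -> float:
    """Hypothetical slow lookup against a source system."""
    SOURCE_CALLS["count"] += 1
    time.sleep(0.01)  # stand-in for network or database latency
    return {"EUR": 1.08, "GBP": 1.27}.get(currency, 1.0)


@lru_cache(maxsize=256)
def exchange_rate(currency: str) -> float:
    """Cached wrapper: repeat lookups for the same currency skip the source."""
    return fetch_rate_from_source(currency)


def convert(rows):
    """Convert (amount, currency) rows to a base currency using cached rates."""
    return [amount * exchange_rate(currency) for amount, currency in rows]


rows = [(100.0, "EUR"), (50.0, "GBP"), (20.0, "EUR")] * 100  # 300 rows
converted = convert(rows)
print(SOURCE_CALLS["count"], exchange_rate.cache_info())
# 2 CacheInfo(hits=298, misses=2, maxsize=256, currsize=2)
```

The 300 rows trigger only two source lookups. The trade-off is freshness: `lru_cache` has no expiry, so if the source data changes, you would need to call `exchange_rate.cache_clear()` or use a cache with a time-to-live.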

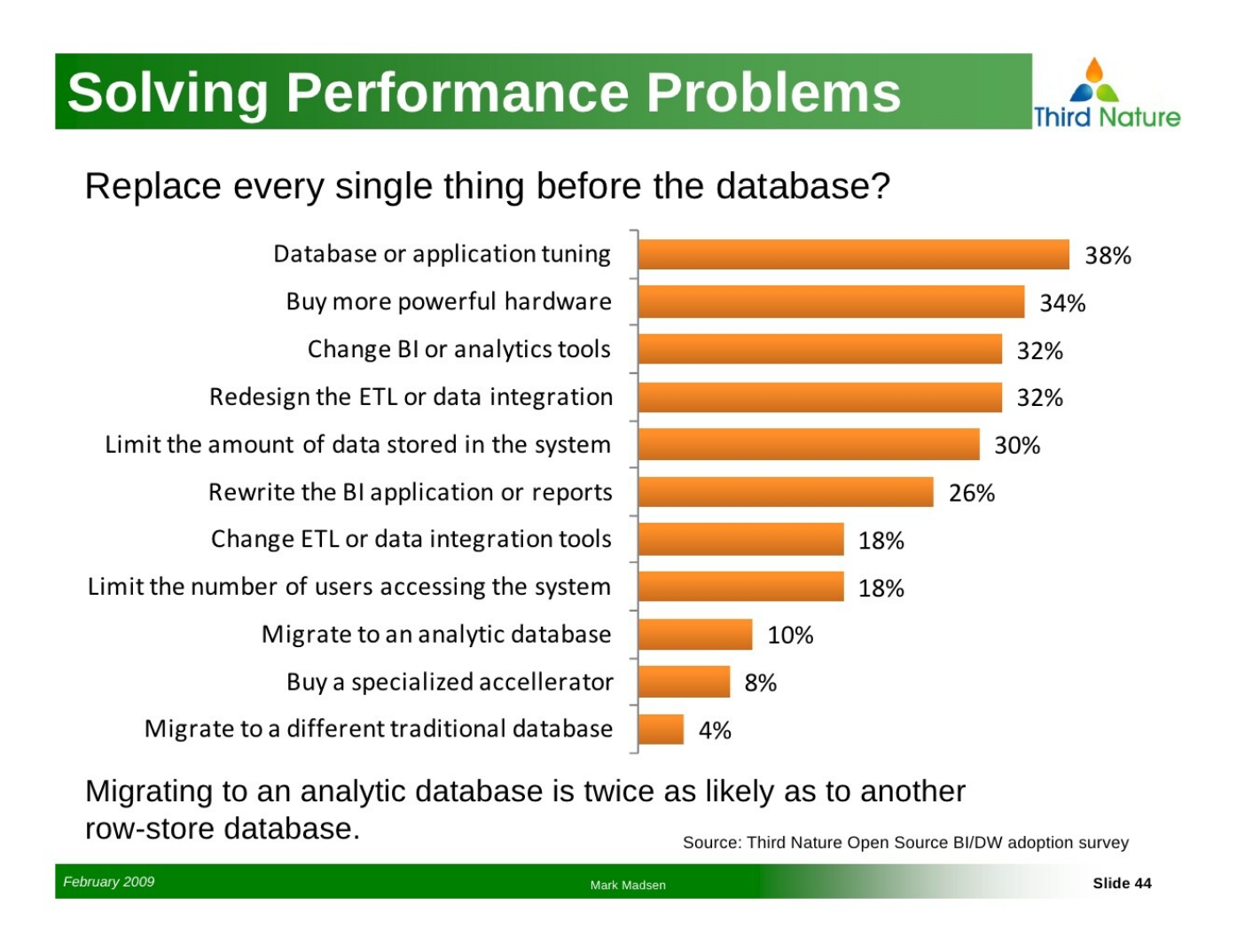

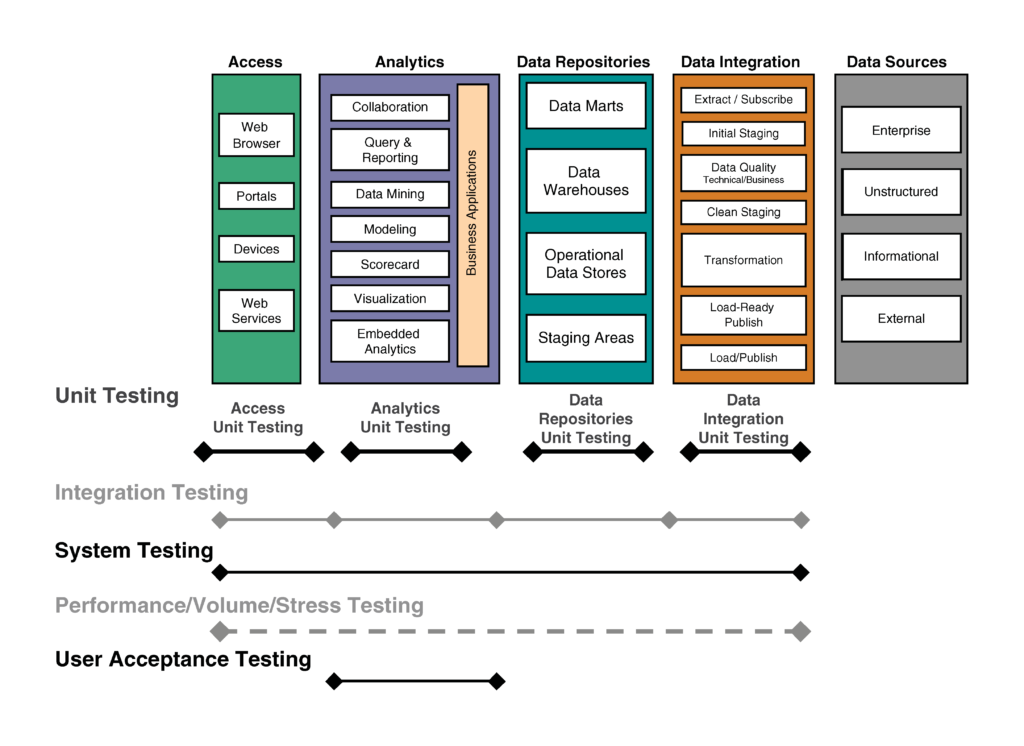

The types of testing for a data warehouse project (image credit)

A data integration solution is like a car: it can run fast, but it slows down if it is not properly tuned and maintained. As we become more dependent on data integration, our ability to understand and address its performance issues will make a substantial difference.

So, plan your data integration architecture carefully and keep an eye on performance.

Further reading

- When Developing System Architectures, Think About Data Integration

- Steps to Consolidate Apps and Data to Cut Operational Costs

- Improving Database Integration with ODBC

With contributions from Alex Khizhniak and Katherine Vasilega.