How to Deploy Spring Boot Applications in Docker Containers

There is no one-size-fits-all strategy for building microservices. Watch the video to learn why Spring Boot and Docker are a great fit for the task.

Microservices-based architectures are complex and require equally sophisticated continuous integration (CI) and production environments. In his talk, Chris Richardson describes the Docker- and Jenkins-based deployment pipeline and production environment used by his microservices app.

Packaging makes applications more portable, but traditional ways of doing it are far from perfect. For example, AMIs (Amazon Machine Images) are slow and heavyweight. Learn why Docker containers are better than AMIs and how you can use Docker to deploy your apps.

Deploying microservices is still challenging

According to Chris Richardson, whereas previous Spring releases brought only incremental changes to the framework, Spring Boot has simplified app deployment and introduced many useful features, such as auto-configuration.

Spring Boot “basically gives you an opinionated way of building a Spring app and it makes it very easy to create standalone, production-ready apps.” It “analyzes the class path that you use to figure out what components are necessary and then finds them for you.” This is very convenient if you are constantly defining new services.

Although Spring Boot simplifies a lot of things, deploying a microservices app remains complex. If you package it as an RPM, you may still have to deal with conflicting dependency versions, port conflicts, and so on. That’s where immutable infrastructure comes into play.

There are several ways to implement immutable infrastructure, for example, packaging the app as an AMI. Unfortunately, AMIs are generally slow and not very portable. In addition, they can be gigabytes in size, which makes them impractical if you want to run many VMs, a poor fit for a distributed system.

“This is what got me interested in Docker. Docker is an incredibly light-weight OS-level virtualization mechanism” that runs on top of Linux. The main advantage of Docker over AMIs is speed:

- Docker images have a layered structure that facilitates sharing and caching between them.

- Although a Docker image contains a complete OS file system, containers share the host's kernel, so nothing has to boot except the Java process itself. As a result, start-up is lightning-fast.

Packaging a Spring Boot app as a Docker image

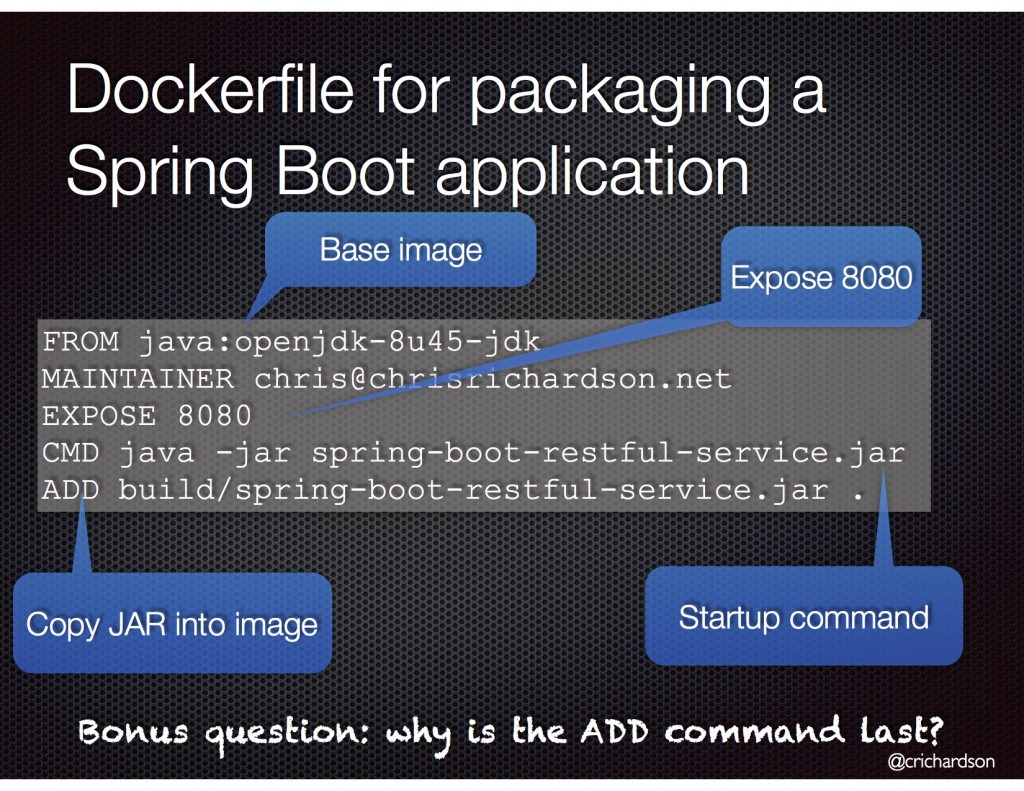

Chris Richardson continues by describing the mechanics of working with Docker images. The first step is writing a minimal Dockerfile, the recipe for the image. In it, you specify the base image, which can be pulled from Docker Hub, a giant community-supported repository of Docker images; add the required metadata; indicate which port will be exposed to the outside world; set the command that runs on start-up; and copy the .jar file into the image that you want to build.
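
To make the recipe concrete, here is a minimal sketch of a Python helper that renders such a Dockerfile. The base image (eclipse-temurin:17-jre), the label text, port 8080, and the jar name are assumptions for illustration, not details from the talk.

```python
def render_dockerfile(jar_name: str = "app.jar",
                      base_image: str = "eclipse-temurin:17-jre",
                      port: int = 8080) -> str:
    """Return the text of a minimal Dockerfile for a Spring Boot fat jar.

    The base image, label, and port are illustrative assumptions.
    """
    lines = [
        f"FROM {base_image}",                        # base image pulled from Docker Hub
        'LABEL description="Spring Boot service"',   # metadata
        f"EXPOSE {port}",                            # port exposed to the outside world
        f'CMD ["java", "-jar", "/{jar_name}"]',      # command run on start-up
        f"COPY {jar_name} /{jar_name}",              # copy the jar into the image
    ]
    return "\n".join(lines) + "\n"
```

Keeping COPY as the last instruction means that a code change only invalidates the final layer, so the earlier layers can be reused from the build cache.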

The build process involves creating a new build directory, copying the .jar file created by Gradle into it, and running the docker build command against that directory. The Docker CLI sends the directory (the build context) to the Docker daemon, which builds the image.
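
The build steps might look roughly like this in Python. The image tag, the jar name, and the Dockerfile contents are hypothetical; prepare_build_context only creates the directory and returns the docker build command, and build_image runs it.

```python
import shutil
import subprocess
import tempfile
from pathlib import Path
from typing import Optional

# Hypothetical minimal Dockerfile for a jar named myservice.jar.
DOCKERFILE = (
    "FROM eclipse-temurin:17-jre\n"
    "EXPOSE 8080\n"
    'CMD ["java", "-jar", "/myservice.jar"]\n'
    "COPY myservice.jar /myservice.jar\n"
)


def prepare_build_context(jar_path: str, dockerfile_text: str, image_tag: str,
                          build_dir: Optional[str] = None) -> list[str]:
    """Create a build directory holding the jar and a Dockerfile.

    Returns the docker build command to run against that directory.
    """
    # Create a fresh directory unless the caller supplies one.
    context = Path(build_dir) if build_dir else Path(tempfile.mkdtemp(prefix="docker-build-"))
    context.mkdir(parents=True, exist_ok=True)
    jar = Path(jar_path)
    shutil.copy(jar, context / jar.name)                   # copy the Gradle-built jar
    (context / "Dockerfile").write_text(dockerfile_text)   # the recipe
    return ["docker", "build", "-t", image_tag, str(context)]


def build_image(cmd: list[str]) -> None:
    """Run docker build; the CLI sends the context directory to the daemon."""
    subprocess.run(cmd, check=True)
```

For example, build_image(prepare_build_context("build/libs/myservice.jar", DOCKERFILE, "myservice:latest")) would build from Gradle's default output directory, assuming a jar with that hypothetical name exists there.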

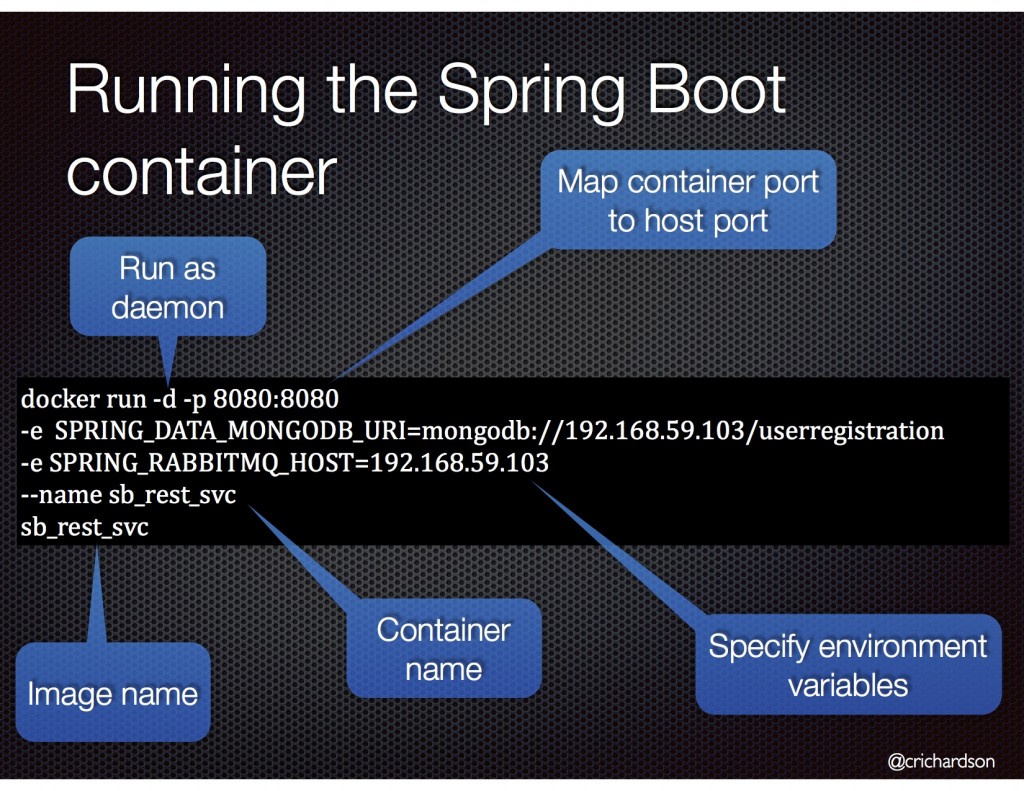

The docker run command runs apps packaged as Docker images. This is the step where you specify how the container will run: its name, port mapping, and so on. One of the cool features of Spring Boot is that you can set configuration properties through OS environment variables. This means you can pass external configuration properties to your Docker container with the -e option. For instance, if your app uses MongoDB and RabbitMQ, you can tell it the coordinates of their servers (see the slide below).
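
As a sketch of how the -e flags could be assembled, the helper below converts Spring Boot property names to environment variables using Spring Boot's relaxed-binding convention for environment variables (dots become underscores, dashes are removed, and the result is upper-cased). The container name, image tag, ports, and host names are hypothetical.

```python
def to_env_var(prop: str) -> str:
    """Map a Spring Boot property name to its environment-variable form."""
    # Relaxed binding: "." -> "_", drop "-", then upper-case.
    return prop.replace(".", "_").replace("-", "").upper()


def docker_run_command(image: str, name: str, host_port: int,
                       container_port: int, props: dict[str, str]) -> list[str]:
    """Build a docker run command that passes properties via -e."""
    cmd = ["docker", "run", "-d", "--name", name,
           "-p", f"{host_port}:{container_port}"]   # port mapping
    for prop, value in props.items():
        cmd += ["-e", f"{to_env_var(prop)}={value}"]  # external configuration
    cmd.append(image)
    return cmd
```

For example, passing {"spring.data.mongodb.uri": "mongodb://mongo-host/app", "spring.rabbitmq.host": "rabbit-host"} produces -e SPRING_DATA_MONGODB_URI=mongodb://mongo-host/app and -e SPRING_RABBITMQ_HOST=rabbit-host, which Spring Boot binds back to those two standard properties.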

Testing and pushing Docker images to production

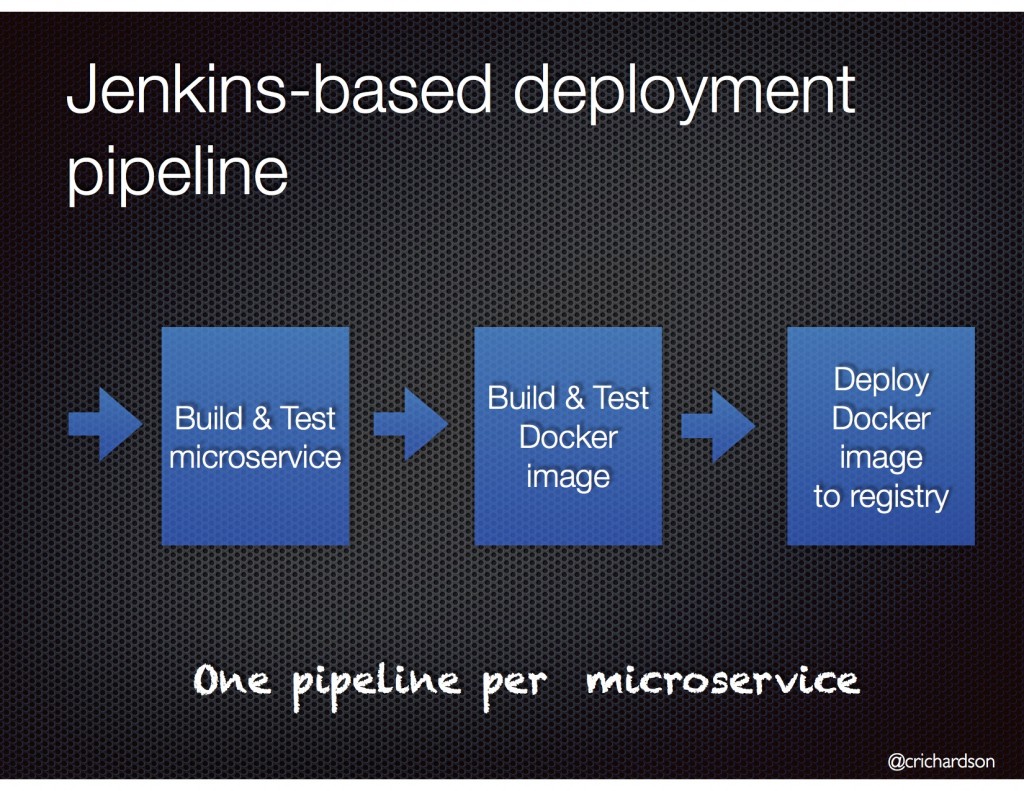

The second half of the talk provides some details about the microservices app that Chris Richardson built using Spring Boot and Docker. The architecture features services written in Scala with Spring Boot and a Node.js-based API gateway. Each service is built by a Jenkins CI pipeline.

Testing Docker images is fairly straightforward. Thanks to the Docker daemon's REST API, you can create and start containers over HTTP. During smoke testing, you start a container, ping a URL, shut the container down, and delete it.
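
The smoke test can be sketched with only the Python standard library talking to the Docker Engine API. This assumes the daemon is reachable over TCP at localhost:2375 (by default it listens only on a Unix socket, which urllib cannot use directly) and that the app answers on some health URL you choose. The Docker calls and the ping are injectable, so the flow can be exercised without a daemon.

```python
import json
import time
import urllib.error
import urllib.request
from typing import Optional

# Assumption: the Docker daemon is exposed over plain TCP on this address.
DOCKER_API = "http://localhost:2375"


def docker_call(method: str, path: str, body: Optional[dict] = None):
    """Send one request to the Docker Engine API; decode the JSON reply, if any."""
    data = json.dumps(body).encode() if body is not None else None
    req = urllib.request.Request(DOCKER_API + path, data=data, method=method,
                                 headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req) as resp:
        raw = resp.read()
    return json.loads(raw) if raw else None


def ping(url: str, timeout_s: float = 60.0) -> bool:
    """Poll url until it returns HTTP 200 or timeout_s seconds have passed."""
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        try:
            with urllib.request.urlopen(url, timeout=2) as resp:
                if resp.status == 200:
                    return True
        except OSError:
            pass  # connection refused or error status: the app is not up yet
        time.sleep(1)
    return False


def smoke_test(image: str, url: str, port: int = 8080,
               call=docker_call, check=ping) -> bool:
    """Create and start a container, ping a URL, then stop and delete it."""
    spec = {
        "Image": image,
        "ExposedPorts": {f"{port}/tcp": {}},
        "HostConfig": {"PortBindings": {f"{port}/tcp": [{"HostPort": str(port)}]}},
    }
    container_id = call("POST", "/containers/create", spec)["Id"]
    try:
        call("POST", f"/containers/{container_id}/start")
        return check(url)
    finally:
        try:
            call("POST", f"/containers/{container_id}/stop")
        except urllib.error.HTTPError:
            pass  # e.g. 304 Not Modified if the container had already exited
        call("DELETE", f"/containers/{container_id}")
```

A call such as smoke_test("myservice:latest", "http://localhost:8080/actuator/health") would work only if the app includes Spring Boot Actuator; any URL the service answers with HTTP 200 will do.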

The next step is to push the Docker image to a hosted registry (e.g., Docker Hub). To do that, you tag the image with the registry's hostname and port. Thanks to layering, only the layers that the registry does not already have are uploaded, so the push takes only about 25 seconds. After the image has been published, you can pull and run it in the production environment. Pulling also takes about 25 seconds.
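
Tagging, pushing, and pulling boil down to a few CLI calls. The sketch below only builds the commands (each could be run with subprocess.run(cmd, check=True)); the registry host, port, repository, and tag are hypothetical.

```python
def registry_image(host: str, port: int, repo: str, tag: str = "latest") -> str:
    """Fully qualified image name that tells Docker which registry to use."""
    return f"{host}:{port}/{repo}:{tag}"


def push_commands(local_image: str, host: str, port: int,
                  repo: str, tag: str = "latest") -> list[list[str]]:
    """Commands run on the CI server to publish an image."""
    target = registry_image(host, port, repo, tag)
    return [
        ["docker", "tag", local_image, target],  # add the registry-qualified name
        ["docker", "push", target],              # uploads only layers the registry lacks
    ]


def pull_command(host: str, port: int, repo: str, tag: str = "latest") -> list[str]:
    """Command run in production to fetch the published image."""
    return ["docker", "pull", registry_image(host, port, repo, tag)]
```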

CI and production environments

The last part of the talk was dedicated to the details of the continuous integration and production environments used by the microservices application.

The CI environment consists of Jenkins and Artifactory, both running as Docker containers on AWS EC2. A separate EBS volume hosts /jenkins-home, /gradle-home, and /artifactory-home, so that their state is not lost when a container is restarted. Keeping the Gradle home directory outside the container also speeds up builds, because Gradle's downloaded dependencies are reused instead of being fetched again.
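
The CI setup could be approximated with docker run commands that bind-mount directories from the EBS volume. The mount point /ebs, the image names, the in-container paths, and the ports are assumptions to verify against each image's documentation; GRADLE_USER_HOME is the environment variable Gradle reads to locate its cache directory.

```python
# Assumption: the separate EBS volume is mounted at /ebs on the EC2 host.
EBS_MOUNT = "/ebs"


def volume_args(mounts: dict[str, str]) -> list[str]:
    """Turn {ebs_subdir: container_path} into docker run -v arguments."""
    args: list[str] = []
    for ebs_dir, container_dir in mounts.items():
        args += ["-v", f"{EBS_MOUNT}/{ebs_dir}:{container_dir}"]
    return args


def ci_container_commands() -> list[list[str]]:
    """docker run commands for Jenkins and Artifactory with state kept on EBS."""
    jenkins = [
        "docker", "run", "-d", "--name", "jenkins", "-p", "8080:8080",
        *volume_args({"jenkins-home": "/var/jenkins_home",   # Jenkins state
                      "gradle-home": "/var/gradle_home"}),  # Gradle cache
        "-e", "GRADLE_USER_HOME=/var/gradle_home",          # point Gradle at the cache
        "jenkins/jenkins:lts",
    ]
    artifactory = [
        "docker", "run", "-d", "--name", "artifactory", "-p", "8081:8081",
        *volume_args({"artifactory-home": "/var/opt/jfrog/artifactory"}),
        "releases-docker.jfrog.io/jfrog/artifactory-oss:latest",
    ]
    return [jenkins, artifactory]
```

Because all state lives under /ebs, a container can be deleted and recreated from a newer image without losing job history, repositories, or the Gradle cache.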

The production environment consists of an EC2 instance. Updates are done with a Python script that uses the Jenkins API to find outdated images in production and then updates them by SSH-ing into the instance and running docker pull to download the new version of each image.
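
The talk does not show the update script, so the following is only a hypothetical sketch of the same idea. It assumes each image is tagged with its Jenkins build number and that the build numbers currently deployed are known; the Jenkins URL, job names, repositories, and ports are placeholders. It reads the build number from Jenkins' JSON API (lastSuccessfulBuild/api/json) and runs docker pull over SSH.

```python
import json
import subprocess
import urllib.request

JENKINS = "http://jenkins.example.com:8080"  # hypothetical Jenkins URL


def latest_build(job: str) -> int:
    """Ask the Jenkins JSON API for the number of the last successful build."""
    url = f"{JENKINS}/job/{job}/lastSuccessfulBuild/api/json"
    with urllib.request.urlopen(url) as resp:
        return json.load(resp)["number"]


def outdated(latest: dict[str, int], deployed: dict[str, int]) -> list[str]:
    """Jobs whose deployed build is older than Jenkins' latest (or missing)."""
    return sorted(job for job, n in latest.items() if deployed.get(job, -1) < n)


def update_command(host: str, image: str, name: str, port: int) -> list[str]:
    """SSH into the host, pull the new image, and replace the running container."""
    remote = (f"docker pull {image} && "
              f"(docker rm -f {name} || true) && "
              f"docker run -d --name {name} -p {port}:{port} {image}")
    return ["ssh", host, remote]


def update(host: str, services: dict[str, tuple[str, int]],
           deployed: dict[str, int]) -> None:
    """services maps a Jenkins job name to (image repository, port)."""
    latest = {job: latest_build(job) for job in services}
    for job in outdated(latest, deployed):
        repo, port = services[job]
        image = f"{repo}:{latest[job]}"  # assumes tag == Jenkins build number
        subprocess.run(update_command(host, image, job, port), check=True)
```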

“It’s like five seconds to build an image, 25 seconds to push it, 25 secs to pull it, and then it takes 10 seconds to start. So, that’s 75 seconds from build completing and it actually running in production.” —Chris Richardson

The bottom line is that Docker containers are a lot faster and more convenient for running microservices than AMIs. Therefore, it is not surprising that Docker is so popular and has already been enabled on several clouds, such as AWS and GCE.

Easier with a PaaS

Microservice architectures offer easy scaling, the ability to evolve different parts of the system independently using any languages and frameworks, high availability, and other cool features. More importantly, they enable faster innovation, something that everyone wants. But nothing in this world comes for free. Managing numerous small services and the multiple copies of each that you need for failover or to handle large workloads can be a nightmare.

In addition, this architectural style may lead to increased operational overhead. To address these downsides, you need a high level of automation, for example, the kind a PaaS provides.

A platform, such as Cloud Foundry, can automate service management, provide containerization, improve server utilization, and take away most of the pain associated with running complex distributed systems.

For a related perspective, this talk by Russ Miles of Simplicity Itself gives a great in-depth overview of how PaaS and microservices fit together. Or, read our guide on microservices, including Cloud Foundry examples.

Want details? Watch the video!

