Ways to Streamline Apps and Ops on Azure Kubernetes Service

Identifying your level of adoption

Kubernetes is a container orchestration system designed to run many types of cloud-native applications. It is available as several distributions and managed services, including Azure Kubernetes Service (AKS) from Microsoft. Because the platform is so broad, organizations running Kubernetes tend to be at different stages of maturity in their deployments.

To understand these differences, it helps to have a model that gauges multiple factors of a Kubernetes deployment, such as high availability, cluster security, and platform operations. With such a model, companies can determine their current maturity level and identify areas for improvement. (To learn more about the beginner, intermediate, advanced, and expert levels of Kubernetes maturity, read our blog post on the topic.)

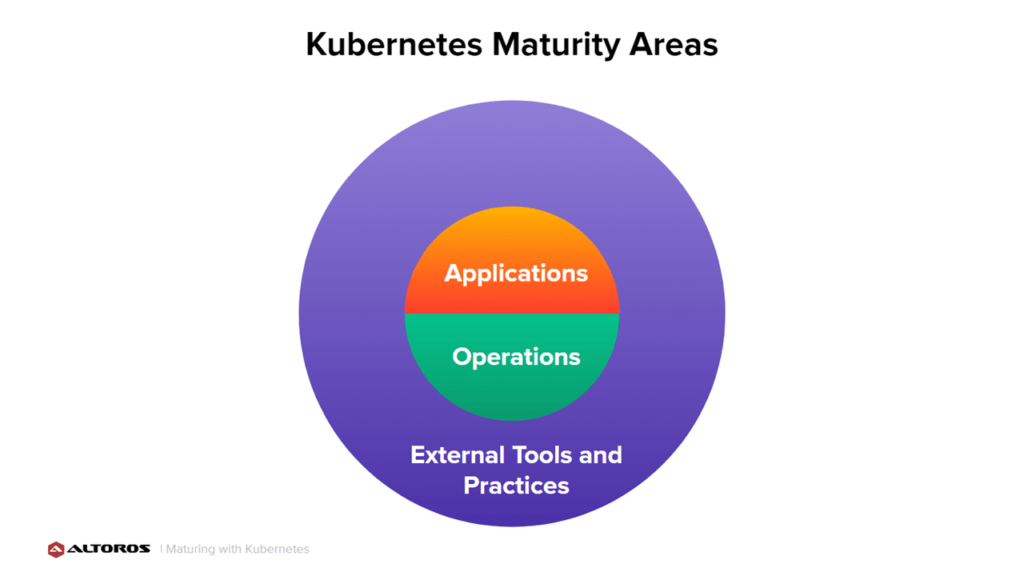

Categories to focus on to accelerate maturity (Image credit)

To improve Kubernetes deployments, organizations need to focus on three main categories: applications, operations, and external tools and practices. Barry Williams, Cloud Solutions Architect at Altoros, outlined some Kubernetes best practices during a recent webinar. He also demonstrated how an application is deployed through an automated pipeline.

“Progressing across maturity stages is about evaluating technical debt or asking—what payment will give the most bang for the buck?” —Barry Williams, Altoros

This blog post features general best practices from the webinar, as well as recommendations from Microsoft. It explains where to focus when optimizing your apps, operations, and tooling to enable automation across the entire software development life cycle.

Improving application maturity

Application maturity focuses on which Kubernetes objects and patterns are used when deploying applications. Below are some best practices under this category.

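Properly configured resource requests and limits improve cluster stability. Requests tell the scheduler how much capacity to reserve for a container, so it lands on a node that can actually run it; limits cap how much it may consume, so one application cannot starve its neighbors. The most commonly specified resources are CPU and memory.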
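As a minimal sketch, the container spec below sets requests and limits. It is written as a Python dict using the same field names as the Pod YAML; the image name and the specific values are hypothetical, and the quantity parsers handle only the few units used here (Kubernetes accepts more).

```python
def parse_cpu(quantity: str) -> float:
    """Convert a CPU quantity such as '250m' (millicores) or '1' to cores."""
    if quantity.endswith("m"):
        return int(quantity[:-1]) / 1000
    return float(quantity)


# Only the binary suffixes used below are handled in this sketch.
MEMORY_UNITS = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30}


def parse_memory(quantity: str) -> int:
    """Convert a memory quantity such as '256Mi' to bytes."""
    for suffix, factor in MEMORY_UNITS.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)  # no suffix: plain bytes


container = {
    "name": "web",
    "image": "myregistry.azurecr.io/web:1.0",  # hypothetical image
    "resources": {
        # Requests: what the scheduler reserves on a node for this container.
        "requests": {"cpu": "250m", "memory": "256Mi"},
        # Limits: the ceiling. CPU above the limit is throttled;
        # memory above the limit gets the container OOM-killed.
        "limits": {"cpu": "500m", "memory": "512Mi"},
    },
}


def requests_within_limits(spec: dict) -> bool:
    """The API server rejects a container whose request exceeds its limit."""
    req = spec["resources"]["requests"]
    lim = spec["resources"]["limits"]
    return (parse_cpu(req["cpu"]) <= parse_cpu(lim["cpu"])
            and parse_memory(req["memory"]) <= parse_memory(lim["memory"]))


print(requests_within_limits(container))  # True
```

Checking requests against limits before submitting a manifest catches a validation error early, for example in a CI step.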

Developing apps directly on Kubernetes leads to faster time to market for software features. Building on the platform itself drastically shortens the development feedback loop.

Externalizing configuration decouples application artifacts from their environments, enabling companies to test an artifact once and use it in multiple places. Non-sensitive settings go into ConfigMaps, and sensitive values such as passwords and keys go into Secrets.
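A minimal sketch of the pattern, again as Python dicts mirroring the YAML: one image, with its settings injected from a ConfigMap and a Secret. The object names, keys, and values are hypothetical.

```python
import base64


def make_configmap(name: str, data: dict) -> dict:
    """Non-sensitive settings, stored as plain strings."""
    return {
        "apiVersion": "v1",
        "kind": "ConfigMap",
        "metadata": {"name": name},
        "data": dict(data),
    }


def make_secret(name: str, data: dict) -> dict:
    """Sensitive values. The "data" field must hold base64-encoded strings;
    base64 is an encoding, not encryption."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "type": "Opaque",
        "metadata": {"name": name},
        "data": {k: base64.b64encode(v.encode()).decode() for k, v in data.items()},
    }


config = make_configmap("web-config", {"LOG_LEVEL": "info"})
secret = make_secret("web-secrets", {"DB_PASSWORD": "change-me"})

# The same image runs in every environment; only the referenced objects differ.
container = {
    "name": "web",
    "image": "myregistry.azurecr.io/web:1.0",  # hypothetical image
    "envFrom": [
        {"configMapRef": {"name": config["metadata"]["name"]}},
        {"secretRef": {"name": secret["metadata"]["name"]}},
    ],
}
```

Promoting the artifact from staging to production then means creating differently populated `web-config` and `web-secrets` objects in the target environment, not rebuilding the image.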

Health checks (probes) give Kubernetes insight into application health and let it automate recovery. For instance, a readiness probe lets Kubernetes hold back network traffic until an application is ready to receive it, and a liveness probe lets it restart a container that has stopped responding.
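The sketch below adds both probe types to a container spec (Python dict, YAML field names). The `/ready` and `/healthz` endpoints, the port, and the timing values are assumptions; the application would need to serve those paths.

```python
def http_probe(path: str, port: int, period: int = 10,
               initial_delay: int = 0, failure_threshold: int = 3) -> dict:
    """Build an HTTP GET probe; field names match the Kubernetes Probe object."""
    return {
        "httpGet": {"path": path, "port": port},
        "initialDelaySeconds": initial_delay,
        "periodSeconds": period,
        "failureThreshold": failure_threshold,
    }


container = {
    "name": "web",
    "image": "myregistry.azurecr.io/web:1.0",  # hypothetical image
    "ports": [{"containerPort": 8080}],
    # Readiness: while this fails, the pod is removed from Service endpoints,
    # so it receives no traffic, but it is not restarted.
    "readinessProbe": http_probe("/ready", 8080, period=5),
    # Liveness: after failure_threshold consecutive failures, the kubelet
    # restarts the container.
    "livenessProbe": http_probe("/healthz", 8080, initial_delay=15),
}
```

Keeping the two checks on separate endpoints lets an app report "busy, send no traffic" without triggering a restart.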

Network and security policies limit the impact of incorrect or malicious code. Network policies act like a firewall between applications. Security contexts and Pod Security Policies protect hosts and other containers. (Pod Security Policies were deprecated in Kubernetes 1.21 and removed in 1.25 in favor of Pod Security Admission.)
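Here is a minimal sketch, assuming hypothetical `app=frontend` and `app=backend` labels: a NetworkPolicy that lets only frontend pods reach the backend on port 8080, plus a restrictive container security context. The `ingress_allowed` helper is a deliberately simplified model of the policy semantics (one policy, pod-selector peers in a single namespace), not a full implementation. Note that network policies are enforced only if the cluster runs a network policy engine; on AKS, that is selected when the cluster is created (for example, Azure Network Policy Manager or Calico).

```python
network_policy = {
    "apiVersion": "networking.k8s.io/v1",
    "kind": "NetworkPolicy",
    "metadata": {"name": "backend-allow-frontend"},
    "spec": {
        # The policy applies to (isolates) pods labeled app=backend.
        "podSelector": {"matchLabels": {"app": "backend"}},
        "policyTypes": ["Ingress"],
        "ingress": [{
            "from": [{"podSelector": {"matchLabels": {"app": "frontend"}}}],
            "ports": [{"protocol": "TCP", "port": 8080}],
        }],
    },
}

# Container-level security context: no root, no privilege escalation,
# immutable root filesystem, and no Linux capabilities.
container_security_context = {
    "runAsNonRoot": True,
    "allowPrivilegeEscalation": False,
    "readOnlyRootFilesystem": True,
    "capabilities": {"drop": ["ALL"]},
}


def matches(selector: dict, labels: dict) -> bool:
    return all(labels.get(k) == v for k, v in selector.get("matchLabels", {}).items())


def ingress_allowed(policy: dict, src: dict, dst: dict, port: int) -> bool:
    """Simplified model: a single policy, pod-selector peers in one namespace, TCP only."""
    spec = policy["spec"]
    if not matches(spec["podSelector"], dst):
        return True  # pod not selected, so this policy does not isolate it
    for rule in spec.get("ingress", []):
        peers, ports = rule.get("from", []), rule.get("ports", [])
        # An empty "from" or "ports" list means "any" in Kubernetes.
        peer_ok = not peers or any(matches(p["podSelector"], src) for p in peers)
        port_ok = not ports or any(p["port"] == port for p in ports)
        if peer_ok and port_ok:
            return True
    return False
```

For example, `ingress_allowed(network_policy, {"app": "frontend"}, {"app": "backend"}, 8080)` is `True`, while the same call from a pod labeled `app=batch` is `False`.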

You can read more recommendations for resource management and pod security in Microsoft’s official documentation.

Enhancing operations maturity

The second category, operations maturity, focuses on how Kubernetes is deployed and maintained. The following recommendations apply to this category.

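Scheduling optimization makes running apps safer. Resource quotas cap the total resources an entire business application (typically one namespace) can consume. Pod disruption budgets keep enough replicas running to handle the load during voluntary disruptions, such as node drains during upgrades. Taints and tolerations keep application workloads apart, for example by reserving nodes for specific workloads, when needed.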
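A minimal sketch of all three mechanisms, as Python dicts with YAML field names. The `team-a` namespace, the `app=web` label, the `workload=batch` taint and node label, and all numbers are hypothetical. `disruptions_allowed` reproduces the basic arithmetic behind a budget with an integer `minAvailable`.

```python
# Caps the combined requests/limits and pod count for everything in team-a.
resource_quota = {
    "apiVersion": "v1",
    "kind": "ResourceQuota",
    "metadata": {"name": "team-a-quota", "namespace": "team-a"},
    "spec": {"hard": {
        "requests.cpu": "4", "requests.memory": "8Gi",
        "limits.cpu": "8", "limits.memory": "16Gi",
        "pods": "20",
    }},
}

# Keeps at least two app=web pods running during voluntary disruptions.
pdb = {
    "apiVersion": "policy/v1",
    "kind": "PodDisruptionBudget",
    "metadata": {"name": "web-pdb", "namespace": "team-a"},
    "spec": {"minAvailable": 2, "selector": {"matchLabels": {"app": "web"}}},
}


def disruptions_allowed(budget: dict, healthy_pods: int) -> int:
    """How many pods may be evicted right now (integer minAvailable only)."""
    return max(0, healthy_pods - budget["spec"]["minAvailable"])


# Nodes tainted with, for example:
#   kubectl taint nodes <node> workload=batch:NoSchedule
# repel pods that lack this toleration.
toleration = {"key": "workload", "operator": "Equal",
              "value": "batch", "effect": "NoSchedule"}

# A toleration only *permits* scheduling on tainted nodes; the nodeSelector
# (assuming the nodes also carry a workload=batch label) *requires* it.
batch_pod_spec_fragment = {
    "tolerations": [toleration],
    "nodeSelector": {"workload": "batch"},
}
```

With three healthy web pods, a node drain may evict one of them; with two, eviction is blocked until a replacement becomes ready.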

Container image management governs application artifacts. It involves scanning images for Common Vulnerabilities and Exposures (CVEs), hardening the environment, applying up-to-date security patches, and automatically rebuilding images when their base images are updated.

Authentication and authorization secure access to the Kubernetes API. Use service accounts as identities for workloads, and use role-based access control (RBAC) Roles and RoleBindings to grant permissions to Kubernetes users, groups, and service accounts.
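A minimal sketch of least-privilege RBAC: a namespaced Role that can only read pods and their logs, bound to a service account. The `team-a` namespace and `ci-runner` service account are hypothetical, and the `allows` helper ignores wildcards and API groups to keep the illustration short.

```python
RBAC_API = "rbac.authorization.k8s.io"


def make_role(name, namespace, resources, verbs):
    """A namespaced Role granting `verbs` on core-API `resources`."""
    return {
        "apiVersion": f"{RBAC_API}/v1",
        "kind": "Role",
        "metadata": {"name": name, "namespace": namespace},
        "rules": [{"apiGroups": [""], "resources": list(resources), "verbs": list(verbs)}],
    }


def make_role_binding(role, kind, subject_name):
    """Bind `role` to a User, Group, or ServiceAccount in the role's namespace."""
    namespace = role["metadata"]["namespace"]
    subject = {"kind": kind, "name": subject_name}
    if kind == "ServiceAccount":
        subject["namespace"] = namespace  # service accounts are namespaced
    else:
        subject["apiGroup"] = RBAC_API  # users and groups use the RBAC API group
    return {
        "apiVersion": f"{RBAC_API}/v1",
        "kind": "RoleBinding",
        "metadata": {"name": f"{role['metadata']['name']}-{subject_name}",
                     "namespace": namespace},
        "roleRef": {"apiGroup": RBAC_API, "kind": "Role", "name": role["metadata"]["name"]},
        "subjects": [subject],
    }


def allows(role, verb, resource):
    """RBAC is additive: access exists only if some rule lists both the verb
    and the resource (wildcards are not modeled here)."""
    return any(verb in r["verbs"] and resource in r["resources"] for r in role["rules"])


pod_reader = make_role("pod-reader", "team-a", ["pods", "pods/log"], ["get", "list", "watch"])
binding = make_role_binding(pod_reader, "ServiceAccount", "ci-runner")
```

Because nothing is granted by default, the `ci-runner` account can read pods in `team-a` but cannot delete them or touch any other namespace.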

Cluster management involves adopting the latest platform features and stability techniques. These include automated cluster upgrades, cluster autoscaling, high availability for both the platform and applications, and disaster recovery and business continuity. Microsoft’s documentation also recommends the following to improve cluster management:

- Strengthen networking by choosing the appropriate network model (kubenet or Azure CNI), using ingress controllers and web application firewalls, and securing SSH access to nodes.

- Optimize storage by choosing the appropriate storage type and node size, and enable dynamic volume provisioning and data backups.

- Improve business continuity and disaster recovery through region pairs and geo-replication of container images.
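Dynamic provisioning, mentioned in the storage item above, works through a PersistentVolumeClaim that names a storage class; the class then creates the underlying disk on demand. A minimal sketch as a Python dict follows. The claim name and size are hypothetical, and `managed-csi` is assumed to be one of the built-in AKS storage classes; verify the classes in your cluster with `kubectl get storageclass`.

```python
def make_pvc(name: str, size: str, storage_class: str = "managed-csi",
             access_mode: str = "ReadWriteOnce") -> dict:
    """A claim that asks the storage class to provision a volume on demand.

    "managed-csi" is an assumed AKS built-in class backed by Azure Disks.
    Azure Disks are typically mounted ReadWriteOnce; for ReadWriteMany,
    Azure Files is the usual choice.
    """
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": [access_mode],
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


data_claim = make_pvc("orders-data", "32Gi")  # hypothetical claim
```

No volume has to be created by hand; a pod that mounts `orders-data` gets a disk provisioned for it.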

Using external tools and practices

The third category, external tools and practices, covers everything besides apps and operations that supports a Kubernetes deployment. This includes continuous integration (CI), continuous delivery (CD), testing, and external services. The following best practices apply to this category.

Be specific about tools. Kubernetes is agnostic to languages and frameworks, so pick tools that effectively express the desired software features and enable rapid iteration.

Use microservices to split apps into a distributed system. This way, application components can be scaled and managed independently of one another.

Test automation is crucial to achieving delivery velocity. Be sure to incorporate unit, integration, performance, and smoke tests.

Build a CI/CD pipeline. In CI, artifacts are built and tested. Because the bulk of testing happens in CI, it acts as the gateway that only viable features pass through. CD is the fully automated delivery of software and platform features from code commit to production.
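The gating idea can be sketched in a few lines. This is a toy model, not any particular CI server's API: each hypothetical stage is a function returning `True` on success, and the first failure stops the run so that deployment never happens.

```python
from typing import Callable, List, Optional, Tuple

Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> Tuple[List[str], Optional[str]]:
    """Run stages in order and stop at the first failure.

    Returns the names of the stages that passed and the name of the stage
    that failed (None if every stage passed). Later stages, including
    deployment, never run after a failure.
    """
    passed: List[str] = []
    for name, step in stages:
        if not step():
            return passed, name
        passed.append(name)
    return passed, None


deployed: List[str] = []


def deploy() -> bool:
    deployed.append("web:1.1")  # hypothetical image tag
    return True


stages: List[Stage] = [
    ("build image", lambda: True),
    ("unit tests", lambda: True),
    ("integration tests", lambda: False),  # simulate a failing test suite
    ("deploy", deploy),
]

passed, failed = run_pipeline(stages)
print(passed, failed)  # ['build image', 'unit tests'] integration tests
```

Here the failing integration suite stops the run before `deploy` is called, so `deployed` stays empty.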

Increase observability to gain insight into both the apps and the platform, using tools for monitoring, logging, tracing, and topology mapping.

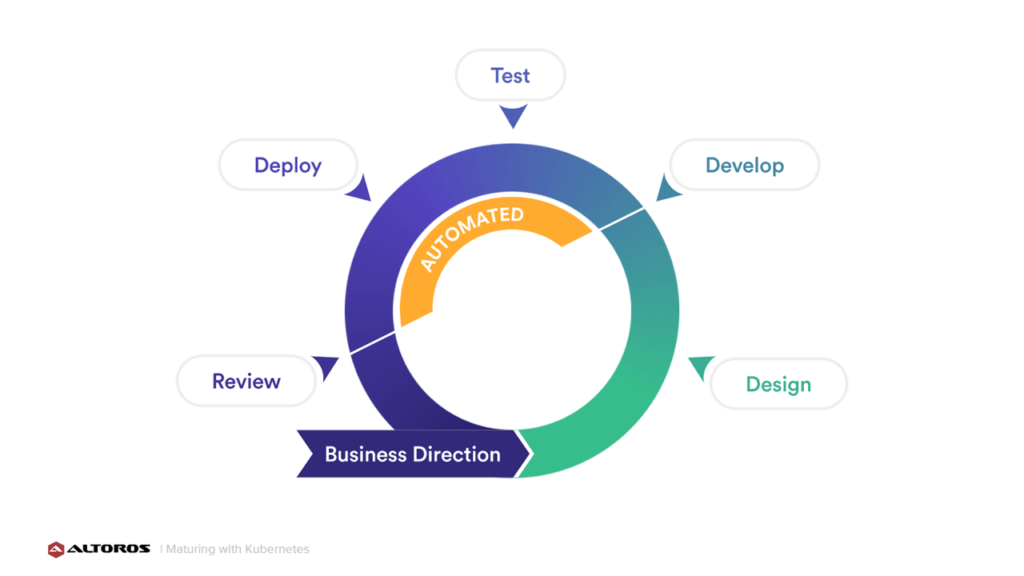

Automation of multiple processes at the expert level (Image credit)

“To better understand what can be improved, we need to identify the activities involved in delivering software value. Let’s start with the software development life cycle. Business directions are communicated and translated into a design. This design feeds software development. This software is then tested and deployed. Metrics from the deployment are used to guide business decisions and further software improvements. Then, the process cycles. A company with an expert level of maturity will automate these processes as much as possible.” —Barry Williams, Altoros

Microsoft-specific recommendations

In addition to the recommendations listed above, organizations looking to accelerate their Kubernetes deployments should also adopt best practices suggested by service providers. With microservices becoming a popular architectural style for building apps, Microsoft suggests the following structured approach:

- Model microservices using domain analysis.

- Design domain-driven microservices.

- Identify microservices boundaries.

Because a microservices-based architecture is distributed, a CI/CD pipeline is key to maintaining robust operations. According to Microsoft’s official documentation, a CI/CD pipeline can enable:

- teams to build and deploy the services they own without affecting other teams

- developer environments for testing new versions of services before they are deployed to production

- new versions of services to be deployed alongside previous versions

- proper configuration of access control policies

- safe deployment of container images

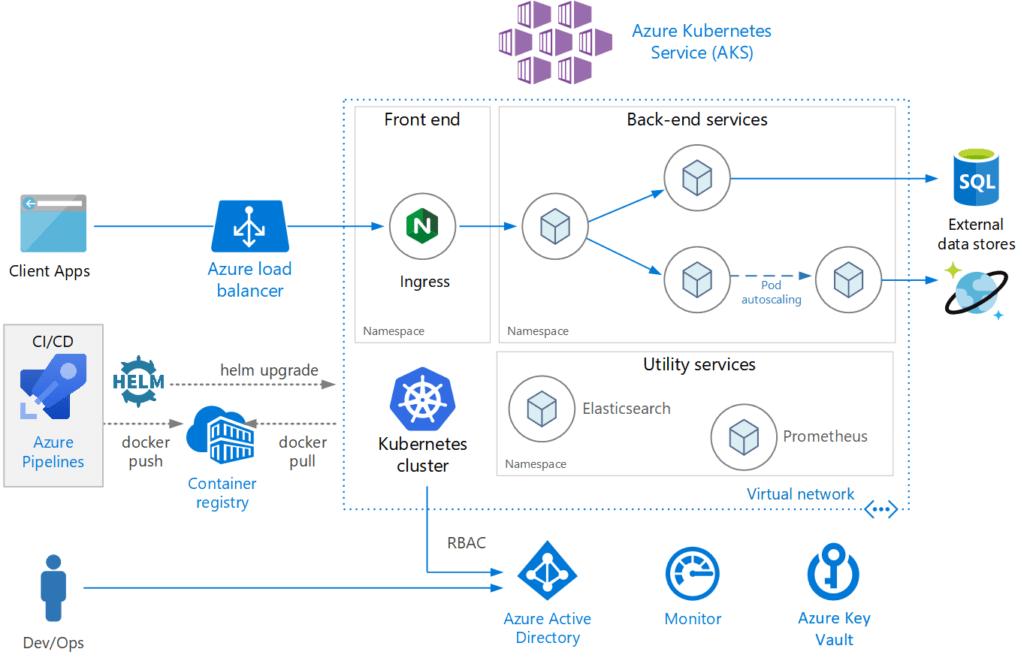

An example of a microservices deployment (Image credit)

Even with a CI/CD pipeline in place, a microservices-based architecture requires keeping track of everything happening across all services. To do so, Microsoft recommends collecting logs and metrics from each service. Logs are useful for troubleshooting and finding root causes, whereas metrics are good for observing the system in real time and monitoring trends.

In AKS, both logs and metrics can be collected with Azure Monitor, which gathers telemetry from microservices and other sources, including applications, operating systems, and the platform itself.
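On the application side, one common way to make logs easy to collect is to write structured JSON lines to stdout, which the container runtime captures and Azure Monitor Container insights (when enabled) ingests into Log Analytics. The sketch below uses only Python's standard `logging` module; the field names and the `orders` service name are assumptions, not a schema Azure Monitor requires.

```python
import json
import logging
import sys


class JsonFormatter(logging.Formatter):
    """Render each record as one JSON object per line."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "time": self.formatTime(record, "%Y-%m-%dT%H:%M:%S"),
            "level": record.levelname,
            "logger": record.name,
            "service": getattr(record, "service", "unknown"),
            "message": record.getMessage(),
        }
        if record.exc_info:
            entry["exception"] = self.formatException(record.exc_info)
        return json.dumps(entry)


def make_logger(service: str) -> logging.LoggerAdapter:
    """Log to stdout, where the container runtime (and Container insights) picks it up."""
    handler = logging.StreamHandler(sys.stdout)
    handler.setFormatter(JsonFormatter())
    logger = logging.getLogger(service)
    logger.handlers = [handler]
    logger.setLevel(logging.INFO)
    logger.propagate = False
    # LoggerAdapter adds the service name to every record as an extra attribute.
    return logging.LoggerAdapter(logger, {"service": service})


log = make_logger("orders")  # hypothetical service name
log.info("order created")
```

One JSON object per line keeps each entry self-describing, so fields such as `service` and `level` can be filtered in queries instead of being extracted from free text.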

See this in action

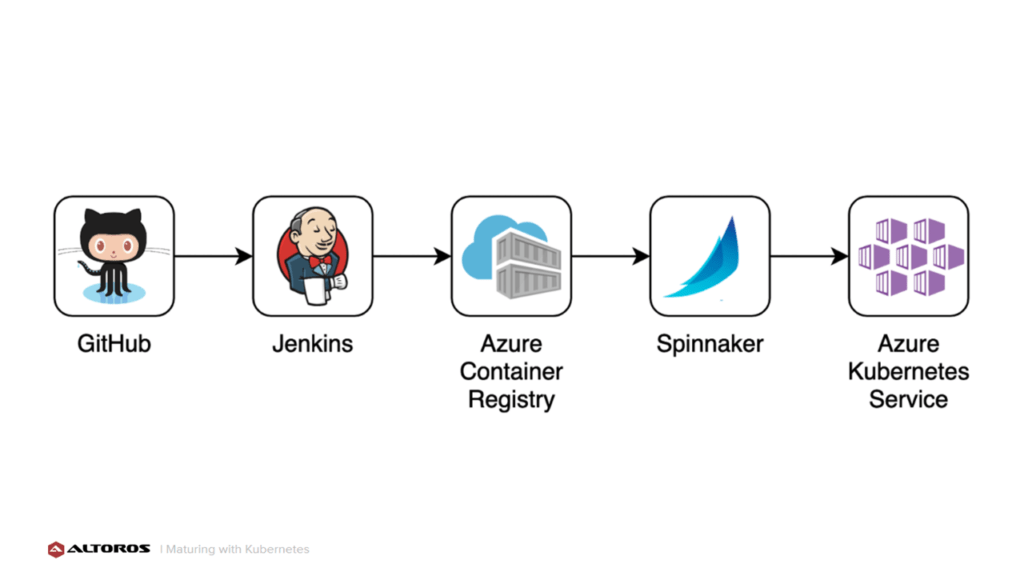

To better understand the value of applying Kubernetes best practices, Barry prepared a demonstration involving app deployment with an automated CI/CD pipeline. The workflow:

- A GitHub pull request brings in new features.

- Jenkins builds a Docker image and pushes it to Azure Container Registry.

- Spinnaker detects the new image and deploys it to AKS.

A workflow of the demo scenario (Image credit)

Different pull requests simulated various scenarios to show how automation protects the production environment from incorrect code. Barry demonstrated how Jenkins detects errors during testing and stops the deployment. If an error slips past testing and surfaces only in production, Spinnaker catches the failure and triggers a rollback.
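The post does not describe how Spinnaker decides that a release has failed, so the following is only a toy stand-in for the idea of automated rollback, not Spinnaker's logic: after a deployment, compare the observed error rate with a threshold and fall back to the previous image if it is exceeded. The image tags, traffic numbers, and the 1% threshold are all hypothetical. Outside a pipeline, the manual equivalent on Kubernetes is `kubectl rollout undo deployment/<name>`.

```python
from typing import Optional, Tuple


def error_rate(errors: int, requests: int) -> float:
    return errors / requests if requests else 0.0


def verify_or_rollback(current: str, previous: Optional[str],
                       errors: int, requests: int,
                       max_error_rate: float = 0.01) -> Tuple[str, bool]:
    """Keep the new image if its post-deployment error rate is acceptable;
    otherwise fall back to the previous image, if there is one.
    Returns (image_to_run, rolled_back)."""
    if error_rate(errors, requests) > max_error_rate and previous is not None:
        return previous, True
    return current, False


# Hypothetical image tags and traffic numbers.
print(verify_or_rollback("web:1.1", "web:1.0", errors=42, requests=1000))  # ('web:1.0', True)
print(verify_or_rollback("web:1.1", "web:1.0", errors=3, requests=1000))   # ('web:1.1', False)
```

Real deployment tools typically compare a richer set of metrics, often against a baseline, over a time window, but the decision has the same shape.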

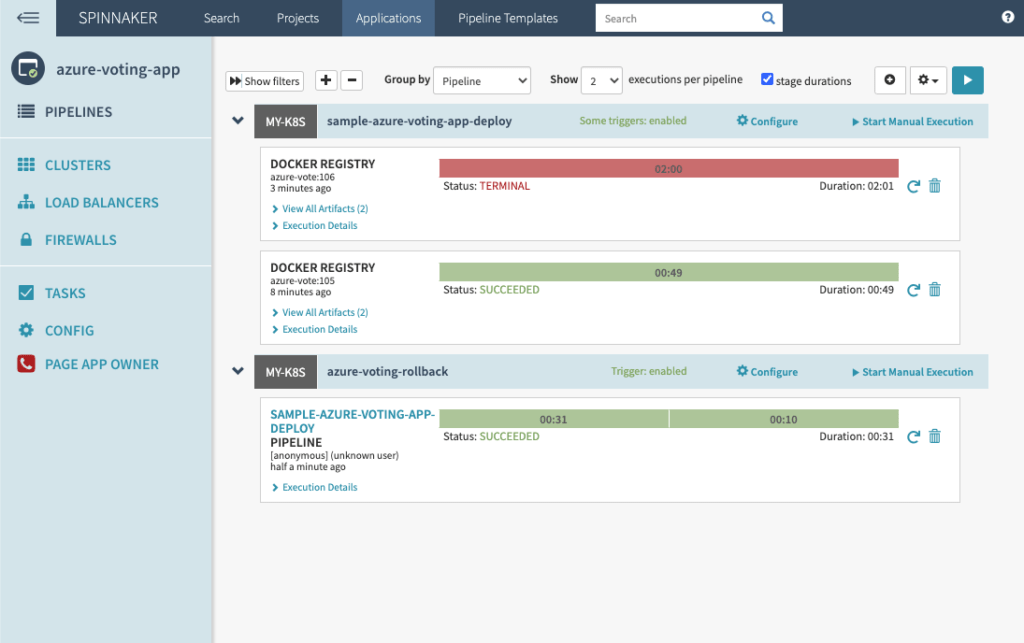

Spinnaker catching the failure in production and rolling back

With an automated pipeline, the production environment remained up and stable even during pull requests that introduced errors. By adopting best practices (in this case, a robust CI/CD pipeline), organizations can ensure safe rollouts without downtime.

“Being an expert at Kubernetes means the automation of all aspects of software delivery and testing. Ideally, companies should approach maturity from the point of view of a technical debt. Focus on things that bring the largest business value.” —Barry Williams, Altoros

Want details? Watch the webinar or read more about Kubernetes maturity evaluation.


Further reading

- Maturity of App Deployments on Kubernetes: From Manual to Automated Ops

- Automating Event-Based Continuous Delivery on Kubernetes with keptn

- Running a Cloud Foundry Spring Boot App on Azure Kubernetes Service (AKS)

About the expert

Barry Williams is a Cloud Solutions Architect at Altoros. He has designed and implemented architectures for microservice applications. Barry focuses on automating everything and on cloud platforms. His areas of expertise include Kubernetes, Cloud Foundry, Docker, Linux, Concourse CI, ELK Stack, shell scripting, Python, Java, Go, Ruby, Agile, and Extreme Programming.