How to Omit Inaccuracy in Receiving Position and Distance Data from GPS

Too many records mean too much traffic

It’s no secret that there are natural inaccuracies in computing objects’ coordinates through GPS. When a receiver stays in a single location for a certain period of time, the system detects a set of different coordinates that lie within 5 to 100 meters from each other, instead of detecting the same coordinate. If this data is sent and saved in the database, it will cause redundancy and inaccuracy.

For example, it may seem that a truck is moving, while in reality it is standing still. As a result, the system will rack up mileage that was never driven. We faced this challenge when developing a GPS-based route-tracking module for a logistics and warehousing application. We'd like to share the solution we found to give you ideas on how to prevent major problems with GPS navigation on Pocket PC devices.

Imagine that a truck is parked overnight, and the driver does not turn off the Pocket PC device with GPS that sends its coordinates to the server. If the truck stays in a parking lot for 10 hours, and its position is calculated every second, the server will receive 36,000 records overnight! This causes data redundancy, traffic overload, and higher total cost. If a record requires at least a dozen kilobytes, the irrelevant data sent overnight can occupy hundreds of megabytes.
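To make the numbers concrete, here is a back-of-the-envelope calculation. The 12 KB record size is our reading of "a dozen kilobytes", and the function name is made up for this illustration.

```python
# Rough estimate of the overnight load produced by a parked truck.
HOURS_PARKED = 10
FIXES_PER_SECOND = 1
RECORD_SIZE_KB = 12  # assumption: "a dozen kilobytes" per record


def overnight_load(hours, fixes_per_second, record_kb):
    """Return (record_count, total_megabytes) for a stationary device."""
    records = hours * 3600 * fixes_per_second
    total_mb = records * record_kb / 1024
    return records, total_mb


records, total_mb = overnight_load(HOURS_PARKED, FIXES_PER_SECOND, RECORD_SIZE_KB)
print(f"{records} records, about {total_mb:.0f} MB")
```

With these figures, a single parked device produces 36,000 records and roughly 420 MB of data that carries no useful information.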

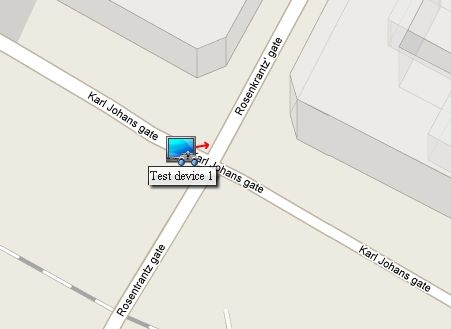

Locating a GPS device


Solution #1: Narrowing down time and distance

At first, we introduced two parameters for position calculation: time and distance. The system was made to ignore any position change of less than 50 meters, and the position was polled every 5 seconds. Both values are configurable, so the level of accuracy can be adjusted. If the position had changed by more than 50 meters since the last record, the device sent a new record to the server. If it had changed by less, the device held off until the required 50 meters had been covered. This did help to compute coordinates with a given accuracy.
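The time and distance check described above could be sketched roughly as follows. This is not the original Pocket PC code: the `DistanceFilter` class, its method names, and the use of the haversine formula for the distance between two fixes are all assumptions made for illustration.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in meters


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two lat/lon points (degrees)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(min(1.0, math.sqrt(a)))


class DistanceFilter:
    """Hypothetical filter: poll every few seconds, send only after real movement."""

    def __init__(self, min_distance_m=50.0, poll_interval_s=5.0):
        self.min_distance_m = min_distance_m    # configurable distance threshold
        self.poll_interval_s = poll_interval_s  # configurable polling interval
        self.last_sent = None                   # (lat, lon) of the last record sent
        self.last_poll = None                   # time of the last accepted poll

    def should_send(self, t, lat, lon):
        """Return True if the fix taken at time t (seconds) should go to the server."""
        if self.last_poll is not None and t - self.last_poll < self.poll_interval_s:
            return False  # too soon since the previous poll
        self.last_poll = t
        if self.last_sent is None:
            self.last_sent = (lat, lon)  # always send the very first fix
            return True
        if haversine_m(*self.last_sent, lat, lon) > self.min_distance_m:
            self.last_sent = (lat, lon)
            return True
        return False  # still within the threshold of the last sent position
```

Note that the distance is measured from the last position that was sent, not from the previous poll, so slow movement still produces a record once 50 meters have accumulated.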

This solution worked, but we still needed a more precise calculation of the distance covered by the vehicle. We now knew how to avoid inaccuracies in computing an object's coordinates, but would that be enough to eliminate data redundancy and make our customer happy?

We decided to test the device and left it turned on in the office overnight. We couldn't help crying out loud the next morning when we walked into the office and looked at the device: it had logged over 19 kilometers while just lying on the windowsill. We could not be satisfied with these test results, because the application required not only accurate coordinates but also a precise calculation of the distance covered by a vehicle. When signal reception was poor, inaccurate data was still being sent, so we needed to apply some other technique to calculate distance properly.

Solution #2: Position dilution of precision

As you may know, one of the parameters for GPS navigation is Position Dilution of Precision (PDOP). This is a geometric effect resulting from satellites being too close to each other. When the angle between satellites is narrow, the area within which the receiver lies is greater than when the satellites are well spaced. We decided to use it along with the other parameters to improve data accuracy.

Since the satellites move across the sky relative to the user, the PDOP is always changing. The PDOP value varies from 1 to 50. With our device, if PDOP equals 1, the position can be calculated to within 5 meters. If PDOP equals 2, the accuracy is 10 meters, and so on. As you can see, a lower PDOP is better. We configured the GPS module so that it ignores position changes if PDOP is greater than 8. Along with the two other parameters, this drastically improved data accuracy.
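A PDOP check of this kind might look like the sketch below. The threshold of 8 and the 5 meters per PDOP unit come from the figures above; the `Fix` type, the function names, the sample fixes, and the assumption that accuracy keeps scaling linearly with PDOP are ours.

```python
from dataclasses import dataclass

MAX_PDOP = 8.0         # fixes with a higher PDOP are ignored
METERS_PER_PDOP = 5.0  # PDOP 1 -> 5 m, PDOP 2 -> 10 m (assumed linear beyond that)


@dataclass
class Fix:
    """Hypothetical container for one GPS reading."""
    lat: float
    lon: float
    pdop: float


def estimated_accuracy_m(pdop: float) -> float:
    """Approximate position accuracy in meters for a given PDOP."""
    return METERS_PER_PDOP * pdop


def is_usable(fix: Fix, max_pdop: float = MAX_PDOP) -> bool:
    """Keep a fix only when satellite geometry is good enough."""
    return fix.pdop <= max_pdop


# Made-up sample readings: the middle one has poor geometry and is dropped.
fixes = [Fix(50.0, 30.0, 1.5), Fix(50.0003, 30.0004, 12.0), Fix(50.0, 30.0, 2.0)]
usable = [f for f in fixes if is_usable(f)]
for f in usable:
    print(f"PDOP {f.pdop}: accurate to roughly {estimated_accuracy_m(f.pdop):.1f} m")
```

Running the PDOP check before the distance comparison means a poor-quality fix never gets the chance to look like 50 meters of movement.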

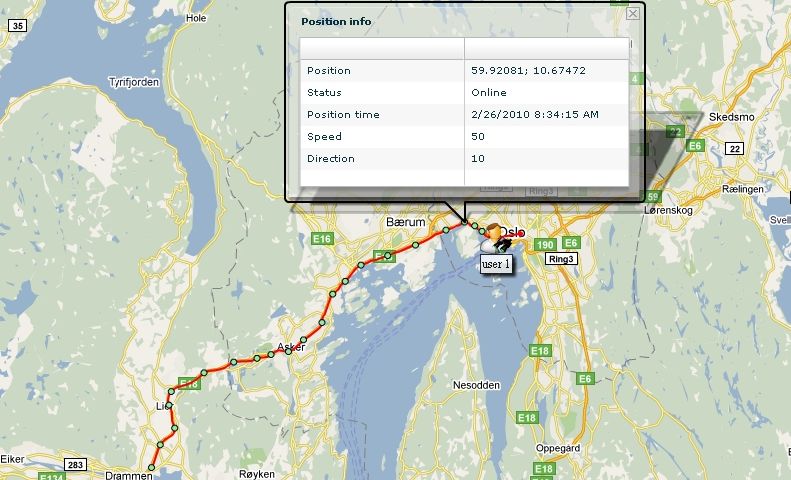

Sample position information

The GPS device now sends only relevant data, and when the reception is good, there are no inaccuracies at all. We tested it again: this time, only one position was recorded to the database while the receiver stayed in one place. For logistics and warehousing companies, it means that their databases are not overloaded with redundant data. It helps to save resources, ensure proper system operation, and cut down maintenance costs.