Using Toshiba’s KumoScale Flash-as-a-Service Storage on Kubernetes

Storage for StatefulSets

StatefulSet workloads in Kubernetes require persistent storage, which is provided by PersistentVolumes. As pods are rescheduled or restarted, their connections to PersistentVolumes migrate with them so that data and configuration stay intact.
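To make the link between StatefulSet pods and their storage concrete, here is a minimal sketch in Python that builds a StatefulSet manifest with one `volumeClaimTemplates` entry and lists the claim names Kubernetes would derive for each replica. The StorageClass name `example-nvmeof`, the container image tag, and the mount path are assumptions made for illustration and do not come from the talk.

```python
import json

# Hypothetical values for illustration only; none of them come from the talk.
STORAGE_CLASS = "example-nvmeof"  # assumed StorageClass name
IMAGE = "cassandra:4.1"           # assumed container image


def statefulset_manifest(name: str, replicas: int, storage_class: str, size: str) -> dict:
    """Build a minimal apps/v1 StatefulSet with a single volumeClaimTemplate."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "StatefulSet",
        "metadata": {"name": name},
        "spec": {
            "serviceName": name,
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": IMAGE,
                        # Mount the per-pod volume declared in volumeClaimTemplates.
                        "volumeMounts": [{"name": "data", "mountPath": "/var/lib/cassandra"}],
                    }]
                },
            },
            "volumeClaimTemplates": [{
                "metadata": {"name": "data"},
                "spec": {
                    "accessModes": ["ReadWriteOnce"],
                    "storageClassName": storage_class,
                    "resources": {"requests": {"storage": size}},
                },
            }],
        },
    }


def claim_names(manifest: dict) -> list[str]:
    """Return the PVC names Kubernetes derives: <template>-<statefulset>-<ordinal>."""
    name = manifest["metadata"]["name"]
    replicas = manifest["spec"]["replicas"]
    templates = manifest["spec"]["volumeClaimTemplates"]
    return [
        f"{t['metadata']['name']}-{name}-{ordinal}"
        for t in templates
        for ordinal in range(replicas)
    ]


if __name__ == "__main__":
    manifest = statefulset_manifest("cassandra", 3, STORAGE_CLASS, "10Gi")
    print(json.dumps(manifest, indent=2))
    print(claim_names(manifest))
```

Because each claim name is tied to a pod's ordinal, a rescheduled pod such as `cassandra-1` binds to the same claim (`data-cassandra-1`) wherever it lands.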

Storage for PersistentVolumes can be provided by either local or network drives. Local drives generally perform better, while network drives make it easier to move and scale storage. Kubernetes also has a limitation: it offers no dynamic provisioning for local storage, and without dynamic provisioning, pods cannot request storage on demand.

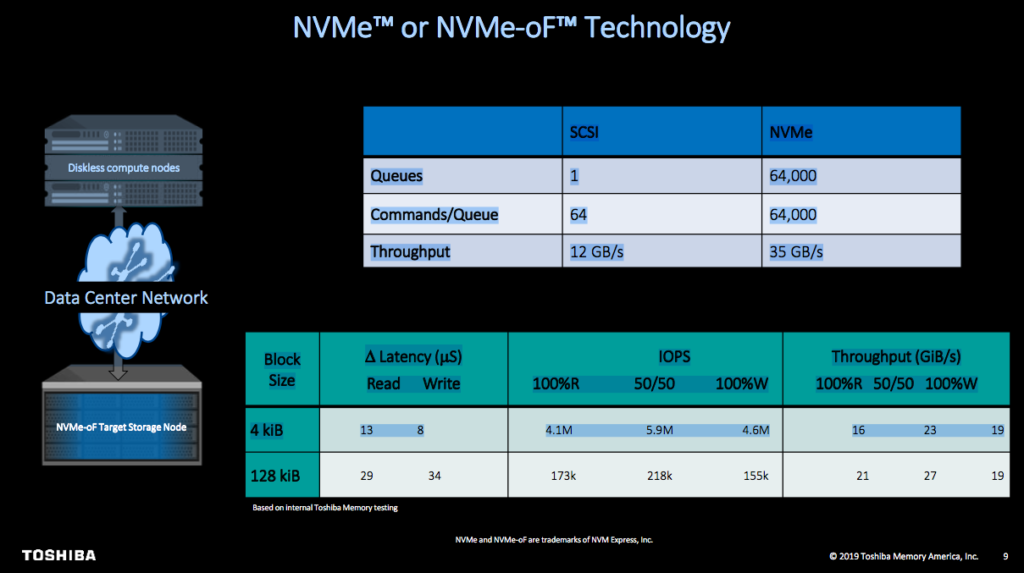

Kubernetes deployments typically use cloud storage, meaning most pods rely on network storage at the cost of performance. However, with non-volatile memory express (NVMe) storage, the performance loss over a network is negligible.

NVMe storage performance over a network (Image credit)

At a recent Kubernetes meetup, Amarjit Singh of Toshiba explained how to use NVMe storage via the Kubernetes container storage interface (CSI) driver to get the best of both types of storage, along with dynamic provisioning.

“With NVMe over the network, you get performance of a local storage and the flexibility of a remote storage. That’s what you need with StatefulSets.”

—Amarjit Singh, Toshiba

Accelerating with KumoScale NVMe-oF

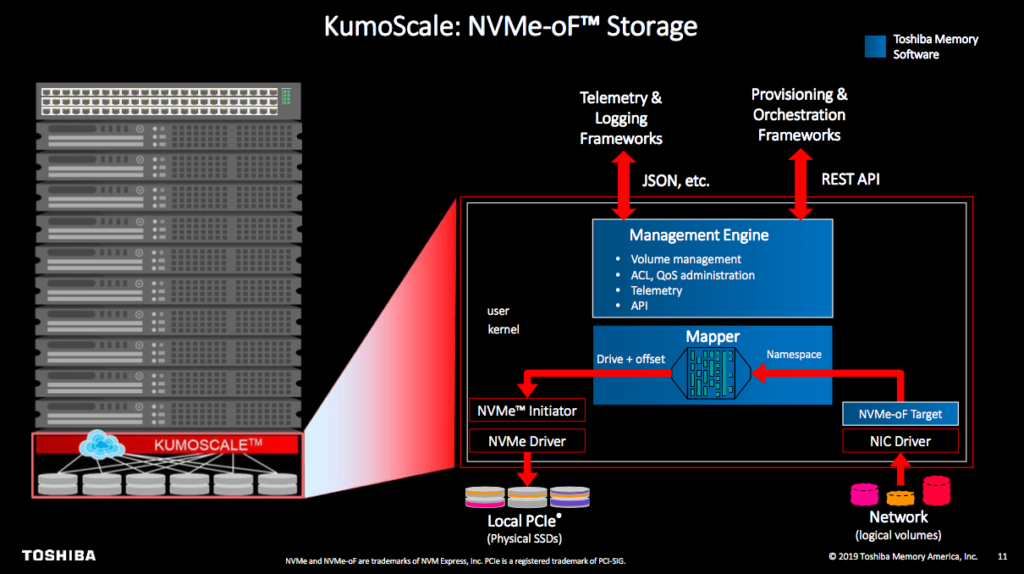

KumoScale NVMe-oF is Toshiba’s software for implementing networked block storage services. Based on the NVMe over Fabrics standard, KumoScale NVMe-oF optimizes the performance of NVMe drives over a network through disaggregation.

The KumoScale architecture (Image credit)

“KumoScale provides storage over the network to multiple hosts. In this case, we used the CSI driver to connect KumoScale to the Kubernetes pods.”

—Amarjit Singh, Toshiba

With CSI, a plug-in can be written for any storage system so that it can back PersistentVolumes. Connecting NVMe storage through a CSI driver lets StatefulSet workloads in Kubernetes gain the benefits of both local and network storage.

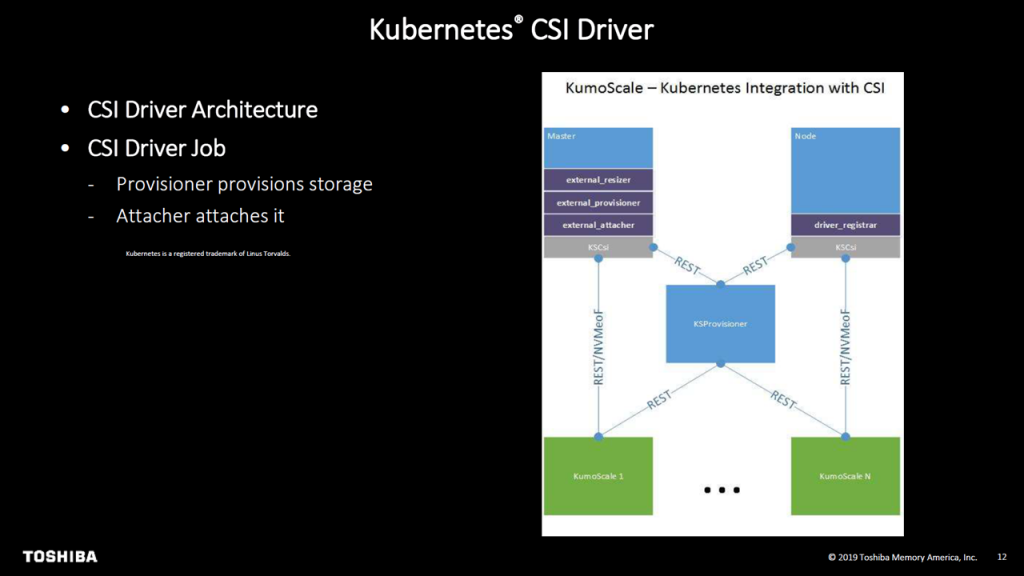

Accessing NVMe from Kubernetes via the CSI driver

The Container Storage Interface (CSI) is a standard for exposing arbitrary block and file storage systems to containerized workloads. It enables users to write and deploy their own storage plug-ins (CSI drivers) in Kubernetes.

Amarjit briefly described one of Toshiba’s test implementations, which used KumoScale NVMe over Fabrics (NVMe-oF) with the CSI driver. “The CSI driver in Kubernetes talks to the central provisioner, which then provisions storage on the network. This storage is then assigned to the pods,” he noted. “This all happens over the REST API commands.”

The Kubernetes CSI driver architecture (Image credit)

Once a CSI storage plug-in is created, users can enable dynamic provisioning by creating a StorageClass that points to the plug-in.
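As a rough illustration of a StorageClass pointing to a plug-in, the Python sketch below builds a StorageClass and a PersistentVolumeClaim that requests storage from it, then emits both as a single JSON list. The provisioner name `csi.example.com`, the class name `fast-nvme`, and the claim name `data` are placeholders, not the names used by KumoScale or shown in the talk; a real CSI plug-in registers its own provisioner name.

```python
import json

# Hypothetical provisioner name; a real CSI plug-in registers its own.
PROVISIONER = "csi.example.com"


def storage_class(name: str, provisioner: str) -> dict:
    """Build a StorageClass whose `provisioner` field selects the CSI plug-in."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": provisioner,
        "reclaimPolicy": "Delete",
        "volumeBindingMode": "Immediate",
    }


def volume_claim(name: str, storage_class_name: str, size: str) -> dict:
    """Build a PersistentVolumeClaim that asks the given class for storage."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": storage_class_name,
            "resources": {"requests": {"storage": size}},
        },
    }


def as_kubectl_list(*objects: dict) -> str:
    """Wrap the objects in a v1 List so they can be applied in one step."""
    return json.dumps({"apiVersion": "v1", "kind": "List", "items": list(objects)}, indent=2)


if __name__ == "__main__":
    sc = storage_class("fast-nvme", PROVISIONER)
    pvc = volume_claim("data", sc["metadata"]["name"], "10Gi")
    print(as_kubectl_list(sc, pvc))
```

Since `kubectl` accepts JSON as well as YAML, the output could be piped to `kubectl apply -f -`. Creating the claim is what triggers dynamic provisioning: Kubernetes looks up the class named in `storageClassName` and asks that class's provisioner to create a volume.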

“The CSI driver is a standard which anyone can use to write a driver for. At the high level, it talks to the storage in the back end and attaches it to the pods.” —Amarjit Singh, Toshiba

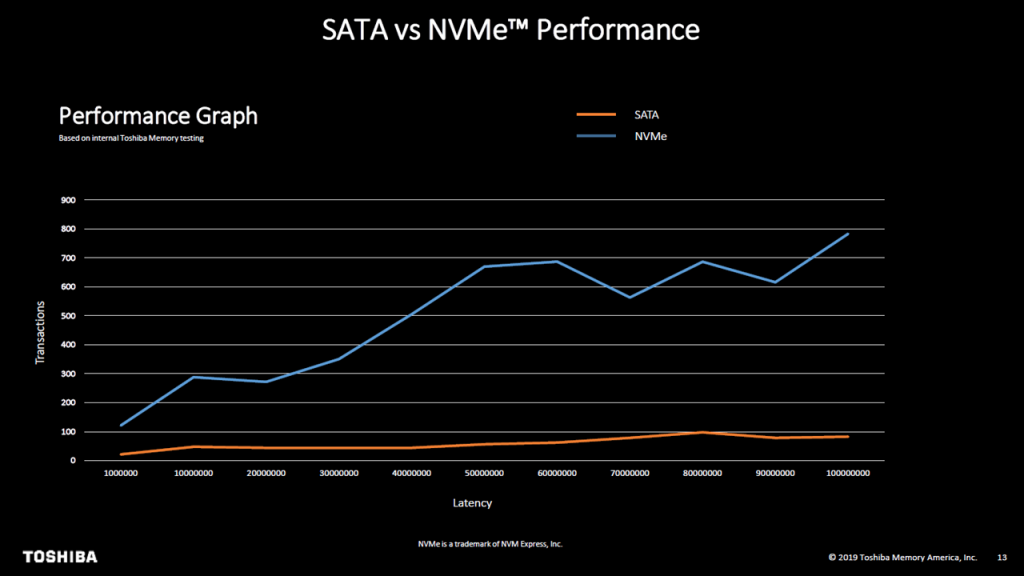

Amarjit also showed the results of Toshiba’s internal tests comparing local SATA drives with NVMe over a network. The tests measured the performance of the two storage types when reading and writing 100,000,000 transactions in Cassandra.

SATA and NVMe performance comparison (Image credit)

According to Amarjit, “local SATA drives will experience a performance degradation, while network-based NVMe drives do not degrade. If you have multiple StatefulSet applications, they get the same performance with NVMe.”

Want details? Watch the video!

- What are StatefulSets? (1’33”)

- What are PersistentVolumes? (2’25”)

- The difference between local and network storage (4’11”)

- How has storage evolved over the years? (6’30”)

- NVMe performance on a network (9’10”)

- What is the Kubernetes CSI driver? (11’45”)

- Demo: NVMe storage via the Kubernetes CSI driver (16’18”)

- Questions and answers (30’10”)

These are the slides presented at the meetup.

Further reading

- Considerations for Running Stateful Apps on Kubernetes

- Enabling Persistent Storage for Docker and Kubernetes on Oracle Cloud

- Kubernetes Networking: How to Write Your Own CNI Plug-in with Bash

About the expert