Multi-Datacenter as a Cloud Foundry Deployment Pattern

The accelerated adoption of multi-datacenter environments in recent years is not accidental. Addressing the diverse needs of modern enterprises, such environments mitigate the risk of data loss or downtime and bring the benefits of different clouds to the table. At the same time, multi-datacenter governance is complex and requires a high level of expertise. This article focuses on a multi-datacenter model for Cloud Foundry deployments, its implementation, and the challenges along the way.

Why multiple data centers?

Companies choose multi-datacenter deployments for a number of reasons. In many cases, the decision is driven by:

- Optimizing fault tolerance through redundancy

- Bringing applications and data closer to end users

- Reducing dependence on a single vendor

- Complying with security and legal requirements

The approach is especially relevant today for globally distributed enterprises striving for effective workload management. Well-known companies operating Cloud Foundry across multiple data centers include Warner Music Group, Fidelity, and Comic Relief.

Cloud Foundry deployment patterns

Users have several options for installing and running Cloud Foundry. In terms of platform deployment, the commonly implemented architectural patterns are:

- a single installation in one data center

- a single installation split across multiple availability zones within a data center

- multiple installations in multiple data centers

Multiple data centers for the PaaS

Managing two separate Cloud Foundry deployments, either active-passive or active-active, in data centers that may be served by different infrastructure providers is fairly common today. In this scenario, teams typically replicate a Cloud Foundry installation and deploy all applications to each location. Traffic is then load balanced between the environments.
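The difference between the two modes comes down to which installations receive traffic. The toy model below is a minimal sketch, not how any particular load balancer works: the `Deployment` class and its `healthy` flag are hypothetical, and round-robin is just one assumed distribution strategy (real setups usually rely on a global load balancer or DNS with health checks).

```python
from dataclasses import dataclass
from itertools import cycle


@dataclass
class Deployment:
    """One Cloud Foundry installation in one data center (hypothetical model)."""
    name: str
    healthy: bool = True


def active_passive(primary: Deployment, standby: Deployment) -> Deployment:
    """All traffic goes to the primary; the standby takes over only if the primary is down."""
    for candidate in (primary, standby):
        if candidate.healthy:
            return candidate
    raise RuntimeError("no healthy Cloud Foundry deployment available")


def active_active(deployments: list[Deployment], n_requests: int) -> list[str]:
    """Requests are spread round-robin across every healthy deployment."""
    healthy = [d for d in deployments if d.healthy]
    if not healthy:
        raise RuntimeError("no healthy Cloud Foundry deployment available")
    rotation = cycle(healthy)
    return [next(rotation).name for _ in range(n_requests)]


east = Deployment("dc-east")
west = Deployment("dc-west")
print(active_active([east, west], 4))  # ['dc-east', 'dc-west', 'dc-east', 'dc-west']
east.healthy = False
print(active_passive(east, west).name)  # dc-west
print(active_active([east, west], 2))  # ['dc-west', 'dc-west']
```

In active-active mode both installations carry load all the time, so capacity in each data center must cover the failover case; in active-passive mode the standby sits idle until it is needed.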

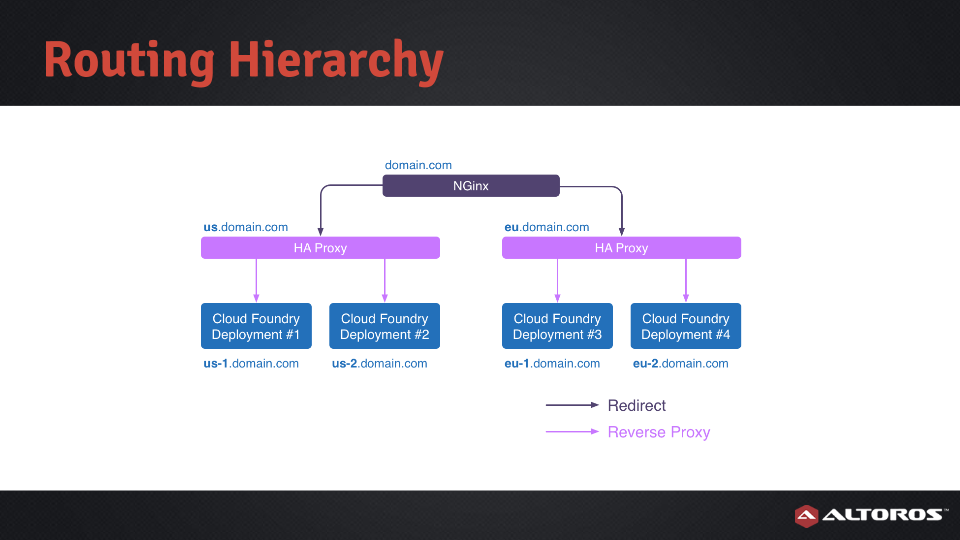

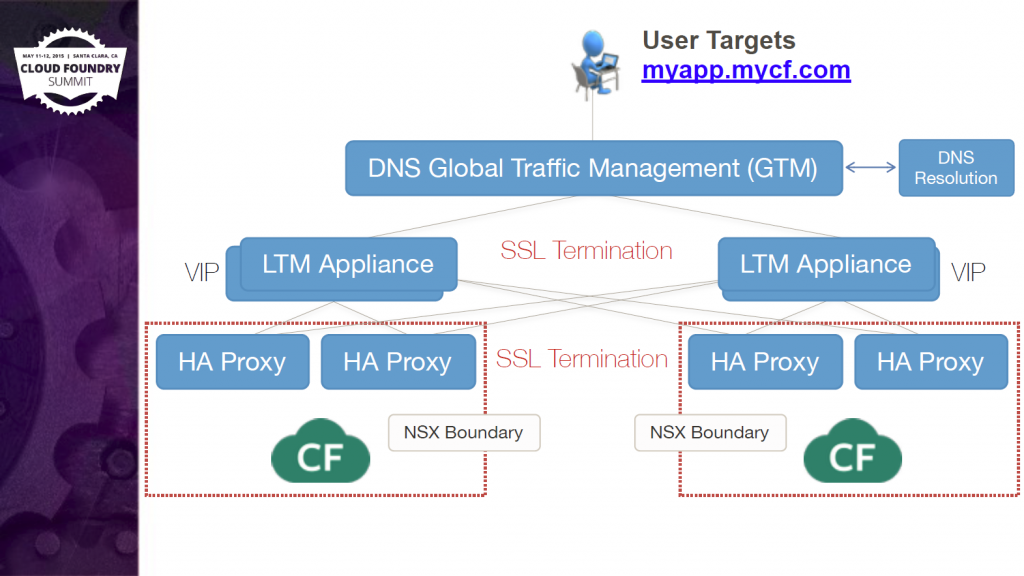

The example below represents traffic flow in a dual Pivotal CF deployment.

Source: Pivotal

Source: PivotalWhen working with multiple Cloud Foundry deployments, geolocation-based load distribution can be used to redirect requests to the physically closest cluster.
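Geolocation-based distribution can be approximated by computing the great-circle (haversine) distance from the client to each cluster and picking the nearest one. This is a minimal sketch under stated assumptions: the cluster names and coordinates are hypothetical, and in practice the lookup is usually performed by a geo-aware DNS or global load-balancing service rather than application code.

```python
import math

# Hypothetical cluster locations as (latitude, longitude) in degrees.
CLUSTERS = {
    "cf-us-east": (39.0, -77.5),
    "cf-eu-west": (53.3, -6.3),
    "cf-ap-south": (1.35, 103.8),
}

EARTH_RADIUS_KM = 6371.0


def haversine_km(a, b):
    """Great-circle distance in kilometres between two (lat, lon) points."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def closest_cluster(client, clusters=CLUSTERS, exclude=frozenset()):
    """Name of the nearest cluster, skipping any listed in `exclude` (e.g. failed sites)."""
    candidates = {name: loc for name, loc in clusters.items() if name not in exclude}
    if not candidates:
        raise RuntimeError("no cluster available")
    return min(candidates, key=lambda name: haversine_km(client, candidates[name]))


print(closest_cluster((48.85, 2.35)))  # cf-eu-west (a client in Paris)
```

The `exclude` parameter hints at why this pairs well with multiple installations: if the nearest site is down, requests fall through to the next closest one.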

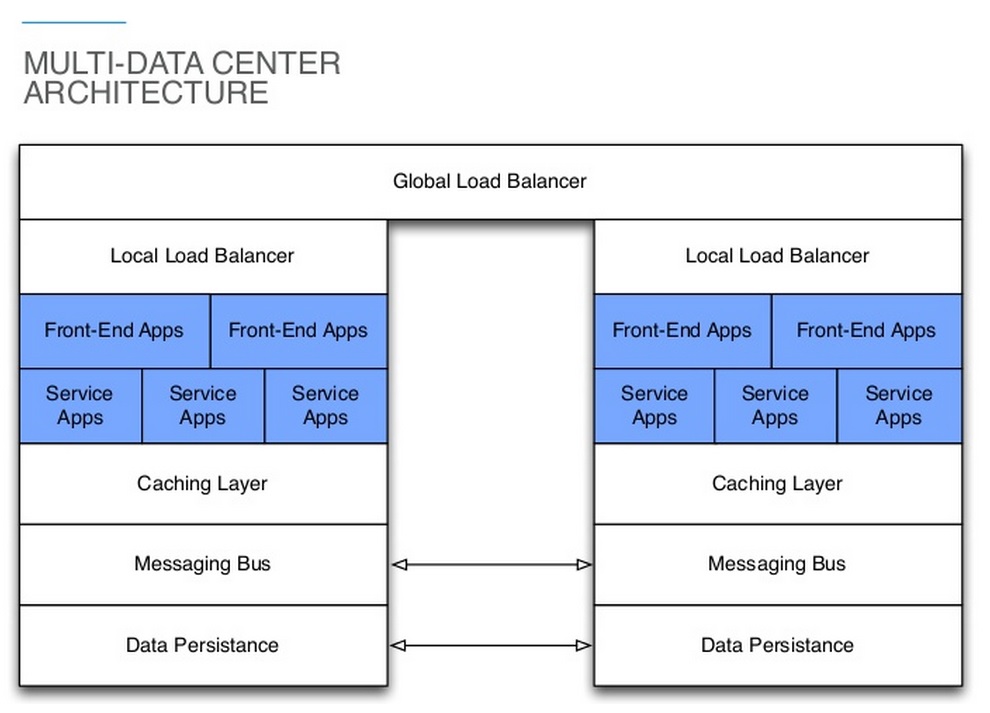

In an earlier version of multiple data center design for CF v1, Warner Music chose architecture composed of individual Cloud Foundry deployments (highlighted in blue on the illustration below) with Cassandra responsible for data persistence. Within each data center, front-end and service applications were separated between different Cloud Foundry clusters.

Source: Warner Music

Source: Warner MusicThe separation based on application intent—public, internal, or private—let the company to avoid complex configuration of load balancing.
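One way to see why intent-based separation simplifies routing: once every data center runs a dedicated cluster per intent, choosing where an app goes is a plain lookup instead of a set of load-balancer rules. The sketch below is illustrative only; the data center names and the `api.<intent>.<dc>.example.com` naming scheme are hypothetical and not taken from Warner Music's setup.

```python
from enum import Enum


class Intent(Enum):
    PUBLIC = "public"      # customer-facing front ends
    INTERNAL = "internal"  # services consumed by other company applications
    PRIVATE = "private"    # restricted workloads


DATACENTERS = ["dc1", "dc2"]  # hypothetical site names

# Hypothetical layout: one Cloud Foundry API endpoint per (data center, intent) pair.
CLUSTERS = {
    (dc, intent): f"https://api.{intent.value}.{dc}.example.com"
    for dc in DATACENTERS
    for intent in Intent
}


def target_cluster(datacenter: str, intent: Intent) -> str:
    """Return the endpoint of the cluster that hosts apps with the given intent."""
    try:
        return CLUSTERS[(datacenter, intent)]
    except KeyError:
        raise ValueError(f"no {intent.value} cluster in {datacenter}") from None


print(target_cluster("dc2", Intent.INTERNAL))  # https://api.internal.dc2.example.com
```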

Be ready for multiple data centers

Despite the advantages of a multi-datacenter deployment, operating Cloud Foundry across different environments brings its own challenges. Important things to take care of when implementing such a scheme include:

- Automating Cloud Foundry deployment

- Automating and synchronizing application deployment

- Synchronizing data services

- Synchronizing service brokers
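Automating and synchronizing application deployment can start as simply as applying one manifest to every installation with the same sequence of cf CLI commands. The sketch below is one hedged way to script that: the `Foundation` class, API URLs, org, and space are hypothetical, and it assumes cf CLI v7 or later, where `cf auth` without arguments reads credentials from the `CF_USERNAME` and `CF_PASSWORD` environment variables. It defaults to a dry run that only prints the commands.

```python
import subprocess
from dataclasses import dataclass


@dataclass(frozen=True)
class Foundation:
    """One Cloud Foundry installation (hypothetical values below)."""
    name: str
    api_url: str
    org: str
    space: str


FOUNDATIONS = [
    Foundation("dc1", "https://api.sys.dc1.example.com", "acme", "production"),
    Foundation("dc2", "https://api.sys.dc2.example.com", "acme", "production"),
]


def push_plan(foundation: Foundation, manifest: str) -> list[list[str]]:
    """cf CLI commands that deploy one manifest to one foundation."""
    return [
        ["cf", "api", foundation.api_url],
        ["cf", "auth"],  # assumes CF_USERNAME / CF_PASSWORD are set (cf CLI v7+)
        ["cf", "target", "-o", foundation.org, "-s", foundation.space],
        ["cf", "push", "-f", manifest],
    ]


def push_everywhere(manifest: str, dry_run: bool = True) -> dict[str, list[list[str]]]:
    """Roll the same manifest out to each foundation in turn.

    With dry_run=False, check=True raises on the first failing command,
    so a broken release stops before it reaches the next data center.
    """
    plans = {f.name: push_plan(f, manifest) for f in FOUNDATIONS}
    for name, commands in plans.items():
        for cmd in commands:
            if dry_run:
                print(f"[{name}]", " ".join(cmd))
            else:
                subprocess.run(cmd, check=True)
    return plans


push_everywhere("manifest.yml")  # first line printed: [dc1] cf api https://api.sys.dc1.example.com
```

Rolling out site by site, rather than in parallel, trades speed for safety: one data center keeps serving the previous version if the push fails in the other.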

To orchestrate the Cloud Foundry life cycle across multiple data centers, you usually need at least one BOSH installation in each of them. With some additional work, central management through a single BOSH deployment is also an option; however, security concerns might be an obstacle here. We are going to discuss the tradeoffs of both methods in an upcoming publication.
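With one BOSH Director per data center, the same base manifest can be deployed everywhere while site-specific values live in separate variable files. The sketch below only builds BOSH CLI v2 command lines (`-e` environment alias, `-d` deployment name, `-n` non-interactive, `-o` ops file, `-l` vars file); the director aliases, file paths, and the `DataCenter` class are hypothetical assumptions, and `cf-deployment.yml` stands in for whatever base manifest you use.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataCenter:
    name: str
    bosh_env: str   # alias of this data center's BOSH Director (hypothetical)
    vars_file: str  # per-site values such as system domain and networks (hypothetical path)


SITES = [
    DataCenter("dc1", "bosh-dc1", "vars/dc1.yml"),
    DataCenter("dc2", "bosh-dc2", "vars/dc2.yml"),
]


def bosh_deploy_cmd(site: DataCenter, manifest: str = "cf-deployment.yml",
                    ops_files: tuple = (), deployment: str = "cf") -> list[str]:
    """Build the `bosh deploy` command for one site's own Director."""
    cmd = ["bosh", "-e", site.bosh_env, "-d", deployment, "-n", "deploy", manifest]
    for ops in ops_files:  # shared ops files keep every site structurally identical
        cmd += ["-o", ops]
    cmd += ["-l", site.vars_file]  # only the vars file differs between sites
    return cmd


for site in SITES:
    print(" ".join(bosh_deploy_cmd(site, ops_files=("ops/site-overrides.yml",))))
```

Keeping a Director in each data center means each site's credentials never leave it, which is one way to sidestep the security concerns of a single central BOSH; the cost is keeping the shared manifest and ops files in sync.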
